Agalia Tan

@agaaalia

Senior Strategist at We Are Social

RADAR Chapter Lead for Singapore

social media in 2026 and beyond

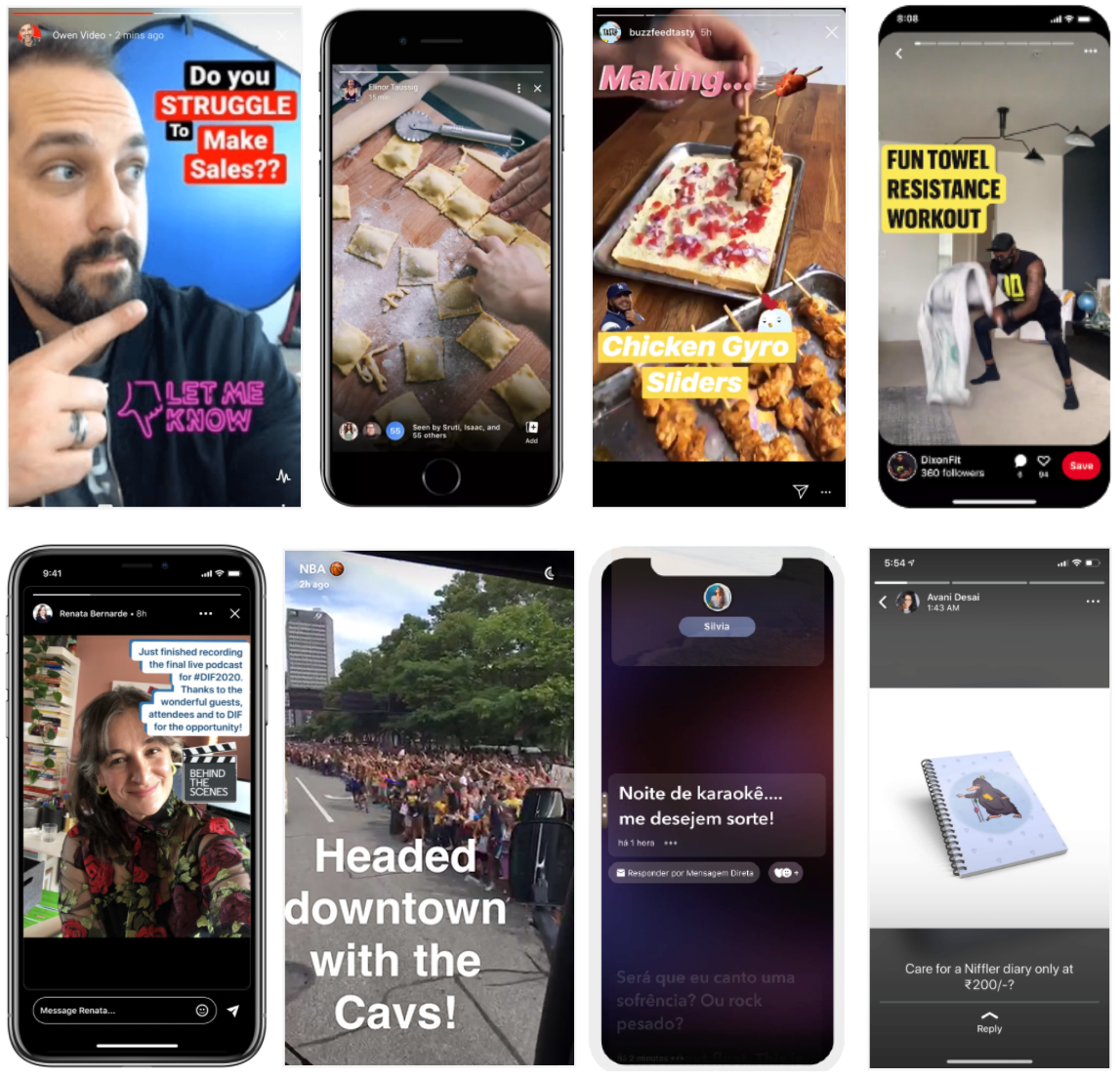

social as a funnel to IRL, to the messiness of life

on TikTok specifically, the parallel to channel surfing is spot on, and it ties directly to the idea that social has become television