Sublime

An inspiration engine for ideas

Zuck summarised the needs for LLMs in enterprise well: https://t.co/Pe5rs4AUX1

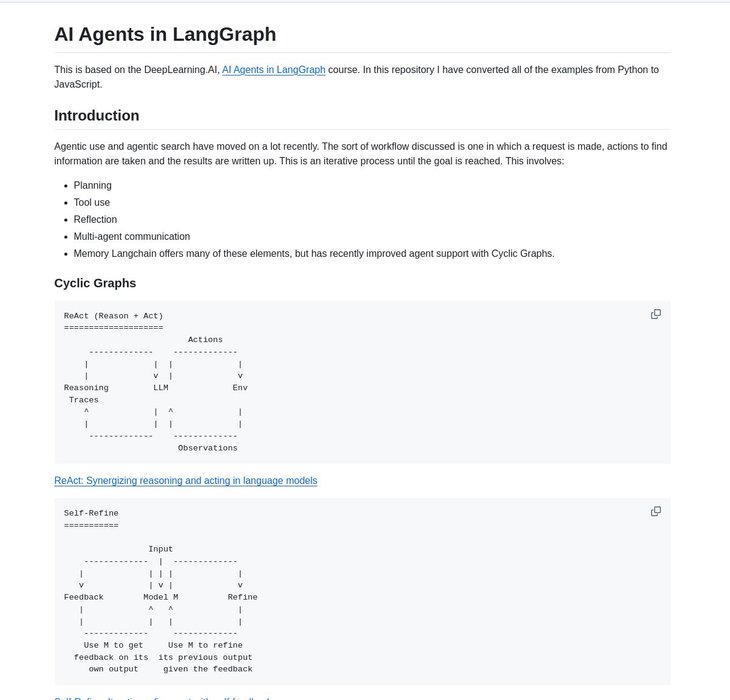

🤖⚡ JavaScript AI Agents

JavaScript implementation of https://t.co/Ry28jeeGzn's AI Agents in LangGraph course brings advanced agent architectures to JS developers. Features multi-agent systems, human-in-the-loop integration, and complex workflows powered by LangGraph's cyclic graphs.

Explore...

How Machine Learning Works

And now, here’s the intuitive, elegant answer to the big dilemma, the next step of learning that will move beyond univariate to multivariate predictive modeling, guided by both positive and negative cases: Keep going. So far, we’ve established two risk groups. Next, in the low-risk group, find another factor that best...

Eric Siegel • Predictive Analytics
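Siegel's passage describes growing a decision tree. You split the cases on the single factor that best separates positive from negative outcomes, then keep splitting inside each resulting group. The following is a minimal Python sketch of that loop, not Siegel's own code. It assumes yes/no factors, a yes/no outcome, and Gini impurity as the "best split" measure, because the excerpt doesn't name a criterion. The names `grow`, `best_factor`, and `Node` are hypothetical. The sketch also recurses into every group, not only the low-risk one.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Row = Dict[str, bool]  # hypothetical record: factor name -> yes/no


def gini(labels: List[bool]) -> float:
    """Gini impurity of binary outcomes: 0.0 when the group is all one class."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_factor(rows: List[Row], labels: List[bool], factors: List[str]) -> Optional[str]:
    """Pick the factor whose yes/no split most lowers weighted Gini impurity (assumed criterion)."""
    best, best_score = None, gini(labels)
    for f in factors:
        yes = [y for r, y in zip(rows, labels) if r[f]]
        no = [y for r, y in zip(rows, labels) if not r[f]]
        if not yes or not no:
            continue  # a split that leaves one side empty separates nothing
        score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(labels)
        if score < best_score:
            best, best_score = f, score
    return best


@dataclass
class Node:
    risk: float                   # share of positive cases in this group
    factor: Optional[str] = None  # factor used to split this group, if any
    yes: Optional["Node"] = None  # subgroup where the factor holds
    no: Optional["Node"] = None   # subgroup where it does not


def grow(rows, labels, factors, depth=0, max_depth=3, min_size=2) -> Node:
    """Recursively split each non-empty group on its best remaining factor ("keep going")."""
    node = Node(risk=sum(labels) / len(labels))
    if depth >= max_depth or len(labels) < min_size:
        return node
    f = best_factor(rows, labels, factors)
    if f is None:
        return node  # no factor improves this group, so it stays a leaf
    rest = [g for g in factors if g != f]
    node.factor = f
    for side, keep in (("yes", True), ("no", False)):
        idx = [i for i, r in enumerate(rows) if r[f] == keep]
        child = grow([rows[i] for i in idx], [labels[i] for i in idx], rest,
                     depth + 1, max_depth, min_size)
        setattr(node, side, child)
    return node
```

Consider four cases where factor `a` alone determines the outcome. Here `grow` splits once on `a` and stops, leaving one group with risk 1.0 and one with risk 0.0. The `max_depth` and `min_size` limits are arbitrary illustrative guards against overfitting.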

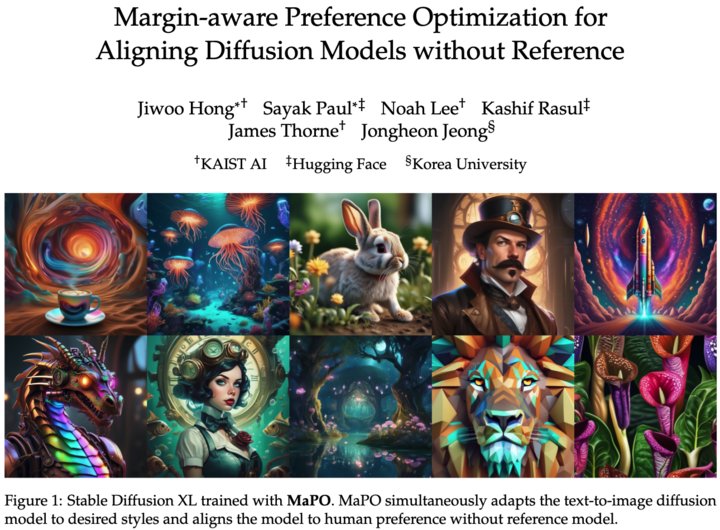

Introducing MaPO, a memory-efficient technique for aligning T2I diffusion models on preference data 🔥

We eliminate the need to have a reference model when performing alignment fine-tuning.

Code, models, datasets, and paper are up...

Good math-based ideas 💡 will always outperform subjective and contrived approaches.

Conformal Prediction was developed from ideas in Kolmogorov complexity, through conversations between Vovk (Kolmogorov's last PhD student) and Kolmogorov himself. https://t.co/8l6zJFBpXr
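The tweet covers only the history of the method. For readers new to it, here is a minimal sketch of split (inductive) conformal prediction for regression. This is a later, simpler variant, not Vovk's original transductive formulation. The sketch assumes a held-out calibration set, absolute residuals as the nonconformity score, and a hypothetical function name `split_conformal_interval`. If calibration and test points are exchangeable, the returned interval contains the true value with probability at least 1 - alpha.

```python
import math
from typing import Sequence, Tuple


def split_conformal_interval(cal_pred: Sequence[float], cal_true: Sequence[float],
                             new_pred: float, alpha: float = 0.1) -> Tuple[float, float]:
    """Interval around new_pred with >= 1 - alpha marginal coverage (split conformal sketch)."""
    # Nonconformity score (assumed): absolute residual on each calibration point.
    scores = sorted(abs(y - p) for p, y in zip(cal_pred, cal_true))
    n = len(scores)
    # Finite-sample-corrected rank: the ceil((n + 1) * (1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    q = math.inf if k > n else scores[k - 1]  # too few calibration points -> unbounded interval
    return new_pred - q, new_pred + q
```

The design choice that makes this "math-based" is the `(n + 1)` correction. The coverage guarantee then holds for any underlying model and any sample size, with no distributional assumptions beyond exchangeability.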

Distilling the Knowledge in a Neural Network #NIPS2014

By Geoffrey Hinton @OriolVinyalsML @jeffdean

On transferring knowledge from an ensemble, or from a large, highly regularized model, into a smaller, distilled model

https://t.co/PHklruxCUC

ML Review 💙💛 • x.com
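Below is a minimal pure-Python sketch of the per-example training objective the paper describes. It softens both teacher and student logits with a temperature T and takes the cross-entropy between them. It then adds the ordinary cross-entropy on the true label at T = 1 and multiplies the soft term by T² so its gradients keep a comparable scale. The defaults `T=4.0` and `alpha=0.5` are illustrative assumptions, not values from the paper. The function names are hypothetical, and batching and backpropagation are omitted.

```python
import math
from typing import List


def softmax(logits: List[float], T: float = 1.0) -> List[float]:
    """Softmax of logits / T, computed stably by subtracting the max."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      hard_label: int, T: float = 4.0, alpha: float = 0.5) -> float:
    """Weighted soft-target plus hard-label cross-entropy for one example (sketch)."""
    # Soft targets: teacher (e.g. an ensemble's averaged logits) softened at temperature T.
    p_teacher = softmax(teacher_logits, T)
    # Student softened at the same temperature, as the paper specifies.
    p_student_T = softmax(student_logits, T)
    soft_ce = -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student_T))
    # Hard-label term uses the same student logits at T = 1.
    hard_ce = -math.log(softmax(student_logits, 1.0)[hard_label])
    # Soft-target gradients scale as 1/T^2, so multiply by T^2 to keep the terms balanced.
    return alpha * (T ** 2) * soft_ce + (1 - alpha) * hard_ce
```

A higher T spreads the teacher's probability mass over wrong-but-plausible classes. Those relative probabilities are the extra signal the smaller model learns from.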

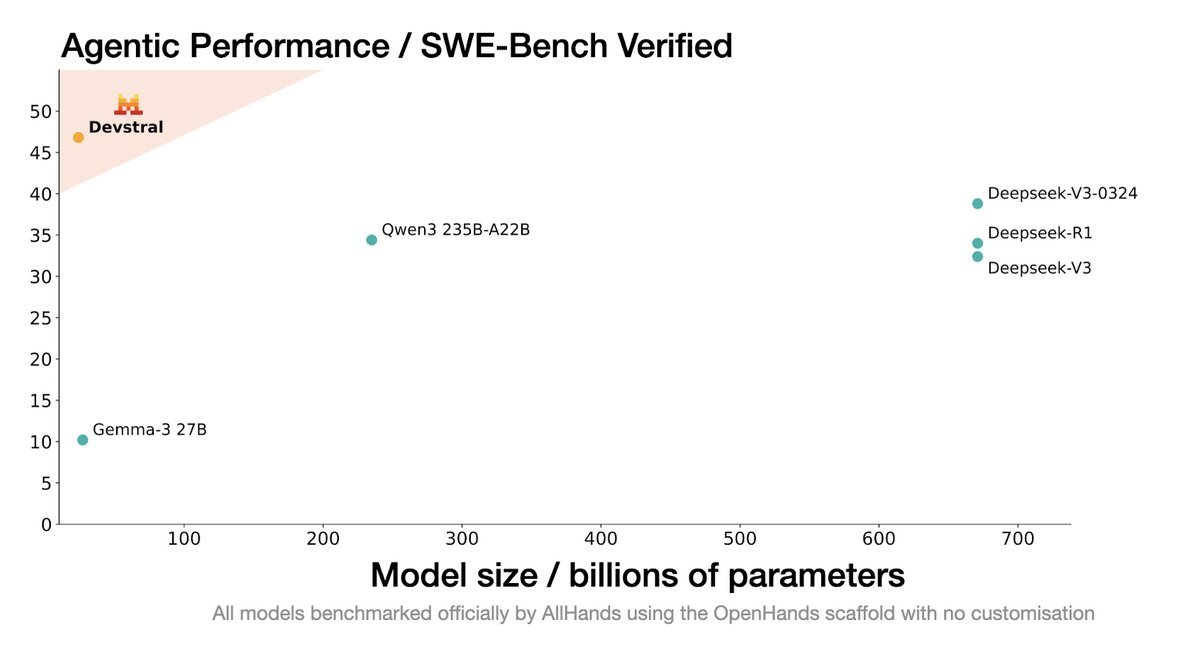

Meet Devstral, our SOTA open model designed specifically for coding agents and developed with @allhands_ai

https://t.co/LwDJ04zapf https://t.co/Mm4lYZobGO