Sublime

An inspiration engine for ideas

Your team in 2025 trying to A/B‑test a new onboarding tooltip:

Cross‑Functional OKR Sync

3 Notion pages and a Miro

run a pre-mortem

"let's push this to q3"

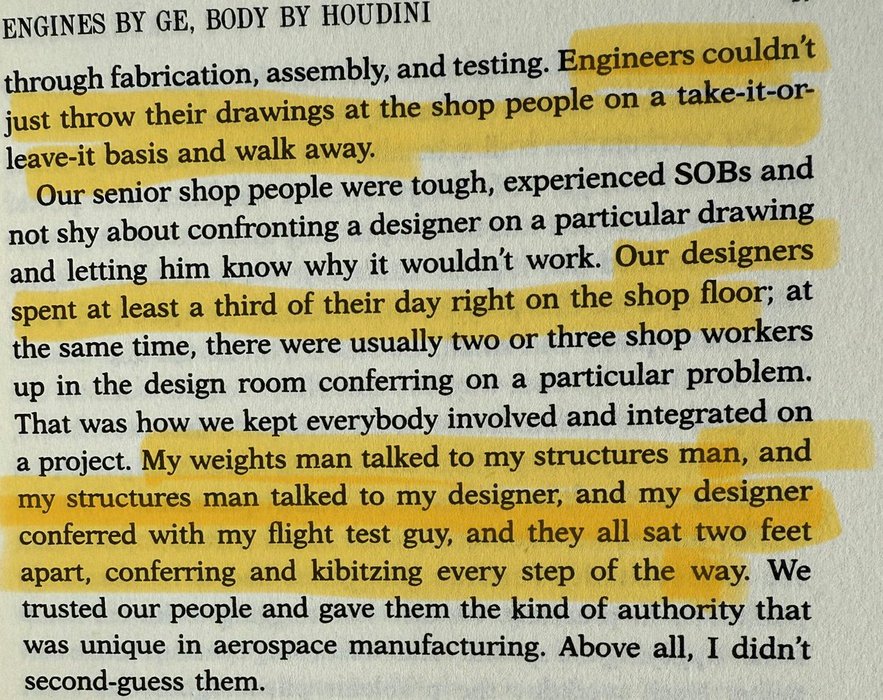

Building stealth warplanes in 1976: https://t.co/WvUItmgfHc

2501.09223v1

The text outlines foundational concepts of large language models, focusing on pre-training methods, model architectures, generative models, prompting strategies, and alignment techniques in natural language processing.

Gemini Pretraining

It discusses the scaling of large language models, focusing on compute budgets, scaling laws, inference efficiency, and implications for training and model size optimization in various applications.

vladfeinberg.com

the perplexity brand feels alive and fresh because we don't have firm guidelines for our designers. they have creative latitude and aren't made to suffer the indignities of stifling product and design reviews.

this freedom is the critical ingredient to attracting top .1%

Dmitry Shevelenko

x.com

Altman is pathetic. First, he says, "We are now confident we know how to build AGI as we have traditionally understood it," then "invents" dumb reminders, dumb "memory" in ChatGPT, dumb search hoping to steal from Google, and now a dumb social network hoping to steal from Musk. https://t.co/2rvtku2bgp

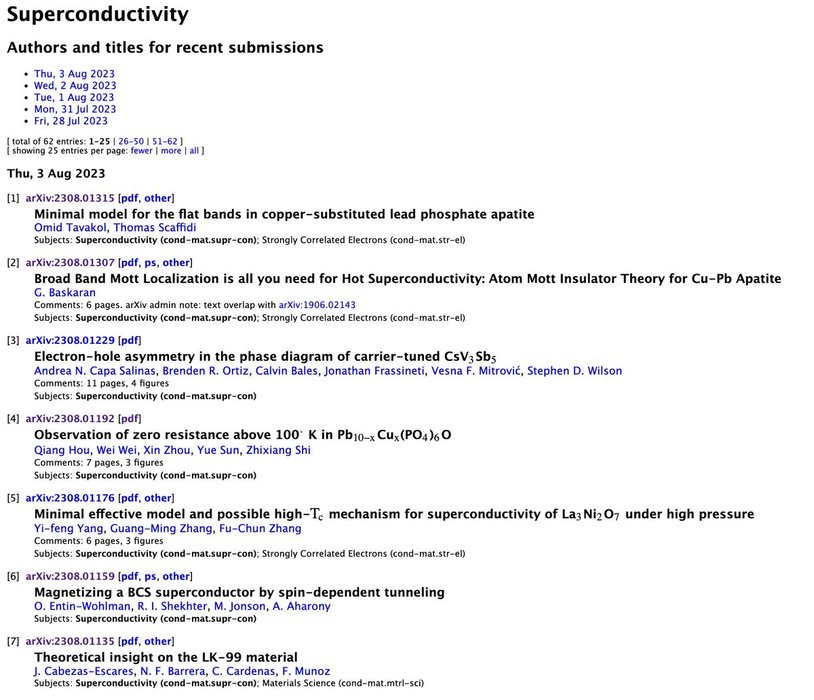

If you think twitter is blowing up over #LK99, you should see arXiv! https://t.co/3hKfqTNoOE

Alex

@chernikov