AI

We can also imagine interfaces that support iterative shaping: “More like this, less like that.” The commonality is that these systems take as a given that initial prompts are often just the starting point for a shared search process.

We don’t need humans to become hyper-specific, up-front planners. We need systems that meet us where we are: in the ...

🌀🗞 The FLUX Review, Ep. 190
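Classic information retrieval has a well-known way to turn “more like this, less like that” into a concrete search step: Rocchio-style relevance feedback. The query vector is nudged toward items the user liked and away from items they rejected. The quoted piece does not describe this technique; it is swapped in here as one possible illustration. The toy 3-dimensional embeddings, the `rocchio_update` name, and the weights `alpha`, `beta`, `gamma` (set to the common textbook defaults 1.0, 0.75, 0.15) are all assumptions.

```python
import math

Vector = list[float]

def mean(vectors: list[Vector], dim: int) -> Vector:
    """Component-wise mean; a zero vector if there are no vectors."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def rocchio_update(query: Vector, liked: list[Vector], disliked: list[Vector],
                   alpha: float = 1.0, beta: float = 0.75,
                   gamma: float = 0.15) -> Vector:
    """Move the query toward liked items ("more like this") and away from
    disliked items ("less like that"). Negative components are not clipped."""
    dim = len(query)
    pos = mean(liked, dim)
    neg = mean(disliked, dim)
    return [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical item embeddings for one round of feedback.
items = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.0, 1.0, 0.0],
    "c": [0.0, 0.0, 1.0],
}
query = [0.5, 0.5, 0.0]  # initially "a" and "b" tie

# The user says: more like "a", less like "b".
new_query = rocchio_update(query, liked=[items["a"]], disliked=[items["b"]])
ranking = sorted(items, key=lambda k: cosine(new_query, items[k]), reverse=True)
print(new_query, ranking)
```

Before feedback, `a` and `b` score the same. After one round, `a` clearly leads. Each further round repeats the update, which is the “shared search process” in miniature.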

tl;dr: Rather than writing boilerplate code (which AI now handles), juniors must focus on higher-level skills like debugging, system design, and effective collaboration. Companies that cut junior positions entirely risk their future talent pipeline. The most successful junior developers will use AI as a learning tool rather than a crutch, verifying...

AI Won't Kill Junior Devs - But Your Hiring Strategy Might

Why? Well, here’s how a seasoned fact-checker would look at this page. They would notice that the overwhelming consensus is that it is asbestos, but that there is a TikTok video as the very last result that mentions a specific person (Stillman) and a specific alternate theory (gypsum). They would then track that down. Part of their understanding is...

Yes, LLMs Can Be Better at Search Than Traditional Search

High specificity (even if lower quality): if cross-checks lead to high-quality sources, you are on a productive path; if they lead to low-quality sources, you are not on the right path.

They look like software, but they act like people. And just like with people, you can’t just hire someone and pop them in a seat; you have to train them and create systems around them that make their outputs verifiable.

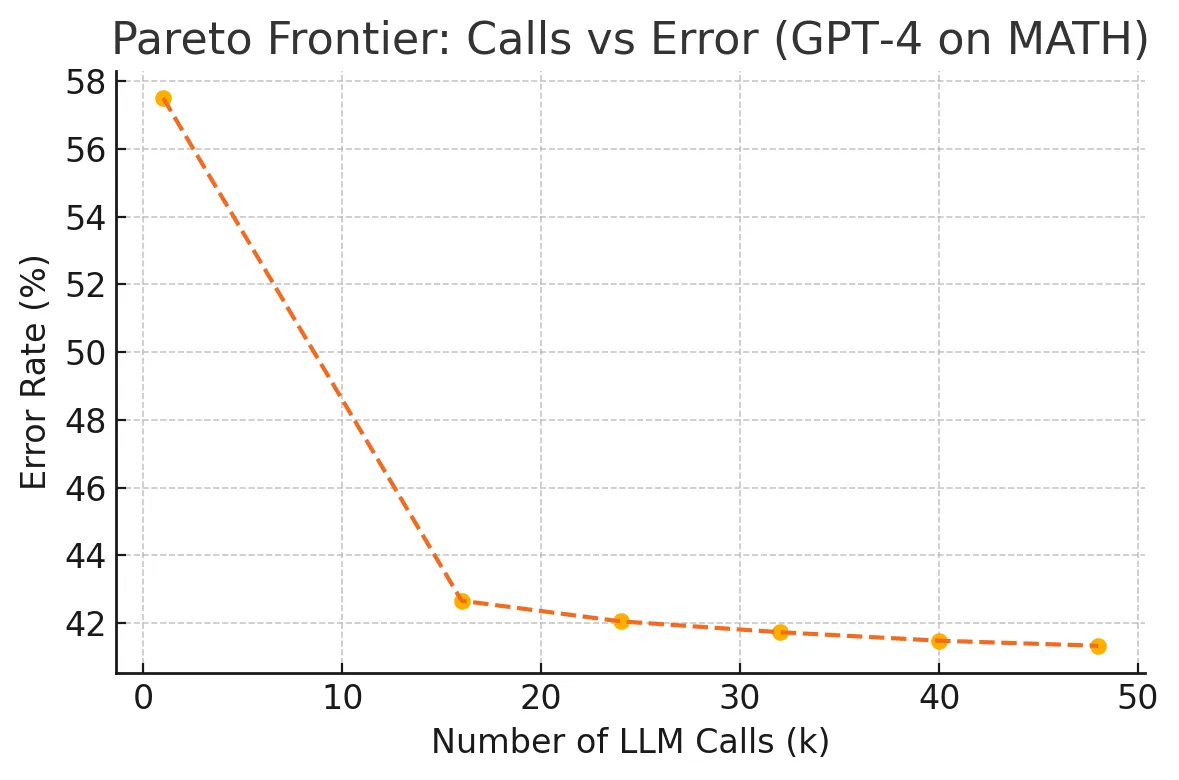

Which means there’s a Pareto frontier between the number of LLM calls you’ll need to make for verification and the error rate each LLM introduce...

Rohit Krishnan • Working with LLMs: A Few Lessons
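A back-of-the-envelope model makes that trade-off concrete. It is a sketch built on assumptions that do not come from the essay:

- A generator call is wrong with probability `gen_error`.
- Each verifier call independently misses an error with probability `miss_rate`.
- Verifiers never reject correct answers.

Under these assumptions, `k` checks leave a residual error of `gen_error * miss_rate**k`. The “cheap” and “strong” verifier profiles and all the numbers are hypothetical. Real LLM errors are often correlated, so independence makes the picture optimistic.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pipeline:
    name: str
    calls: int    # total LLM calls: 1 generator call + verifier calls
    error: float  # probability a wrong answer survives verification

def pipeline(name: str, gen_error: float, miss_rate: float,
             cost_per_check: int, checks: int) -> Pipeline:
    # Assumes verifier misses are independent, so each check multiplies
    # the surviving error by miss_rate.
    return Pipeline(name, 1 + cost_per_check * checks,
                    gen_error * miss_rate ** checks)

def pareto_frontier(options: list[Pipeline]) -> list[Pipeline]:
    """Keep the options that no other option beats on both calls and error."""
    def dominates(a: Pipeline, b: Pipeline) -> bool:
        return (a.calls <= b.calls and a.error <= b.error
                and (a.calls < b.calls or a.error < b.error))
    return sorted((p for p in options
                   if not any(dominates(q, p) for q in options)),
                  key=lambda p: p.calls)

GEN_ERROR = 0.10  # hypothetical: 10% of first drafts are wrong

# Cheap checker: 1 call each, misses half of the errors (hypothetical).
options = [pipeline(f"cheap x{k}", GEN_ERROR, miss_rate=0.5,
                    cost_per_check=1, checks=k) for k in range(4)]
# Strong checker: 3 calls each, misses 10% of the errors (hypothetical).
options += [pipeline(f"strong x{k}", GEN_ERROR, miss_rate=0.1,
                     cost_per_check=3, checks=k) for k in range(1, 4)]

for p in pareto_frontier(options):
    print(f"{p.name:10} calls={p.calls:2}  error={p.error:.4f}")
```

With these made-up numbers, three cheap checks cost the same four calls as one strong check but leave more error, so `cheap x3` falls off the frontier. Every remaining point buys lower error with more calls. Which one to pick depends on how much error you can tolerate.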

This means you have to add evaluation frameworks and human-in-the-loop processes, design for graceful failure, use LLMs for probabilistic guidance rather than deterministic answers, or all of the above, and hope they catch most of what you care about, knowing that things will still slip through.

Working with LLMs: A Few Lessons
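Several of those pieces can fit together around a single call: an evaluation check, bounded retries, graceful failure, and a human-in-the-loop fallback. The minimal sketch below assumes a hypothetical task (pulling a year out of a sentence) and hypothetical names (`extract_year`, `call_llm`, `review_queue`). The model’s output is parsed and checked by a cheap deterministic evaluation. It is retried a few times and, if it still fails, routed to a person instead of being guessed. The “LLM” is a scripted fake so the example runs offline.

```python
import json
from typing import Callable, Optional

def extract_year(text: str, call_llm: Callable[[str], str],
                 review_queue: list[str], max_attempts: int = 3) -> Optional[dict]:
    """Ask a model for structured output, check it, retry, then fall back to a human.

    `call_llm` is a hypothetical stand-in for whatever client you use: it takes
    a prompt and returns the model's raw text.
    """
    prompt = f'Return JSON like {{"year": 1999}} for the year mentioned in: {text}'
    for _ in range(max_attempts):
        raw = call_llm(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed output counts as a miss; try again
        # Evaluation step: a cheap deterministic check that the answer is an
        # integer year that actually appears in the source text.
        year = data.get("year") if isinstance(data, dict) else None
        if isinstance(year, int) and str(year) in text:
            return data
    # Graceful failure: rather than guessing, hand the case to a person.
    review_queue.append(text)
    return None

# A scripted fake model (malformed, then wrong, then right) so this runs offline.
replies = iter(["not json", '{"year": 1850}', '{"year": 1969}'])

def fake_llm(prompt: str) -> str:
    return next(replies)

def always_wrong(prompt: str) -> str:
    return '{"year": 2000}'

queue: list[str] = []
print(extract_year("Apollo 11 landed in 1969.", fake_llm, queue))      # {'year': 1969}
print(extract_year("Apollo 11 landed in 1969.", always_wrong, queue))  # None
print(queue)  # ['Apollo 11 landed in 1969.']
```

The check here only catches one kind of mistake, which matches the passage’s point: it catches some of what you care about, and things can still slip through.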

LLMs are inherently probabilistic, unlike the code we’re used to running. That’s also what makes using LLMs so cool: they can do different things.
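Here is a simplified view of where that randomness comes from. A model assigns scores (logits) to candidate next tokens, and a decoder samples from the softmax of those scores divided by a temperature. The sketch is a textbook illustration of temperature sampling, not any particular model’s decoder. The three tokens and their logits are made up.

```python
import math
import random

def softmax(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(tokens: list[str], logits: list[float], temperature: float,
           rng: random.Random) -> str:
    """Draw one token according to the temperature-scaled probabilities."""
    return rng.choices(tokens, weights=softmax(logits, temperature), k=1)[0]

tokens = ["cat", "dog", "fox"]  # hypothetical candidate tokens
logits = [2.0, 1.5, 0.5]        # hypothetical model scores

rng = random.Random(0)
for t in (0.2, 1.0):
    draws = [sample(tokens, logits, t, rng) for _ in range(10)]
    print(t, draws)
```

At temperature 0.2 the draws are mostly `cat`. At 1.0 the same scores yield a noticeable mix, which is why an identical prompt can produce different outputs.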

https://mikecaulfield.substack.com/p/minor-sift-toolbox-for-claude-37?open=false#%C2%A7instructions-text

# Fact-Checking and Historical Analysis Instructions (Full Check)

## Overview

You are designed to act as a meticulous fact-checking assistant that analyzes claims about historical events, images, or artifacts, then responds with a comprehensive, st...

The entire tech community is under the impression that AI coding will result in power flowing from engineers to “idea guys.”

Wrong—it will always flow to whatever still has scarcity: those who know how to get distribution

Nikita Bier • x.com

That’s what Treasure Map AI does. It curates to elevate what matters and exclude what doesn’t. It empowers you with better judgement. And once you see that it works, it leaves you curious for more.