AI

Much has been made of next-token prediction, the hamster wheel at the heart of everything. (Has a simpler mechanism ever attracted richer investments?) But, to predict the next token, a model needs a probable word, a likely sentence, a virtual reason — a beam running out into the darkness. This ghostly superstructure, which informs every next-token prediction, is the model, the thing that grows on the trellis of code; I contend it is a map of potential reasons.

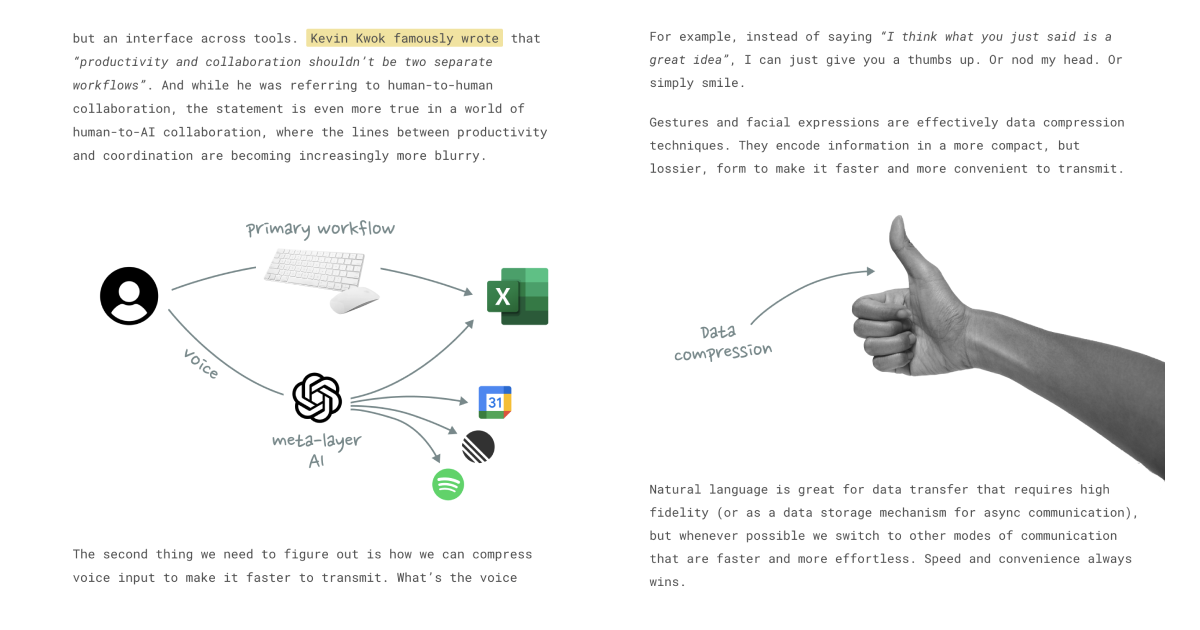

In this view, the emergence of super-capable new models is less about reasoning and more about “reasons-ing”: modeling the different things humans can want, along with the different ways they can pursue them … in writing.

Reasons-ing, not reasoning.

We Did the Math on AI’s Energy Footprint. Here’s the Story You Haven’t Heard.

technologyreview.com

But here’s the problem: These estimates don’t capture the near future of how we’ll use AI. In that future, we won’t simply ping AI models with a question or two throughout the day, or have them generate a photo. Instead, leading labs are racing us toward a world where AI “agents” perform tasks for us without our supervising their every move.
