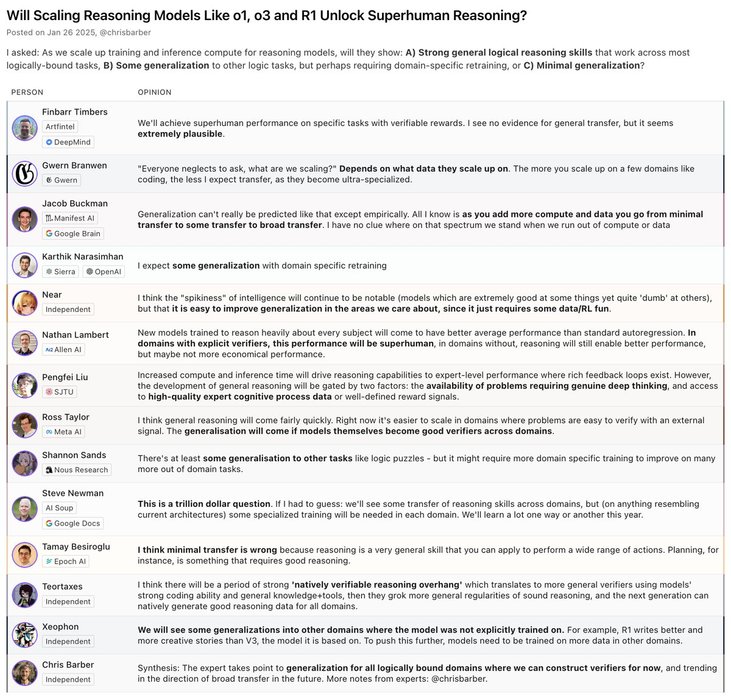

Will scaling reasoning models like o1, o3 and R1 unlock superhuman reasoning?

I asked Gwern + former OpenAI/DeepMind researchers.

Warning: long post.

As we scale up training and inference compute for reasoning models, will they show:

A) Strong...

something obviously true to me that nobody believes:

90% of frontier ai research is already on arxiv, x, or company blog posts.

q* is just STaR

search is just GoT/MCTS

continuous learning is clever graph retrieval

+1 oom efficiency gains in...

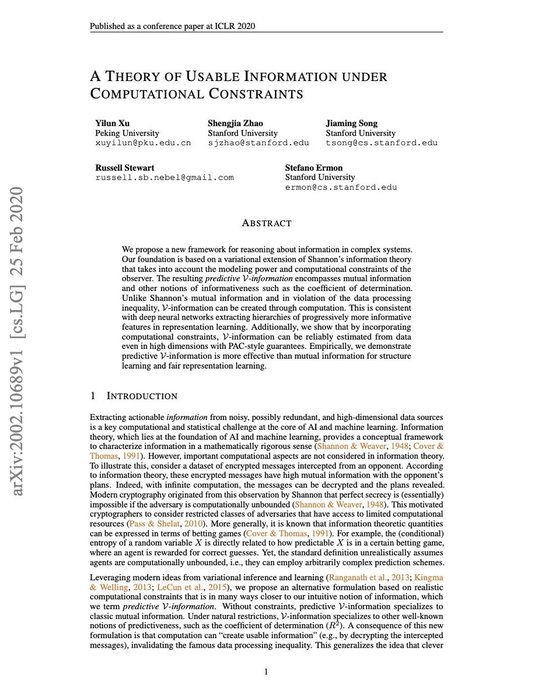

# A new type of information theory

this paper is not super well-known but has changed my opinion of how deep learning works more than almost anything else

it says that we should measure the amount of information available in some representation based on how *extractable* it is, given finite...

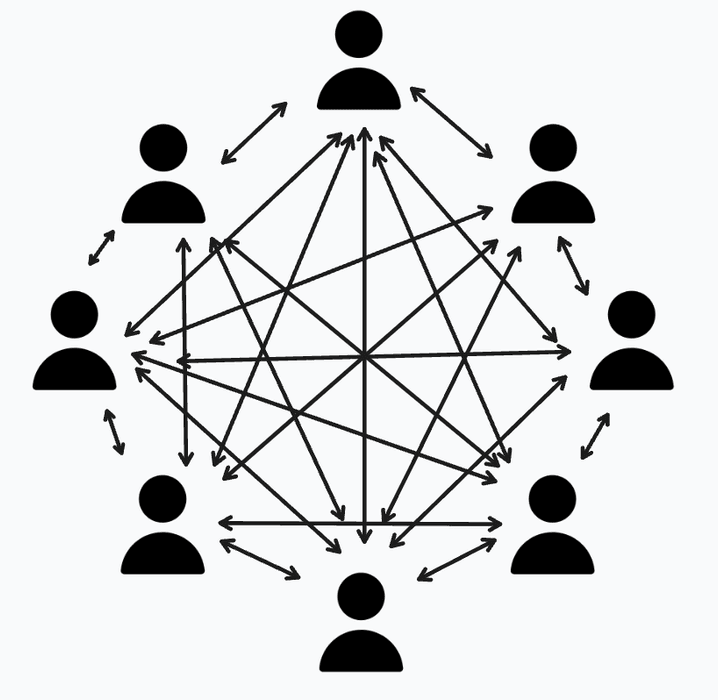

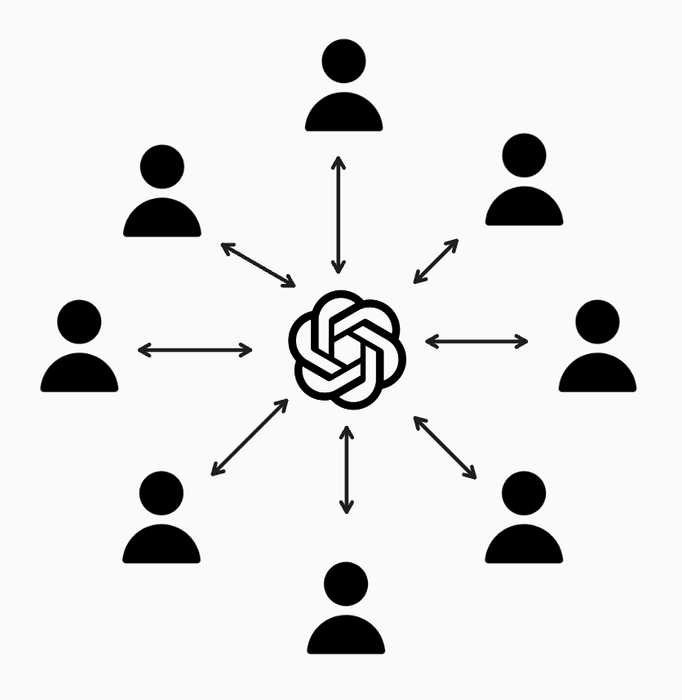

I see almost 0 explorations on one of the AI use cases I'm most excited about: using AI to help humans coordinate.

The coordination cost of human groups notoriously scales quadratically: the number of potential pairwise interactions is roughly the square of the number of people in a group....
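
To make the quadratic claim concrete: the exact number of distinct pairs among n people is n(n-1)/2, which grows like n²/2. Below is a minimal Python sketch of that arithmetic. The function name `pairwise_interactions` is illustrative and does not come from the post.

```python
from itertools import combinations


def pairwise_interactions(n: int) -> int:
    """Count the distinct unordered pairs among n people: n * (n - 1) / 2."""
    return n * (n - 1) // 2


# Brute-force cross-check: enumerate every pair in a group of 10.
assert len(list(combinations(range(10), 2))) == pairwise_interactions(10)

for n in (5, 10, 50, 100):
    print(n, pairwise_interactions(n))
# 5 10
# 10 45
# 50 1225
# 100 4950
```

Doubling the group from 50 to 100 people roughly quadruples the number of potential pairs (1225 to 4950), which is the scaling the post refers to.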

Few things pretty obvious to a few AI researchers but that most don't want to believe:

1. 90% of the most impactful AI research is already on arxiv, x, or company blog posts

2. q* aka strawberry = STaR (self-taught reasoners) with dynamic self-discover + something like DSPy for RAG...

Ted Werbel, x.com