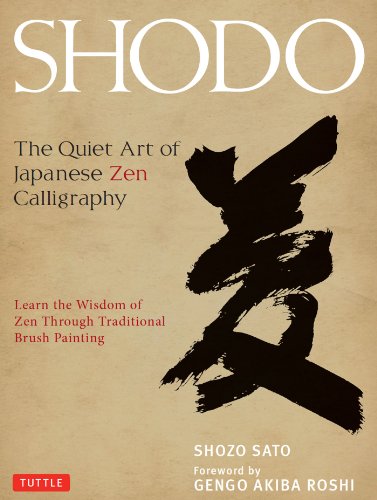

Shodo: The Quiet Art of Japanese Zen Calligraphy, Learn the Wisdom of Zen Through Traditional Brush Painting

Sato, Shozo • 3 highlights

amazon.com

respected Tanchiu Koji Terayama, director of Hitsu Zendo. The English translation of his book’s title is Zen and the Art of Calligraphy (transl. by John Stevens; Penguin Group, 1983).

Sato, Shozo • Shodo: The Quiet Art of Japanese Zen Calligraphy, Learn the Wisdom of Zen Through Traditional Brush Painting

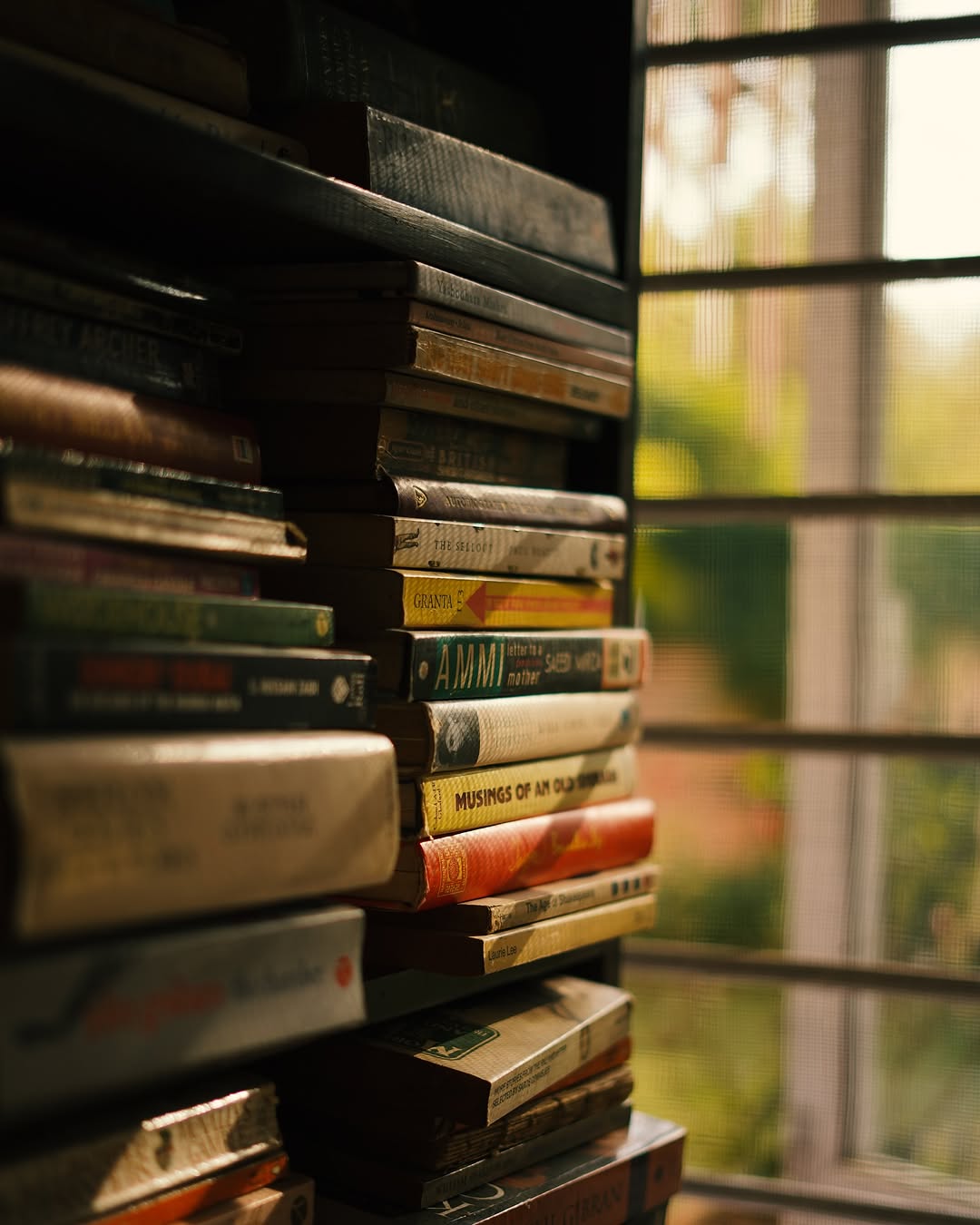

I love capturing the simple things, the quiet moments that often go unnoticed. There’s so much beauty in the everyday, in the details that surround us. It reminds me to slow down, look closer, and appreciate life’s little moments. 💛

#SimpleJoys #EverydayBeauty #home #delhi #sodelhi #delhigram #photographers_of_india...

instagram.com