Understanding LLMs from Scratch Using Middle School Math

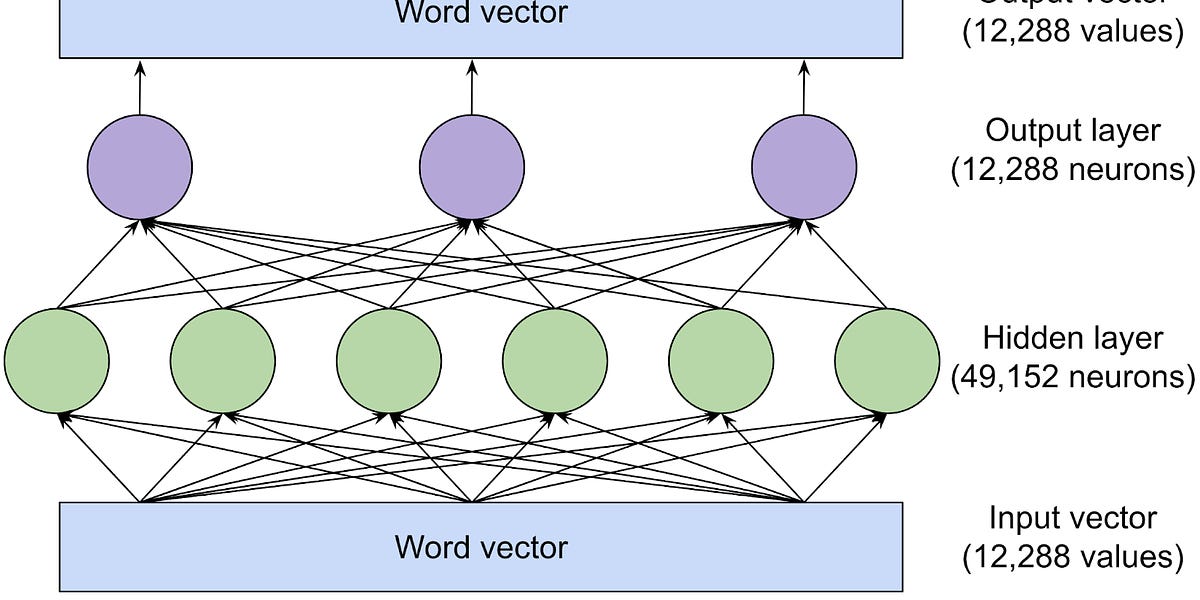

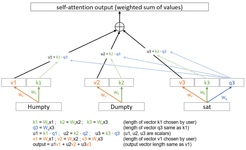

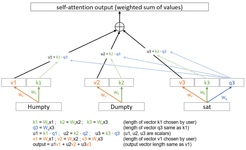

Neural networks learn to predict text by converting words to numbers and finding patterns through attention mechanisms.

So the network turns words into numbers, then uses attention to decide what's important for predicting next...
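To make the two steps concrete, here is a minimal sketch in plain Python. It is not the article's code: the `EMBEDDINGS` table and its values are made up for illustration, and the helper names (`softmax`, `dot`, `attention_weights`) are hypothetical. Real models learn their word vectors and apply learned query, key, and value projections, which this toy skips.

```python
import math

# Hypothetical toy vocabulary: each word maps to a short list of numbers.
# These values are invented for illustration; a real model learns them.
EMBEDDINGS = {
    "the": [0.1, 0.0],
    "cat": [0.9, 0.2],
    "sat": [0.3, 0.8],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Multiply matching entries of two vectors and add the results."""
    return sum(x * y for x, y in zip(a, b))


def attention_weights(words):
    """Return how much the last word 'attends' to each word in the sequence."""
    vectors = [EMBEDDINGS[w] for w in words]  # step 1: words -> numbers
    query = vectors[-1]                       # the word doing the looking
    scores = [dot(query, v) for v in vectors] # step 2: similarity scores
    return softmax(scores)                    # step 3: scores -> weights


sentence = ["the", "cat", "sat"]
weights = attention_weights(sentence)
for word, weight in zip(sentence, weights):
    print(f"{word}: {weight:.2f}")
```

A larger weight means that word contributes more to whatever the network predicts next. In this simplified version the weights depend only on how similar the made-up vectors are.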