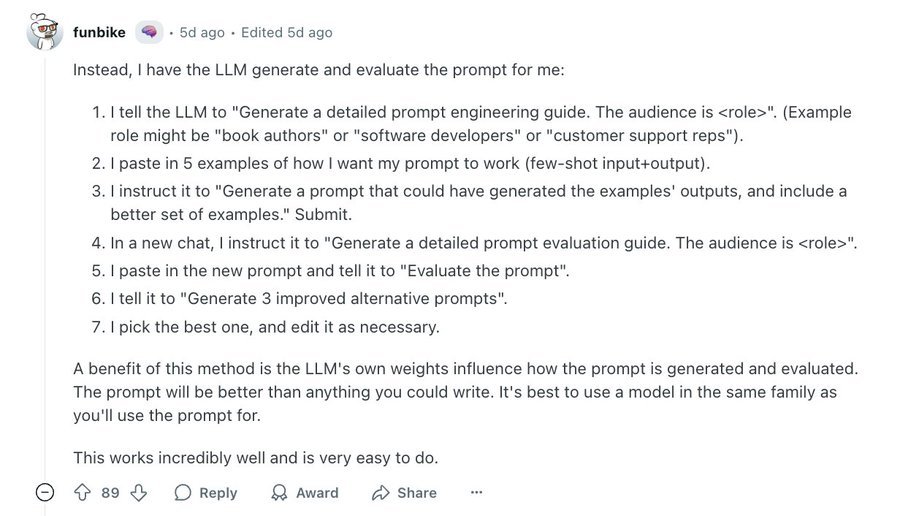

Self-Supervised Prompt Optimization

This guy literally built a prompt that can make any prompt 10x better. https://t.co/E84LV2NFIX
