r/LocalLLaMA - Reddit

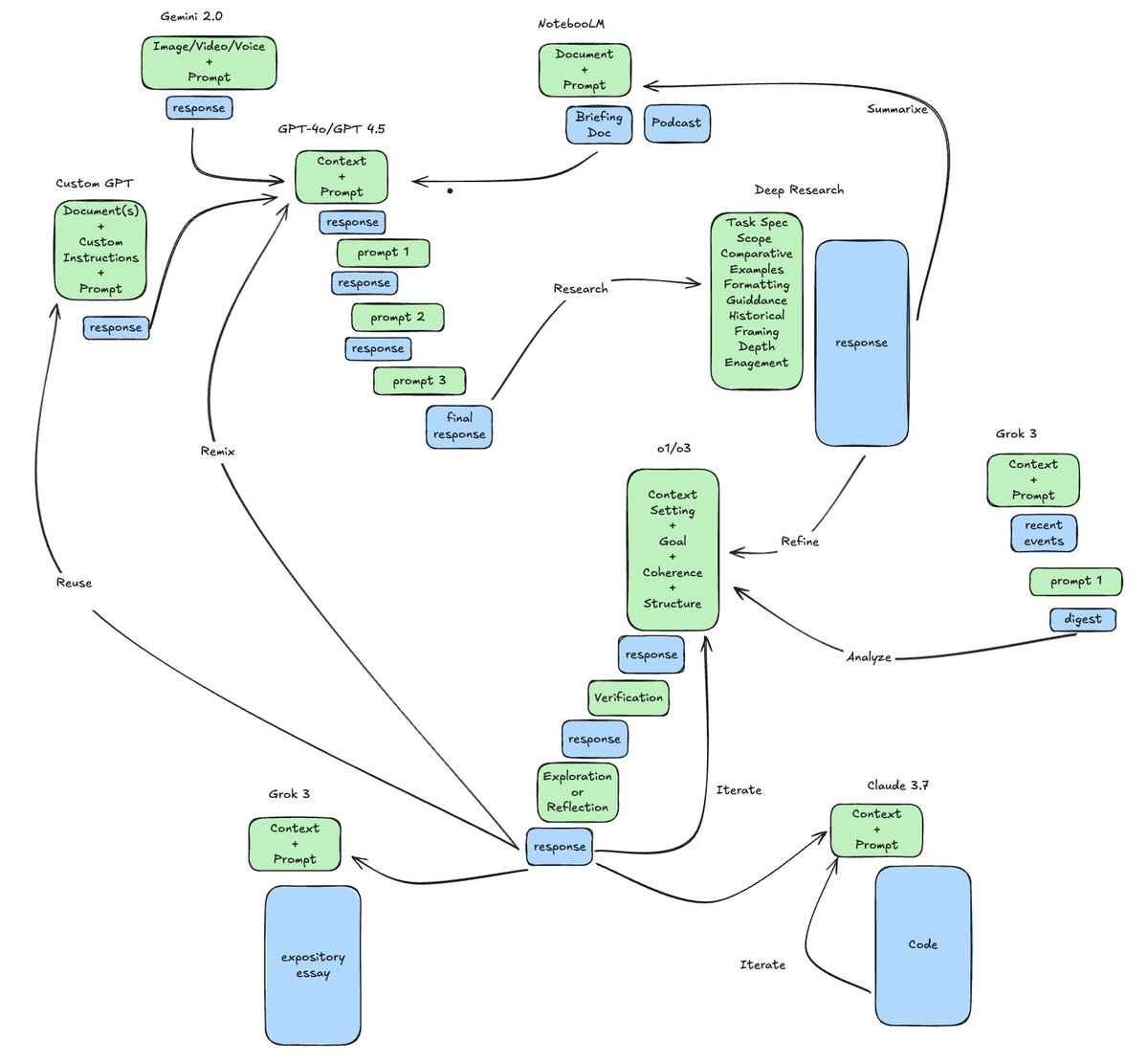

I mined Andrej Karpathy's "How I use LLMs" video for some additional things he does and I've updated the diagram.

Using multiple LLMs as an "LLM council"

Consults multiple LLMs by asking them the same question and synthesizes the responses. For example, when seeking travel recommendations, they ask Gemini,...
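A rough sketch of how the "council" idea could look in code, in case it helps. This is not how Karpathy does it (he uses the chat apps by hand as far as the video shows); it is just one way to wire it up. Everything here is hypothetical: `ask_council`, `build_synthesis_prompt`, `council`, and the member names are made up for illustration, and each "model" is a plain Python function standing in for whatever client you actually use.

```python
from typing import Callable, Dict

# Assumption: each model is represented by a function mapping a prompt to a reply.
# In practice you would wrap your own API client or local runner in such a function.
ModelFn = Callable[[str], str]


def ask_council(question: str, members: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same question to every council member and collect the answers."""
    return {name: ask(question) for name, ask in members.items()}


def build_synthesis_prompt(question: str, answers: Dict[str, str]) -> str:
    """Format all answers into one prompt for a final 'synthesizer' model."""
    lines = [f"Question: {question}", "", "Answers from the council:"]
    for name, answer in answers.items():
        lines.append(f"- {name}: {answer}")
    lines.append("")
    lines.append("Combine these into one recommendation and note any disagreements.")
    return "\n".join(lines)


def council(question: str, members: Dict[str, ModelFn], synthesizer: ModelFn) -> str:
    """Ask every member, then hand the collected answers to the synthesizer."""
    answers = ask_council(question, members)
    return synthesizer(build_synthesis_prompt(question, answers))


if __name__ == "__main__":
    # Hypothetical stand-ins that return canned text instead of calling a real model.
    members = {
        "model_a": lambda q: "Visit Lisbon in May.",
        "model_b": lambda q: "Visit Kyoto in April.",
    }
    # Placeholder synthesizer that just echoes the prompt it was given.
    print(council("Where should I travel this spring?", members, lambda p: p))
```

Swapping the lambdas for real model calls is the only part that depends on your setup; the ask-everyone-then-synthesize flow stays the same.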

General-purpose models

- 1.1B: TinyDolphin 2.8 1.1B. Takes about 700 MB of RAM; tested on my Pi 4 with 2 GB of RAM. Hallucinates a lot, but works for basic conversation.

- 2.7B: Dolphin 2.6 Phi-2. Takes a bit over 2 GB of RAM; tested on my 3 GB 32-bit phone via llama.cpp on Termux.

- 7B: Nous Hermes Mistral 7B DPO. Takes about 4-5 GB of RAM depending on
