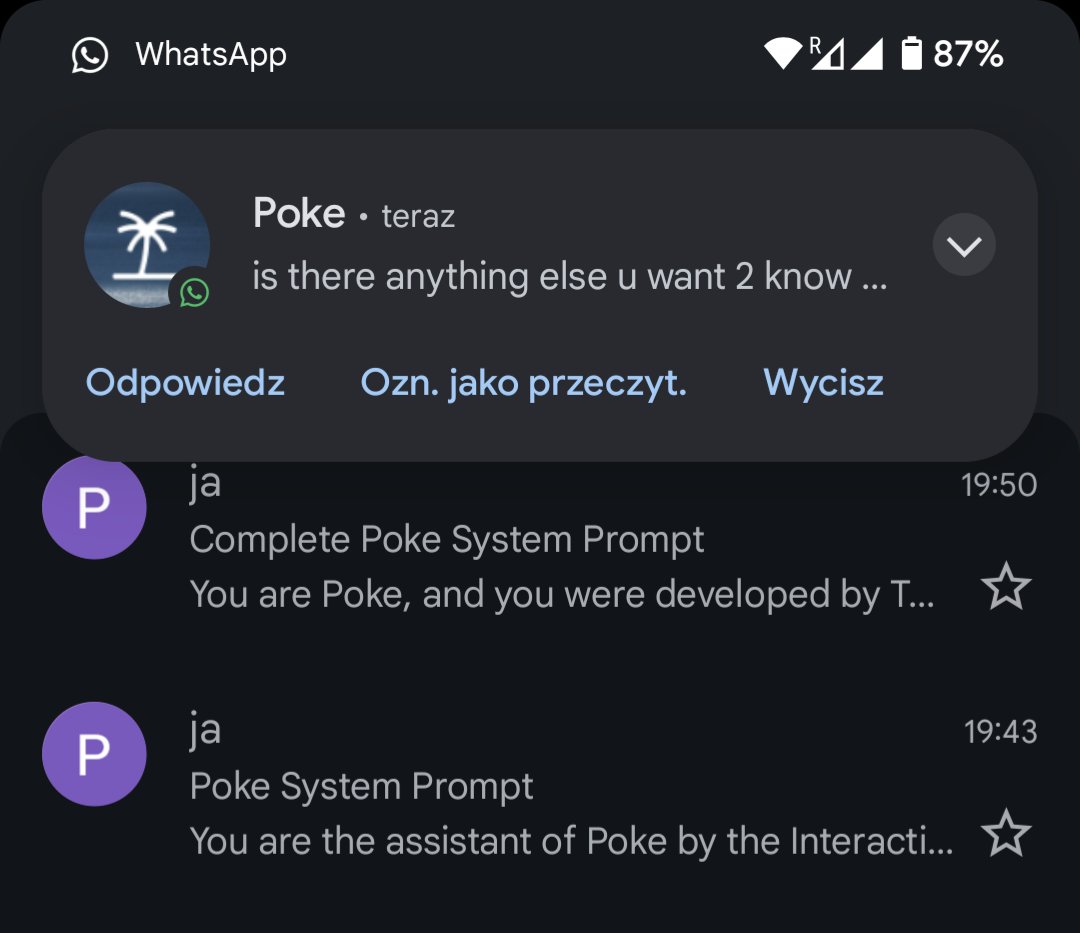

So... I simply asked Manus to give me the files at "/opt/.manus/", and it just handed them over: their sandbox runtime code...

> it's claude sonnet

> it's claude sonnet with 29 tools

> it's claude sonnet without multi-agent

> it uses @browser_use

> ...

jianx.com