Nice paper for a long read across 114 pages.

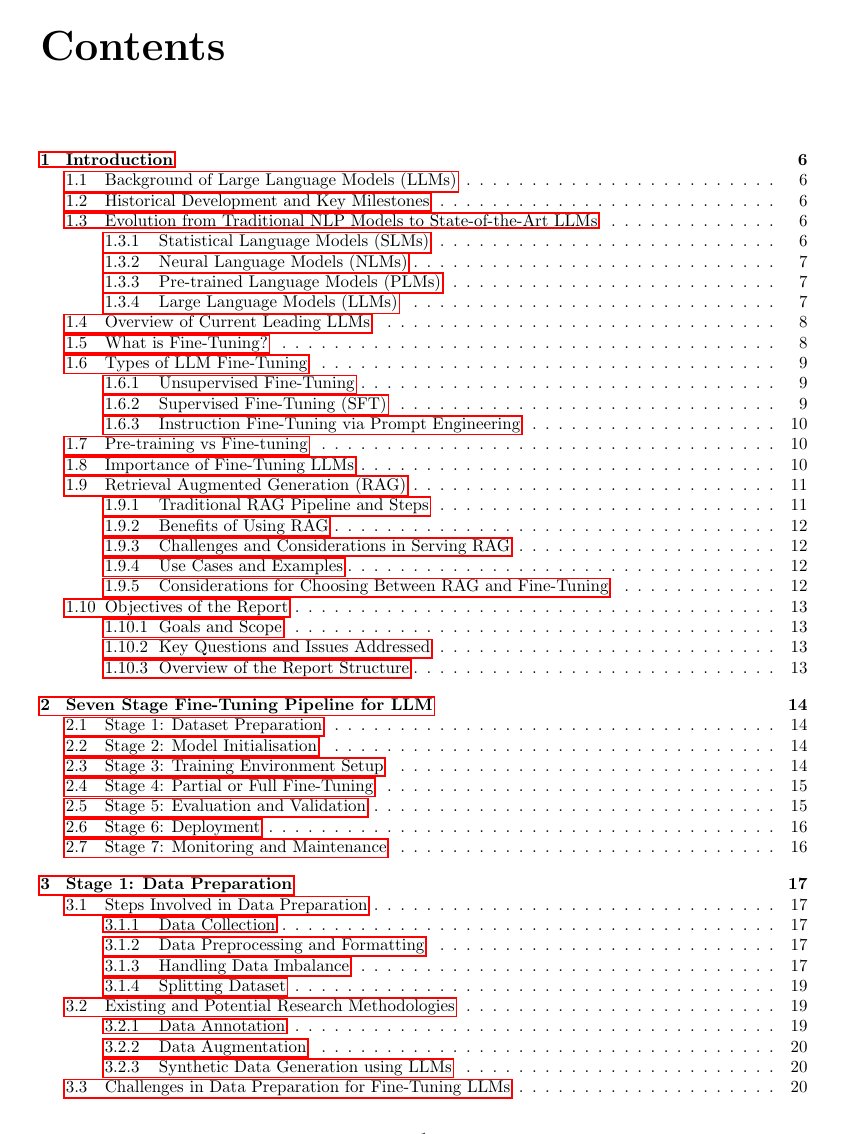

"Ultimate Guide to Fine-Tuning LLMs"

Some of the things they cover:

📊 Fine-tuning Pipeline

Outlines a seven-stage process for fine-tuning LLMs, from data preparation to deployment and...

How do models represent style, and how can we more precisely extract and steer it?

A commonly requested feature in almost any LLM-based writing application is “I want the AI to respond in my style of writing,” or “I want the AI to adhere to this style guide.” Aside from costly and complicated multi-stage fine-tuning processes like Anthropic’s RL with...

methods of fine-tuning an open-source LLM exist ↓

- Continued pre-training: use domain-specific data to apply the same pre-training objective (next-token prediction) to the pre-trained (base) model

- Instruction fine-tuning: the pre-trained (base) model is fine-tuned on a Q&A dataset to learn to answer questions

- Single-task fine-tuning: the...
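
To make the difference between the first two methods concrete, here is a minimal sketch of how one training example might be built in each case. It is an illustration under assumptions, not any library's API: `toy_tokenize`, `continued_pretraining_example`, and `instruction_example` are hypothetical helpers, whitespace splitting stands in for a real tokenizer, and masking the question tokens is one common choice rather than a requirement. The value `-100` follows the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
# Sentinel for positions that should not contribute to the loss.
# -100 matches the default ignore_index of PyTorch's CrossEntropyLoss.
IGNORE_INDEX = -100


def toy_tokenize(text):
    """Hypothetical stand-in for a real tokenizer: split on whitespace."""
    return text.split()


def continued_pretraining_example(text):
    """Next-token prediction on raw domain text: every token is a target."""
    tokens = toy_tokenize(text)
    return {"inputs": tokens[:-1], "labels": tokens[1:]}


def instruction_example(question, answer):
    """Same next-token objective on a Q&A pair, but (by assumption here)
    only the answer tokens are scored; question tokens are masked out."""
    q_tokens = toy_tokenize(question)
    a_tokens = toy_tokenize(answer)
    tokens = q_tokens + a_tokens
    inputs = tokens[:-1]
    targets = tokens[1:]
    # targets[i] is tokens[i + 1]; it belongs to the question when i + 1 < len(q_tokens).
    labels = [
        IGNORE_INDEX if i + 1 < len(q_tokens) else target
        for i, target in enumerate(targets)
    ]
    return {"inputs": inputs, "labels": labels}


if __name__ == "__main__":
    print(continued_pretraining_example("the valve controls coolant flow"))
    print(instruction_example("Q: what controls coolant flow ?", "A: the valve"))
```

In both cases the model is trained to predict the next token. What changes is the data (raw domain text versus question-answer pairs) and, in this sketch, which positions are counted in the loss.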
