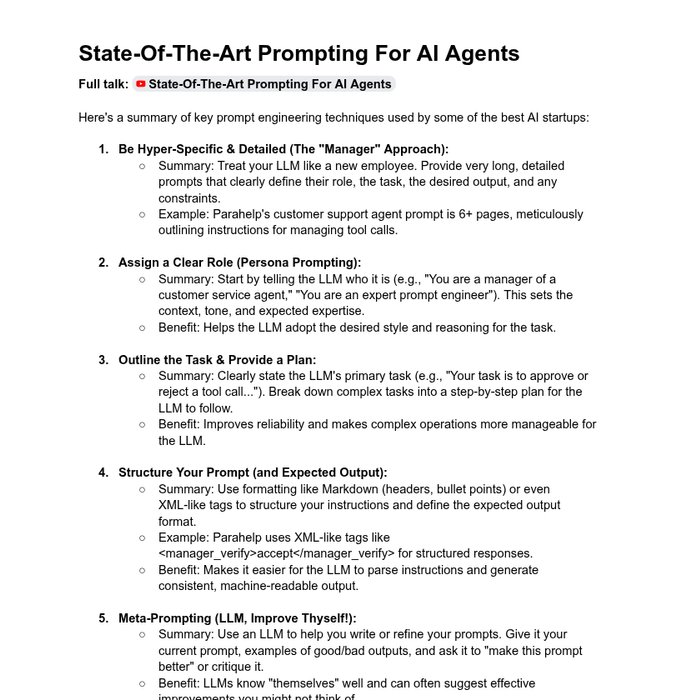

🔥 YC outlines how top AI startups prompt LLMs: prompts running past six pages, XML tags, meta-prompting, and evals, which they treat as their core IP.
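To make the "XML tags" point concrete, here is a minimal sketch of assembling a structured prompt. The helper `build_prompt`, the tag names, and the bookstore example are all hypothetical illustrations, not a format YC or any startup has published. The idea is simply that each part of a long prompt sits inside its own clearly delimited section.

```python
from xml.sax.saxutils import escape


def build_prompt(role: str, sections: dict[str, str]) -> str:
    """Assemble a prompt whose parts are wrapped in XML-style tags.

    Hypothetical helper: tag names are illustrative, not a fixed schema.
    Tag names are assumed to be valid XML names supplied by the developer.
    """
    parts = [f"<role>{escape(role)}</role>"]
    for tag, body in sections.items():
        # Escape &, <, > so untrusted text cannot open or close tags by accident.
        parts.append(f"<{tag}>\n{escape(body.strip())}\n</{tag}>")
    return "\n\n".join(parts)


# Illustrative example data (hypothetical).
prompt = build_prompt(
    role="You are a customer-support agent for an online bookstore.",
    sections={
        "instructions": "Answer in two sentences or fewer. Cite the order ID.",
        "context": "Order #1042 shipped on 3 May via standard post.",
        "user_question": "Where is my order? <urgent>",
    },
)
print(prompt)
```

Escaping user-supplied text is a design choice here: it keeps the section boundaries unambiguous, so the model sees `&lt;urgent&gt;` as text rather than as a new tag.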

They found meta-prompting and role assignment drive consistent, agent-like behavior.
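Meta-prompting and role assignment can be combined in a single step. A model is told it is an expert prompt engineer and is asked to rewrite a prompt using cases that failed an eval. The sketch below only builds that meta-prompt string. The helper `build_meta_prompt`, its wording, and the ticket-classification example are hypothetical. Sending the result to an LLM and swapping in the improved prompt are left out, since no specific API is assumed.

```python
def build_meta_prompt(current_prompt: str, failures: list[tuple[str, str, str]]) -> str:
    """Ask a model, cast as a prompt engineer, to rewrite current_prompt.

    Each failure is (input, model_output, expected_output) from an eval run.
    Hypothetical helper: wording and tag names are illustrative only.
    """
    lines = [
        # Role assignment: tell the model who it is for this task.
        "<role>You are an expert prompt engineer.</role>",
        "<task>Rewrite the prompt below so the model handles the failing cases "
        "correctly without breaking other cases. Return only the new prompt.</task>",
        f"<current_prompt>\n{current_prompt}\n</current_prompt>",
        "<failing_cases>",
    ]
    # Number each failing case so the model can refer to it.
    for i, (inp, got, want) in enumerate(failures, start=1):
        lines.append(
            f'<case id="{i}"><input>{inp}</input>'
            f"<got>{got}</got><expected>{want}</expected></case>"
        )
    lines.append("</failing_cases>")
    return "\n".join(lines)


# Illustrative example data (hypothetical).
meta = build_meta_prompt(
    current_prompt="Classify the ticket as BILLING, SHIPPING, or OTHER.",
    failures=[("I was charged twice", "OTHER", "BILLING")],
)
print(meta)
```

In a loop, the model's reply would become the next `current_prompt`, and the eval would be re-run to check that the rewrite actually fixed the failures.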

⚙️ Key Learning

→ Top AI startups use...