Improving RAG: Strategies

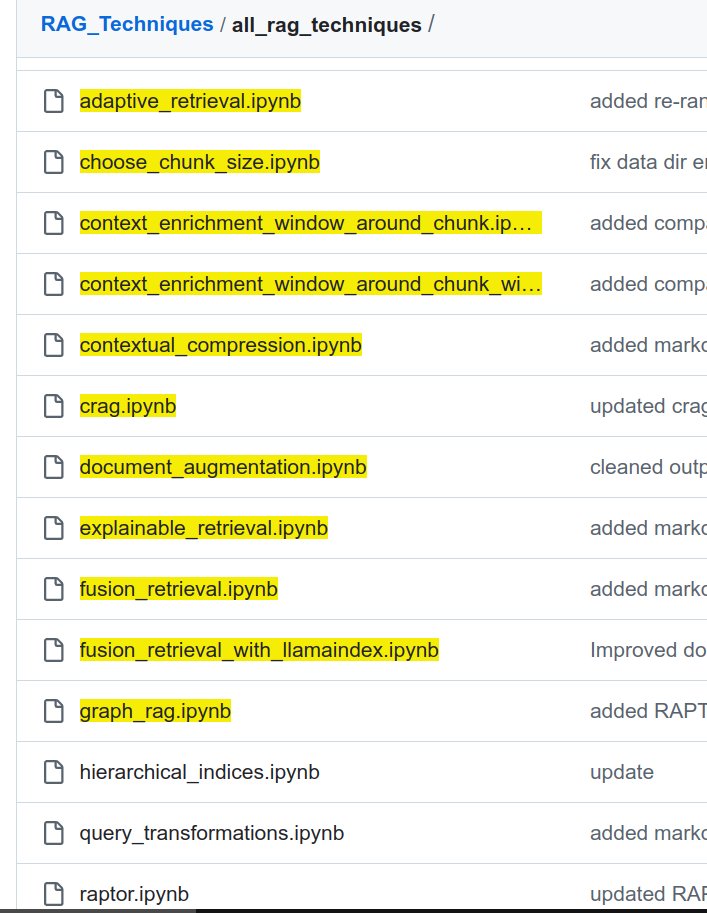

This repo is a very useful resource: a collection of advanced Retrieval-Augmented Generation (RAG) techniques.

It covers many topics, including:

- Metadata Filtering: Apply filters based on attributes like date, source, author, or document type.

- Similarity...
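
Metadata filtering can be sketched in a few lines. This is a minimal illustration that assumes each chunk stores an embedding and a metadata dict. The `Chunk` class, the `matches` and `search` helpers, the metadata keys, and the 2-D "embeddings" are all hypothetical. Filters are applied *before* similarity ranking (pre-filtering), so a chunk that fails a filter is never ranked at all. Many vector databases expose equivalent metadata filters directly in their query APIs.

```python
from dataclasses import dataclass, field
from datetime import date
from math import sqrt
from typing import Any


@dataclass
class Chunk:
    text: str
    embedding: list[float]
    # Hypothetical metadata keys: date, source, author, doc_type
    metadata: dict[str, Any] = field(default_factory=dict)


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches(meta, filters):
    """True if every filter holds: callables act as predicates, other values must match exactly."""
    for key, cond in filters.items():
        value = meta.get(key)
        if callable(cond):
            if value is None or not cond(value):
                return False
        elif value != cond:
            return False
    return True


def search(chunks, query_embedding, filters, k=3):
    """Pre-filter chunks on metadata, then rank only the survivors by cosine similarity."""
    candidates = [c for c in chunks if matches(c.metadata, filters)]
    candidates.sort(key=lambda c: cosine(query_embedding, c.embedding), reverse=True)
    return candidates[:k]


# Toy corpus with 2-D "embeddings", for illustration only.
chunks = [
    Chunk("Q3 revenue report", [0.9, 0.1], {"date": date(2024, 10, 1), "doc_type": "report", "author": "finance"}),
    Chunk("Q3 revenue memo", [0.8, 0.2], {"date": date(2023, 10, 1), "doc_type": "memo", "author": "finance"}),
    Chunk("Hiring plan", [0.1, 0.9], {"date": date(2024, 5, 1), "doc_type": "report", "author": "hr"}),
]
filters = {"doc_type": "report", "date": lambda d: d >= date(2024, 1, 1)}
results = search(chunks, [1.0, 0.0], filters, k=2)
print([c.text for c in results])  # ['Q3 revenue report', 'Hiring plan']
```

The memo is semantically close to the query but is excluded because it fails both the `doc_type` and `date` filters; the filters narrow the candidate set, and similarity only orders what remains.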

1. Synthetic Data for Baseline Metrics

Synthetic data can be used to establish baseline precision and recall metrics for your reverse search. The simplest kind of synthetic data is built by taking existing text chunks, generating a synthetic question for each one, and verifying that querying with each question retrieves the chunk it was generated from.
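
The evaluation loop above can be sketched as follows. Because each synthetic question has exactly one relevant chunk (its source), recall@k is the fraction of questions whose source chunk appears in the top k results, and precision@k is hits divided by (questions × k). Assumptions: the questions are hand-written stand-ins for what an LLM would generate, and `retrieve` is a toy word-overlap (Jaccard) retriever standing in for real vector search. All names here are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: dict[str, str], k: int) -> list[str]:
    """Stand-in retriever: rank chunk ids by Jaccard word overlap with the query."""
    q = tokens(query)

    def score(chunk_id: str) -> float:
        c = tokens(chunks[chunk_id])
        union = q | c
        return len(q & c) / len(union) if union else 0.0

    return sorted(chunks, key=score, reverse=True)[:k]


def evaluate(chunks, synthetic_questions, k=1):
    """Recall@k and precision@k when each question's only relevant chunk is its source."""
    hits = sum(
        1 for source_id, question in synthetic_questions
        if source_id in retrieve(question, chunks, k)
    )
    n = len(synthetic_questions)
    return hits / n, hits / (n * k)


chunks = {
    "c1": "The refund window is 30 days from the date of purchase.",
    "c2": "Support is available by email Monday through Friday.",
    "c3": "Premium plans include priority access to new features.",
}
# In practice an LLM would write one question per chunk; these are hand-written stand-ins.
synthetic_questions = [
    ("c1", "How many days is the refund window after purchase?"),
    ("c2", "When is email support available?"),
    ("c3", "What do premium plans include?"),
    ("c2", "Can I contact the help desk on weekdays?"),  # paraphrase with little word overlap
]

recall, precision = evaluate(chunks, synthetic_questions, k=1)
print(f"recall@1={recall:.2f} precision@1={precision:.2f}")  # recall@1=0.75 precision@1=0.75
```

The paraphrased fourth question retrieves the wrong chunk, because a word-overlap retriever cannot match "help desk on weekdays" to "email Monday through Friday". A baseline like this makes such gaps measurable before changing the retriever.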

Benefi...