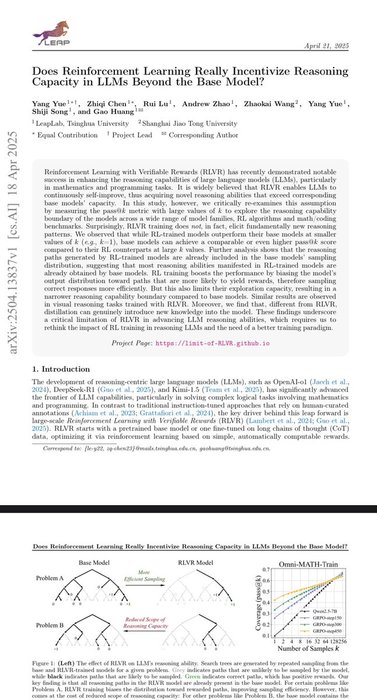

it’s over

turns out the rl victory lap was premature. new tsinghua paper quietly shows the fancy reward loops just squeeze the same tired reasoning paths the base model already knew. pass@1 goes up, sure, but the model’s world actually shrinks. feels like teaching a kid to ace flash cards and calling it...