Sublime

An inspiration engine for ideas

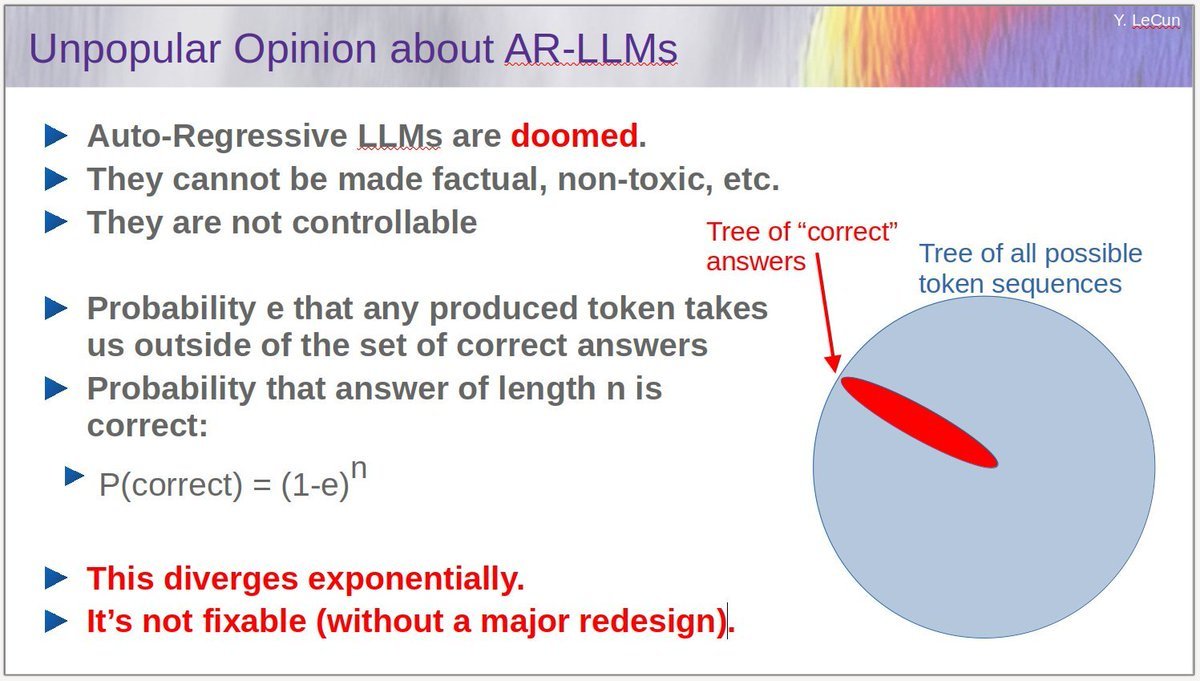

what yann lecun said: "the more tokens an llm generates, the more likely it is to go off the rails and get everything wrong"

what actually happened: "we get extremely high accuracy on arc-agi by generating billions of tokens, the more tokens we throw at it the better it gets" https://t.co/2bUkl4udmK

After seeing a bunch of people get mad at this editorial proposing a "Progress Studies" field, I've concluded that the critics are wrong; an interdisciplinary program aimed at figuring out how to boost the rate of scientific discovery would have value.

https://t.co/Jkt5F937Es

Noah Smith 🐇 • x.com

marginalrevolution.com

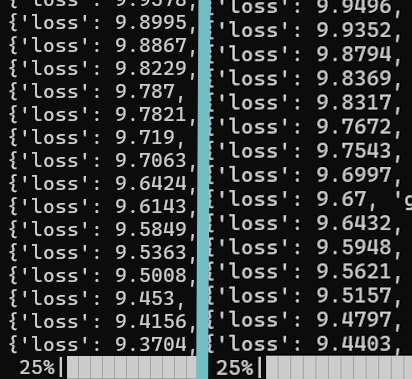

today i designed an alternative to SwiGLU for MLP layers, and tried doing some test training on a 500m model

was able to get better results with a couple million test tokens, but probably needs some scaling to *really* test it

maybe @Yuchenj_UW would be interested? 👀 https://t.co/MpK4ppRDTW

A question: "How much money do you *actually* need for an ARPA to be successful?" from @Policy_Exchange ARPA essay.

The implicit assumption is 'a lot!' - but why?

If you could lower the number you could try several models at...

Ben Reinhardt • x.com

Basically, there are always a lot of problems in the world, and the media is always happy to deliver them right to your doorstep in order to drive engagement. And so there’s a natural tendency to read the news and think that the world is going to Hell in a handbasket. But these problems aren’t all related, and in fact sometimes some of them help...

Noah Smith • At Least Five Interesting Things: Will of the Masses Edition

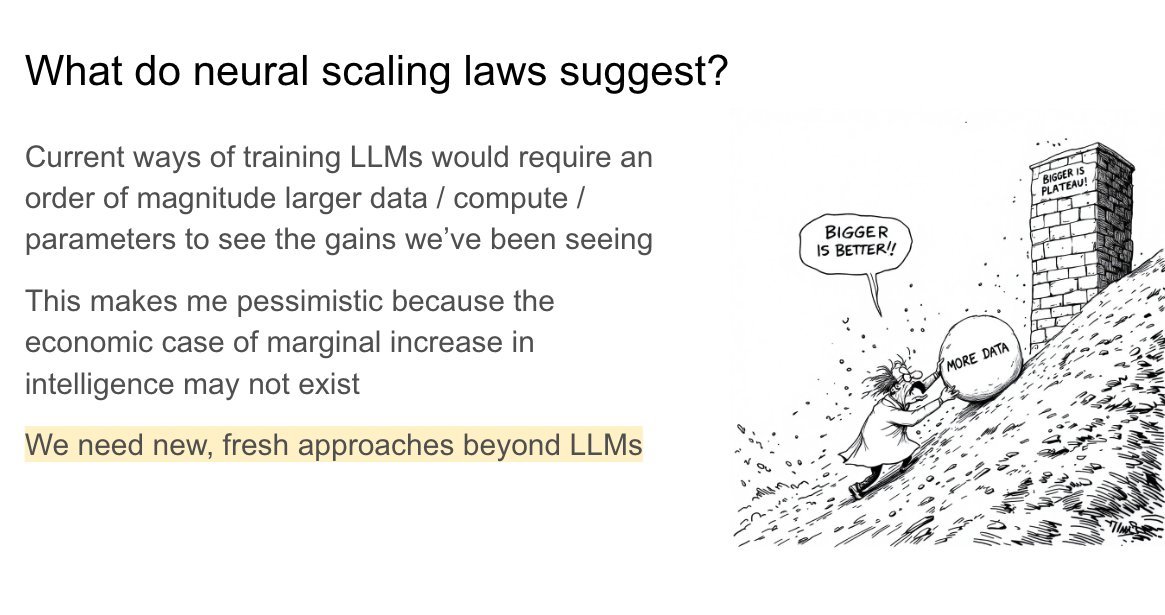

For the last month, I've been working on Project 🫐 Blueberry that explores reasoning in LLMs.

Today, I'm wrapping the project.

My learnings from it in slides below 👇 https://t.co/XpjDrCBpsB

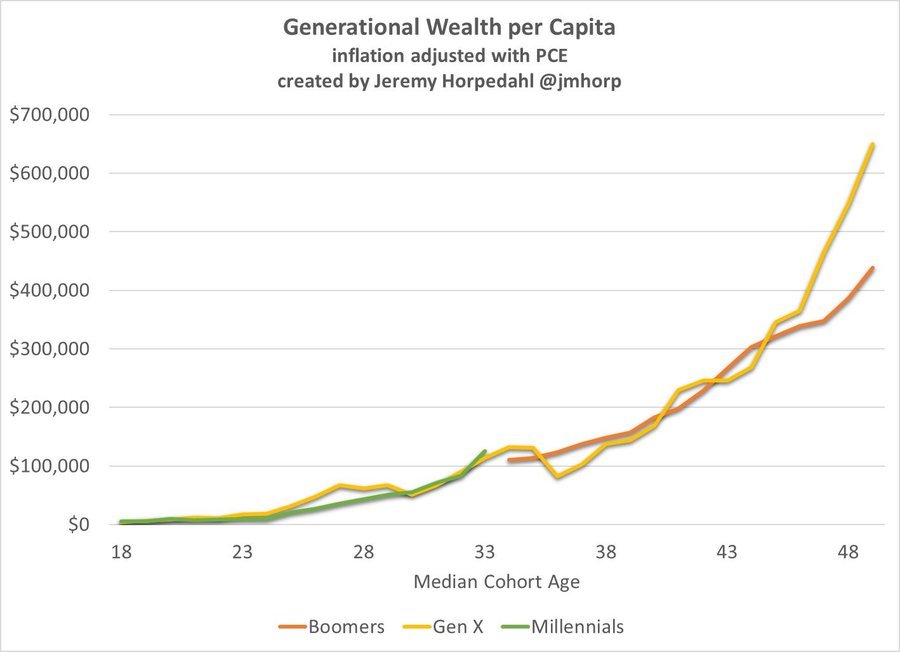

Millennials are on exactly the same wealth trajectory that Gen X were on at the same age (adjusted for inflation). And Gen X are wealthier than the Boomers were. https://t.co/mPKZWldl2q