Sublime

An inspiration engine for ideas

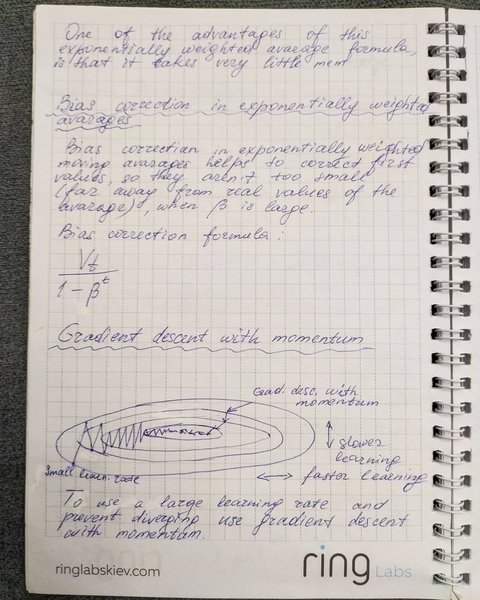

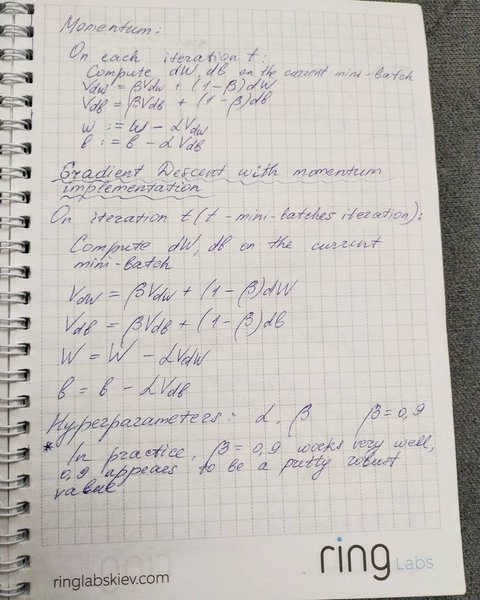

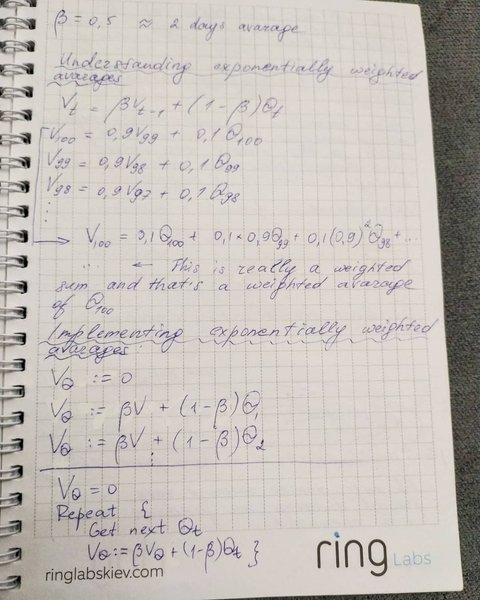

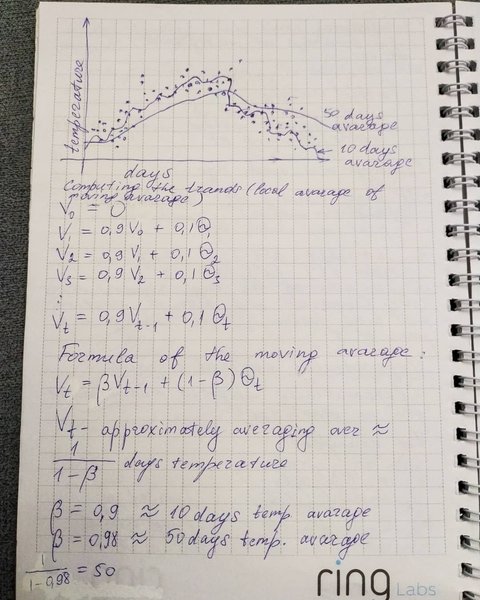

Today's topic is gradient descent with momentum. Gradient descent with momentum almost always converges faster than the standard gradient descent algorithm. The momentum technique was borrowed from physics. Imagine a ball on hilly terrain trying to reach the deepest valley. When the slope of the hill is very steep, the ball gains a lot of momentum and...
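A minimal Python sketch of the ball analogy, under stated assumptions. It uses the classical "heavy-ball" form of momentum, which is one of several common formulations; the post does not say which one it has in mind. The bowl-shaped test function and the names `grad` and `minimize` are hypothetical and chosen only for illustration. The velocity plays the role of the ball's momentum: each step it keeps a fraction `beta` of its previous value and is pushed downhill by the gradient.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def grad(p: Point) -> Point:
    """Gradient of the hypothetical bowl f(x, y) = 0.5 * (x**2 + 25 * y**2).

    The bowl is 25 times steeper along y than along x, so the learning
    rate must stay small and plain gradient descent crawls along x.
    """
    x, y = p
    return (x, 25.0 * y)


def minimize(start: Point, lr: float, beta: float,
             tol: float = 1e-6, max_iter: int = 10_000) -> Tuple[Point, int]:
    """Gradient descent with heavy-ball momentum; beta=0.0 is plain descent.

    Returns the final point and the number of updates performed before
    the gradient norm fell below tol (or max_iter if it never did).
    """
    x, y = start
    vx, vy = 0.0, 0.0  # velocity: the momentum the "ball" has built up
    for step in range(max_iter):
        gx, gy = grad((x, y))
        if math.hypot(gx, gy) < tol:
            return (x, y), step
        # Keep a fraction beta of the old velocity, then push it downhill.
        vx = beta * vx - lr * gx
        vy = beta * vy - lr * gy
        x, y = x + vx, y + vy
    return (x, y), max_iter


if __name__ == "__main__":
    start = (10.0, 1.0)
    _, plain_steps = minimize(start, lr=0.01, beta=0.0)
    _, momentum_steps = minimize(start, lr=0.01, beta=0.9)
    print("plain:", plain_steps, "momentum:", momentum_steps)
```

With the same learning rate, `beta=0.9` should need far fewer updates than `beta=0.0` on this bowl: along the shallow x direction the gradient keeps pointing the same way, so the velocity keeps building up speed, much like the ball rolling down a long slope.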

instagram.com

People say that change is the only constant, but calculus would beg to differ. Animation connecting the stationary points, where f'(x) = 0. #math #calculus #maxima #minima #stationarypoints
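As a small worked example of the f'(x) = 0 condition, here is a Python sketch restricted to cubic polynomials. That restriction is an assumption for illustration, since the animation does not specify a function, and the name `stationary_points_of_cubic` is hypothetical. It solves f'(x) = 0 with the quadratic formula and classifies each root with the second-derivative test.

```python
import math
from typing import List, Tuple


def stationary_points_of_cubic(a: float, b: float, c: float) -> List[Tuple[float, str]]:
    """Stationary points of f(x) = a*x**3 + b*x**2 + c*x + d, with a != 0.

    The constant d does not affect f', so it is not a parameter.
    f'(x) = 3a*x**2 + 2b*x + c is solved with the quadratic formula,
    and each root is classified by the sign of f''(x) = 6a*x + 2b.
    """
    qa, qb, qc = 3 * a, 2 * b, c  # coefficients of the quadratic f'(x)
    disc = qb * qb - 4 * qa * qc
    if disc < 0:
        return []  # f'(x) is never zero: no stationary points
    if disc == 0:
        # Double root: f' touches zero without changing sign.
        return [(-qb / (2 * qa), "stationary inflection")]
    root = math.sqrt(disc)
    points = []
    for x in sorted([(-qb - root) / (2 * qa), (-qb + root) / (2 * qa)]):
        curvature = 6 * a * x + 2 * b  # f''(x)
        points.append((x, "local maximum" if curvature < 0 else "local minimum"))
    return points


if __name__ == "__main__":
    # f(x) = x**3 - 3x has f'(x) = 3x**2 - 3 = 0 at x = -1 and x = 1.
    print(stationary_points_of_cubic(1, 0, -3))
    # → [(-1.0, 'local maximum'), (1.0, 'local minimum')]
```

The second-derivative test is what separates the maxima from the minima; when f' has a double root, as for f(x) = x**3 at x = 0, the stationary point is neither.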

matholicism • instagram.com

Gaussians

gestalt.ink

Principal Component Analysis 4 Dummies: Eigenvectors, Eigenvalues and Dimension Reduction

georgemdallas.wordpress.com

Super simple explanation of Markov Chains (without the bulls*it).

Exactly how I like learning about this stuff: https://t.co/GJfOOB2LOl

The best way to explain the answer may be to start with a slightly wrong version, and then fix it.

Paul Graham: Essays • What You (Want To)* Want

the exponential increase that occurs when you combine even a small number of possibilities.

Stanislas Dehaene • How We Learn: Why Brains Learn Better Than Any Machine . . . for Now

Triangles and circles were easy to measure, but slightly more irregular curves like the parabola were beyond the ken of the Greek mathematicians of the day.

Charles Seife • Zero

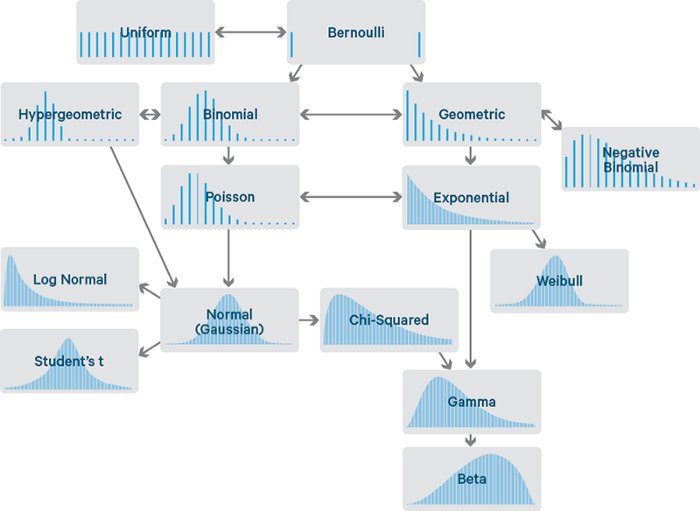

Understanding probability is essential in data science.

In 4 minutes, I'll demolish your confusion.

Let's go! https://t.co/X0BQRGrdZl