Sublime

An inspiration engine for ideas

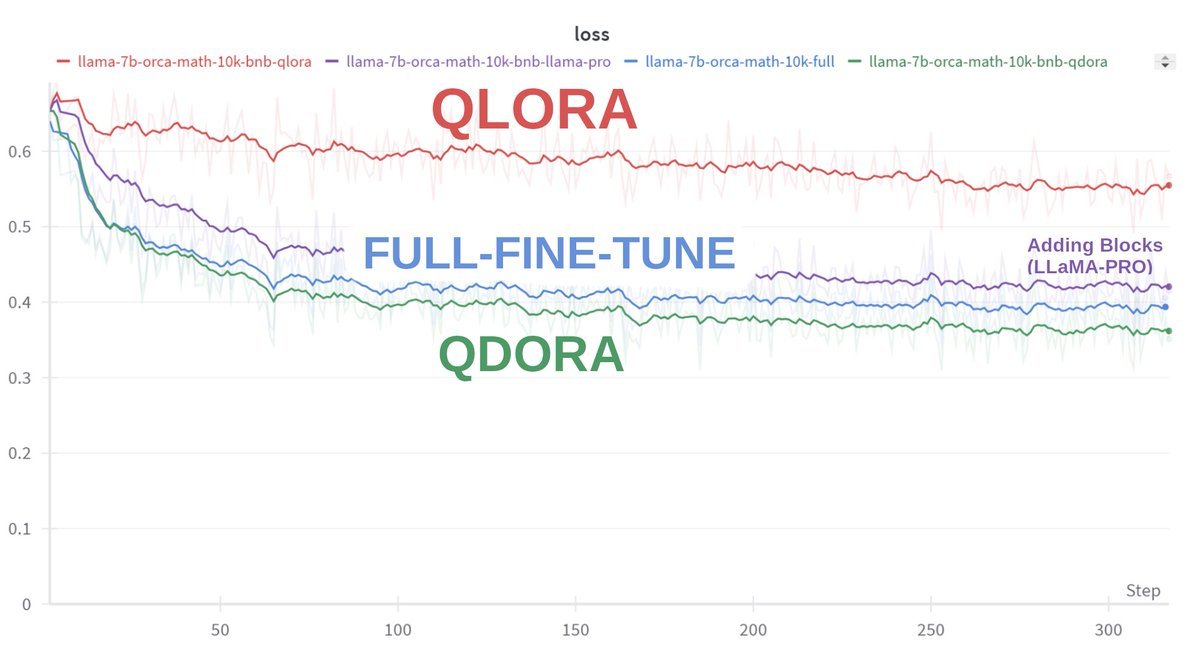

Wake up babe new SOTA quantization method just dropped!!!!

TEQ (Trainable Equivalent Transformation) preserves FP32 precision of the model output while using low-precision quantization. Training requires only 1K steps and < 1% of the original model’s trainable parameters.

Results are on par...
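A rough sketch of what an "equivalent transformation" can look like, assuming it takes the form of a per-channel scaling (the post does not spell this out): dividing each input channel by a scale and multiplying the matching weight row by the same scale leaves the full-precision output unchanged, and only the rescaled weights are then quantized. The scale values, helper names, and the round-to-nearest quantizer below are all hypothetical; TEQ would learn the scales by training, which this sketch does not attempt.

```python
import math

def matvec(x, W):
    """Compute y = x @ W for a vector x (length n) and a matrix W (n rows, m columns)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def apply_equivalent_transform(x, W, s):
    """Divide input channel i by s[i] and multiply row i of W by s[i].

    In full precision the product is unchanged: (x / s) @ (diag(s) W) == x @ W.
    """
    x_scaled = [xi / si for xi, si in zip(x, s)]
    W_scaled = [[si * w for w in row] for row, si in zip(W, s)]
    return x_scaled, W_scaled

def fake_quantize(W, bits=4):
    """Symmetric round-to-nearest quantization with one step size per output column."""
    qmax = 2 ** (bits - 1) - 1
    cols = len(W[0])
    steps = []
    for j in range(cols):
        max_abs = max(abs(row[j]) for row in W)
        steps.append(max_abs / qmax if max_abs > 0 else 1.0)
    return [[round(row[j] / steps[j]) * steps[j] for j in range(cols)] for row in W]

x = [0.5, -2.0, 8.0]
W = [[0.2, -0.1], [0.05, 0.3], [0.01, -0.02]]
s = [1.0, 1.0, 0.25]  # hypothetical scales; a trainable method would learn these

y_ref = matvec(x, W)                         # full-precision reference output
x_t, W_t = apply_equivalent_transform(x, W, s)
y_equiv = matvec(x_t, W_t)                   # same output, before any quantization
y_quant = matvec(x_t, fake_quantize(W_t))    # output with 4-bit weights
```

Here `y_ref` and `y_equiv` agree up to floating-point rounding, while `y_quant` differs by the quantization error, which is the quantity a trained scale vector would try to shrink.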

Harper Carroll • x.com

deep-tech

Juan Orbea and • 33 cards

Quantum

Ashmini Perera • 1 card

Linear scaling achieved with multiple DeepSeek v3.1 instances. 4x macs = 4x throughput.

2x M3 Ultra Mac Studios = 1x DeepSeek @ 14 tok/sec

4x M3 Ultra Mac Studios = 2x DeepSeek @ 28 tok/sec

DeepSeek V3.1 is a 671B parameter model - so at its native 8-bit...
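The arithmetic behind those numbers can be sketched as follows. The figures come from the post; the function names are made up for illustration, and the footprint counts weights only (no KV cache or activations), which is an assumption on top of the post.

```python
PARAMS = 671e9                  # parameter count stated in the post
BYTES_PER_PARAM = 1             # 8-bit weights, one byte per parameter
MACS_PER_INSTANCE = 2           # from the post: 2 Mac Studios host one instance
TOK_PER_SEC_PER_INSTANCE = 14   # from the post

def weight_footprint_gb(params=PARAMS, bytes_per_param=BYTES_PER_PARAM):
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9

def aggregate_throughput(num_macs):
    """Total tokens/sec if every full group of Macs runs an independent instance."""
    instances = num_macs // MACS_PER_INSTANCE
    return instances * TOK_PER_SEC_PER_INSTANCE

print(weight_footprint_gb())      # 671.0
print(aggregate_throughput(2))    # 14
print(aggregate_throughput(4))    # 28
```

Because the instances run independently, total throughput grows in proportion to the number of instances, while the speed of any single stream stays at 14 tok/sec.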

Matt Beton • x.com

eXmY: A Data Type and Technique for Arbitrary Bit Precision Quantization

https://t.co/NyV4BNPfBO

arXiv Daily • x.com

Using light as a neural network, as this viral video depicts, is actually closer than you think. In 5-10yrs, we could have matrix multiplications in constant time O(1) with 95% less energy. This is the next era of Moore's Law.

Let's talk about Silicon Photonics...

1/9 https://t.co/vn9K47xDvU

Deedy • x.com