Sublime

An inspiration engine for ideas

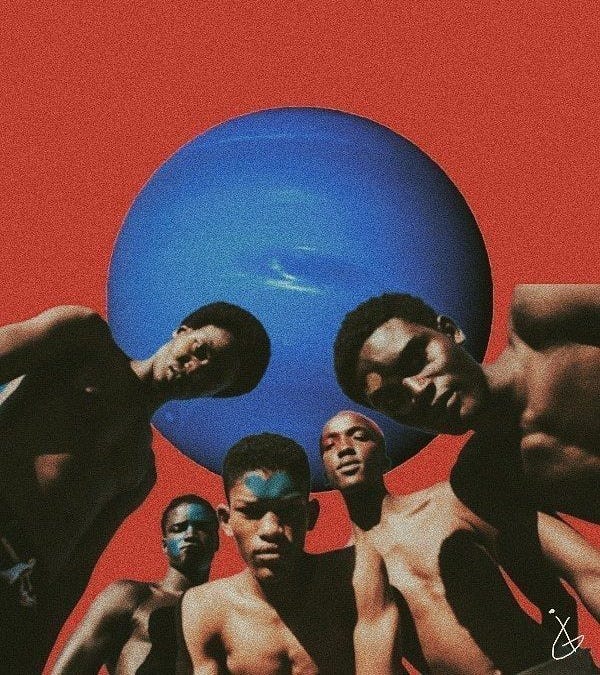

‘We are all insects,’ he said to Miss Ephreikian. ‘Groping towards something terrible or divine.

Philip K. Dick • The Man in the High Castle (Penguin Modern Classics)

Claude's Constitution

anthropic.com