Sublime

An inspiration engine for ideas

NVIDIA just brought DeepSeek-R1 671-bn param model to NVIDIA NIM microservice on build.nvidia .com

- The DeepSeek-R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system.

- Using NVIDIA Hopper architecture, DeepSeek-R1 can deliver high-speed... See more

here's my hyperdev-1 setup, an elite American software developer agent (proudly running in Texas!):

- rented a H100

- installed Claude Code

- used it to install Qwen3-Coder-480B

- and created an agent in Claude which uses this local 480B model

.. connected to Github, Vercel, few... See more

Varunx.comQwen3-Coder is now available on Cerebras, 17x faster than on GPU providers.

And it's completely free.

Try it out directly in your developer flow, or signup for our virtual hackathon tomorrow. It's a $5,000 prize :)

@CerebrasSystems @cline... See more

Sarah Chiengx.com

Nomad is a portable edge AI workstation built on Nvidia’s Blackwell architecture. It delivers 6700 teraflops of AI processing as a local inference engine for LLMs and other models.

instagram.com

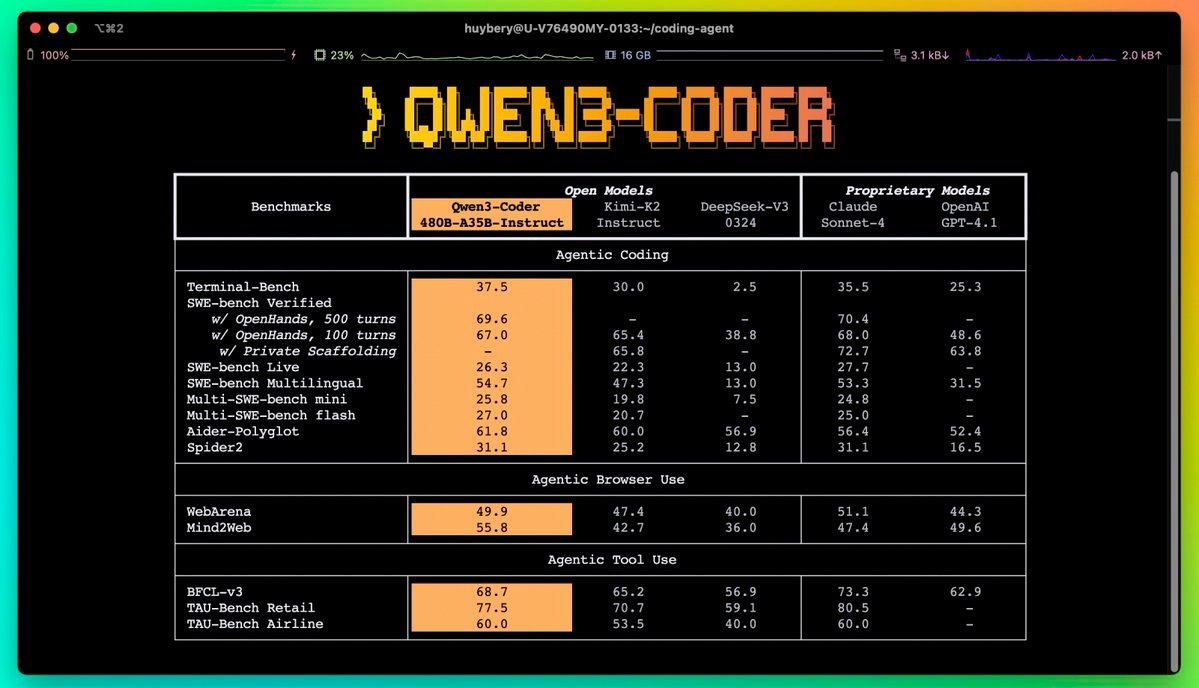

>>> Qwen3-Coder is here! ✅

We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves top-tier performance across multiple agentic... See more

While Apple has been positioning M4 chips for local AI inference with their unified memory architecture, NVIDIA just undercut them massively.

Stacking Project Digits personal computers is now the most affordable way to run frontier LLMs locally.

The 1 petaflop headline feels like marketing... See more