Sublime

An inspiration engine for ideas

In 2016, at an AI conference in NYC, I explained artificial consciousness, world models, predictive coding, and science as data compression in less than 10 minutes. I happened to be in town, walked in without being announced, and ended up on their panel. It was great fun.

Organizer: ...

Jürgen Schmidhuber, x.com

A #deeplearning history lesson with Jürgen Schmidhuber.

Who is this mystery researcher he is referring to? 🤔 (answer at the end)

Full talk (free registration): https://t.co/ngS1jX7Z9e https://t.co/KvK8havBTH

Kosta Derpanis, x.com

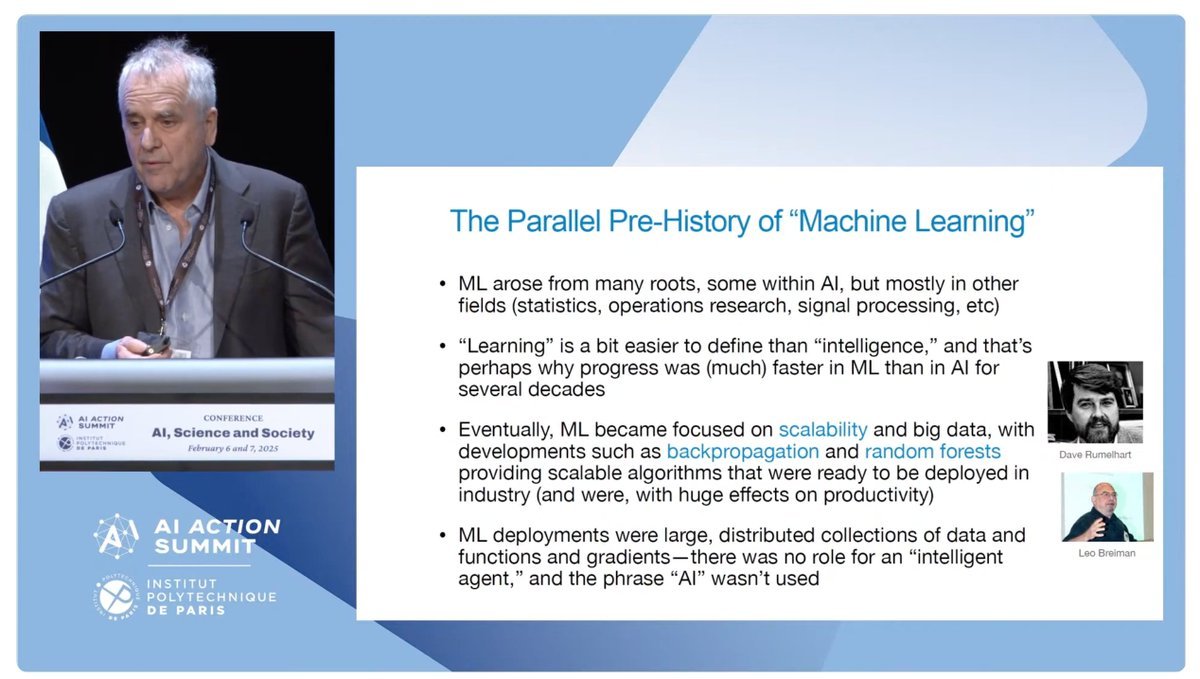

Michael Jordan recently gave a short, excellent, and provocative talk in Paris. Here are a few key ideas:

- It's all just machine learning (ML); the AI moniker is hype

- The late Dave Rumelhart should've received a Nobel Prize for his early ideas on making backprop...

Re: The (true) story of the "attention" operator ... that introduced the Transformer ... by @karpathy. Not quite! The nomenclature has changed, but in 1991, there was already what is now called an unnormalized linear Transformer with "linearized self-attention" [TR5-6]. See (Eq. 5) of [FWP0,1] and the ICML 2021 paper which emphasised the...

Jürgen Schmidhuber, x.com

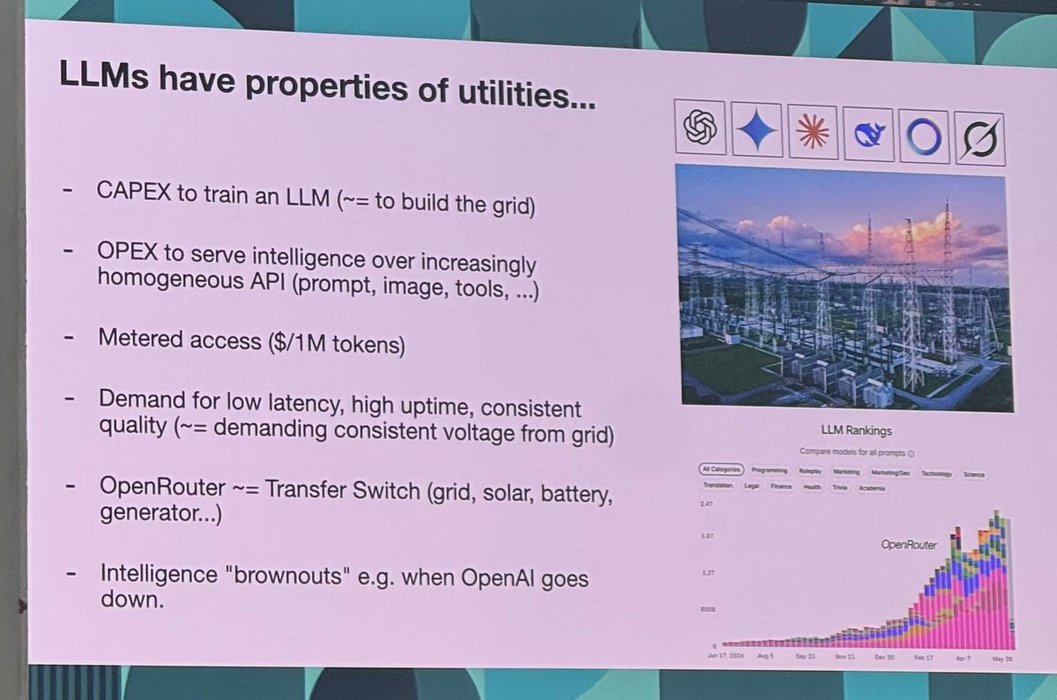

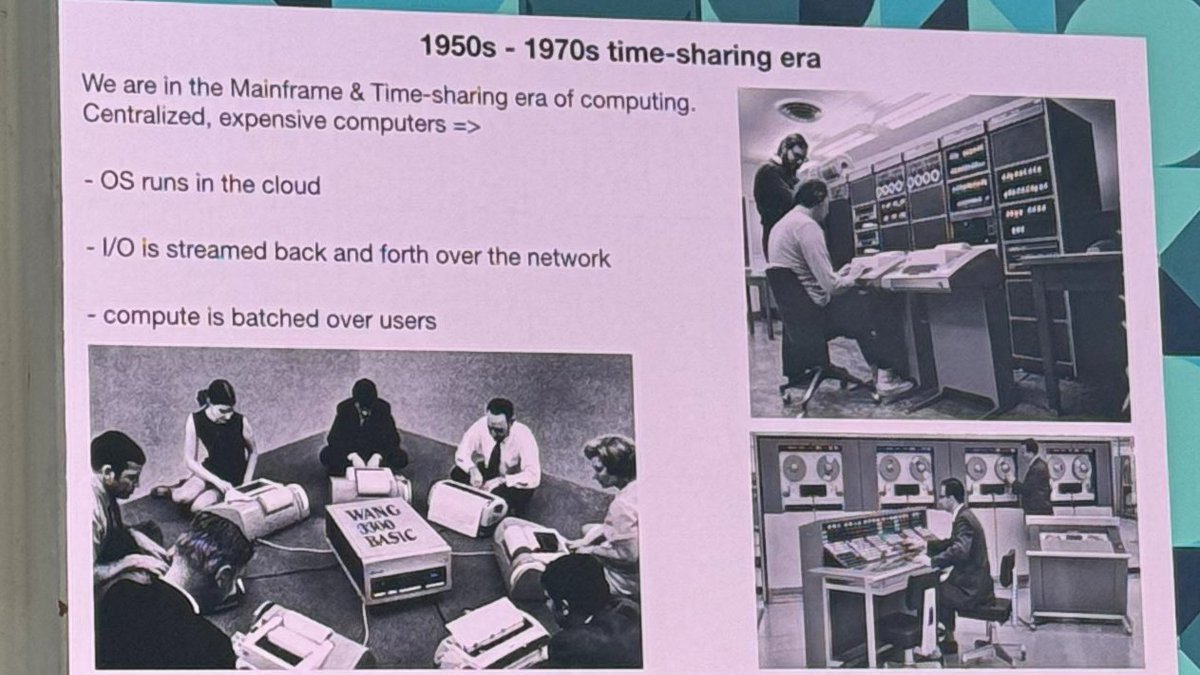

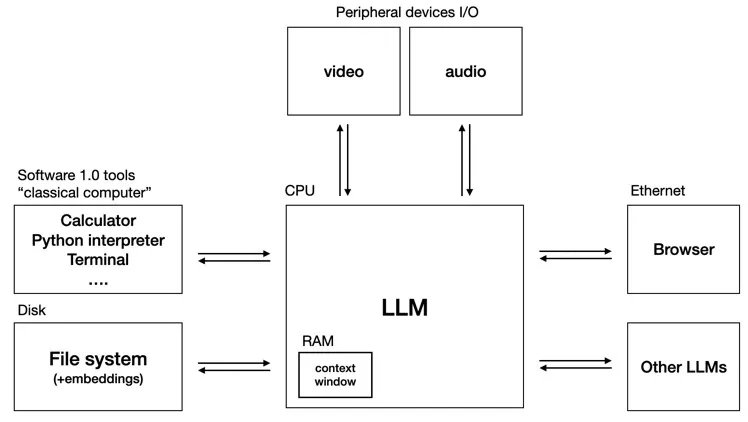

A quick note from @karpathy's talk; after this point I was so immersed in the talk that I stopped taking notes:

While it is interesting to think about LLMs in terms of "electricity" -> CAPEX to train an LLM (~= to build the grid) -> OPEX to serve intelligence over an increasingly homogeneous API (prompt, image,...

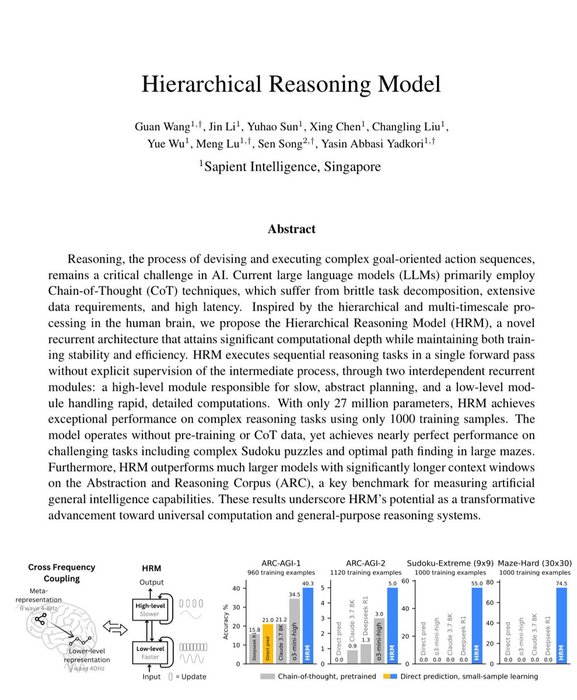

Researchers designed a 27M-parameter model inspired by the brain. It outperforms o3-mini, R1, and others.

This makes every single AI lab look silly!

Here are their 5 core techniques:

- Brain-inspired hierarchical processing, temporal separation, and recurrent connectivity

- ...

Your butler doesn't need a PhD... but he does need to live in your house.

Why small 'dumb' models win, while expensive big models cost a lot and get paid very little (just like real PhDs) https://t.co/TXBicAixrX