Sublime

An inspiration engine for ideas

NVIDIA presents Upcycling Large Language Models into Mixture of Experts

Finds that upcycling outperforms continued dense model training, based on large-scale experiments using Nemotron-4 15B trained on 1T tokens

https://t.co/lKEtbMeQX8 https://t.co/L4LiEKrWDm

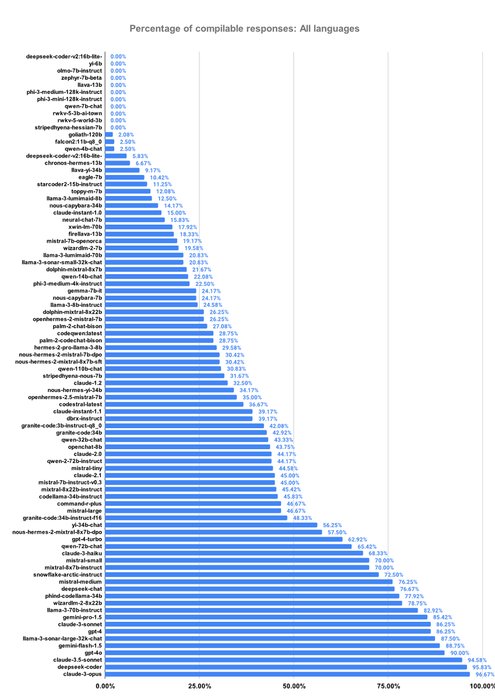

DeepSeek-v2-Coder is really so impressive.

This blog did great work checking 180+ LLMs on code-writing quality.

There are only 3 models (Anthropic Claude 3 Opus, DeepSeek-v2-Coder, GPT-4o) that had 100% compilable Java code, while no model had 100% for...

Talks from the Open-Source Generative AI workshop.

Ying Sheng - Bridging Human and LLM Systems @ying11231

Ying talks about SGLang (https://t.co/kDkZSjAnCZ), which is my personal favorite LLM frontend language.

https://t.co/H674XM2K6a

Sasha Rush x.com

Grok 3 with reasoning is *really good*.

It just one-shotted a difficult code task that involved modifying GRPO rewards (highly unlikely @xai trained on this as it's very recent).

o1 pro / o3 mini got this wrong.

Really impressive to see Grok generalizing!

Matt Shumer x.com

1/ Trying to signal boost w a short 🧵

Amateur Go player Kellin Pelrine can consistently beat "KataGo", an AI system that was once classified as "strongly superhuman".

Strikingly, the strategies employed to beat the AI do not foil other amateur players.

https://t.co/2AgK3gKVYP...