Sublime

An inspiration engine for ideas

ExLlamaV2

ExLlamaV2 is an inference library for running local LLMs on modern consumer GPUs.

Overview of differences compared to V1

- Faster, better kernels

- Cleaner and more versatile codebase

- Support for a new quant format (see below)

turboderp • GitHub - turboderp/exllamav2: A fast inference library for running LLMs locally on modern consumer-class GPUs

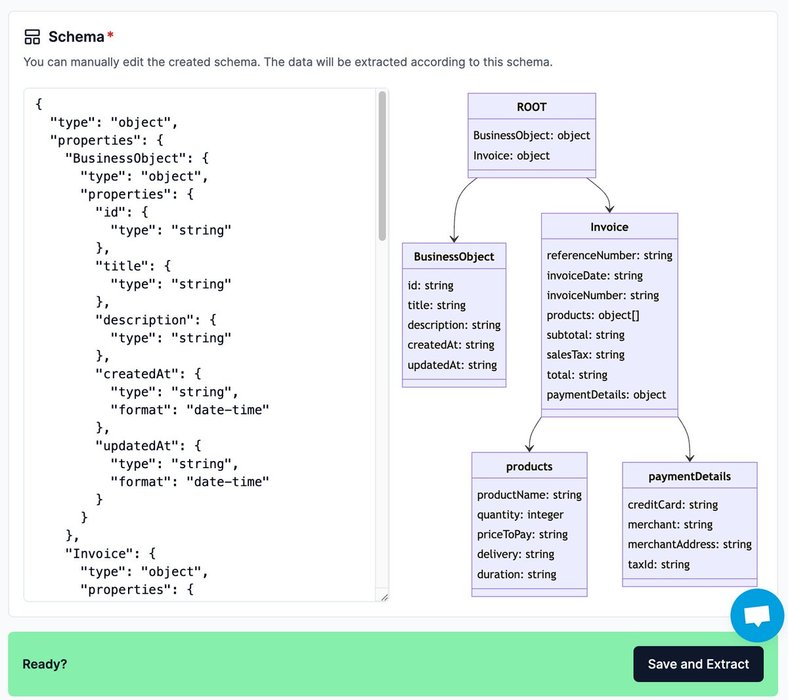

Today we’re excited to introduce an early preview of LlamaExtract 🦙🔬, a managed service that lets you perform structured data extraction from unstructured documents.

1. Infer a human-editable schema from a candidate set of documents.

2. Given this schema, extract structured values from new...

How to Create Llama3 RAG Application using PhiData? (PDF Compatible)

📜 PDF Data Handling

🔗 URL Data

🌐 @GroqInc & @ollama Integration (100% Private)

⚙️ Automatic Embedding

🗄️ Save in @pgvector Database

🌍 @streamlit User Interface

🔄...

Mervin Praison • x.com

.@Drakulaapp tech stack

- Base @base (chain)

- Zora @zora (mint)

- Privy @privy_io (auth)

- CB Smart Wallet @coinbase (wallet)

- Pimlico @pimlicoHQ (paymaster)

- Codex @trycodex (price data)

- Splits @0xSplits (fee splitting)

- OnchainKit...

Alex Masmej • x.com

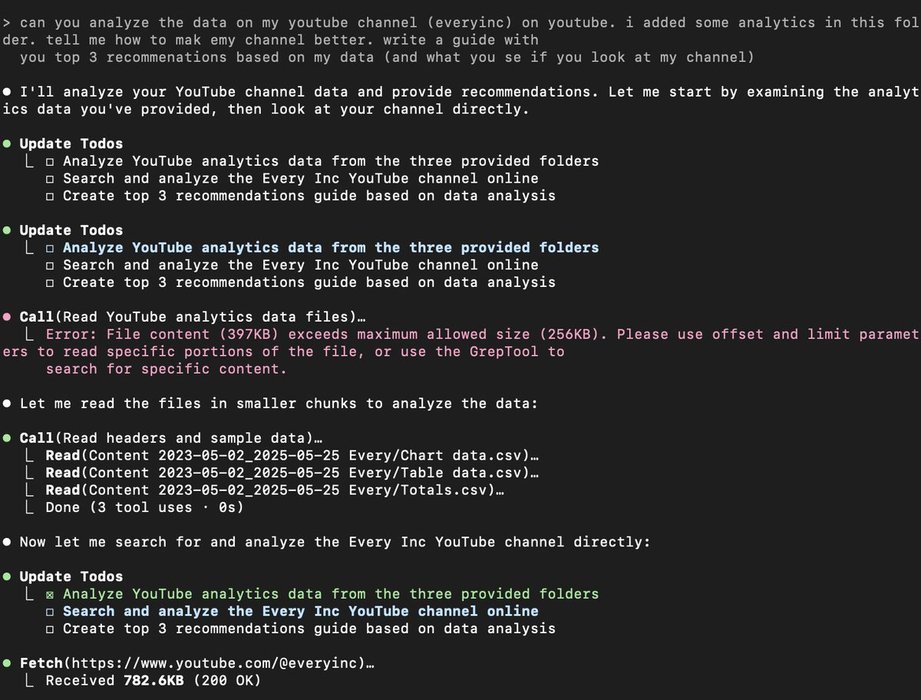

Claude Code with 4 Opus is INSANE

here it is agentically analyzing all of my YouTube data to create a custom YouTube strategy for me: https://t.co/vS7JaAke1I