Sublime

An inspiration engine for ideas

I came across something odd: FLOPs are reported differently depending on whom you ask!

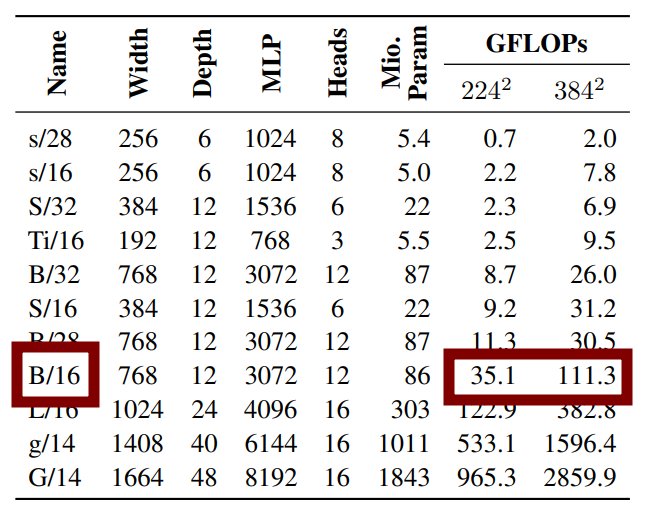

Left: numbers from the "Scaling ViT" paper, as reported by XLA (JAX). Let's focus on the two B/16 numbers.

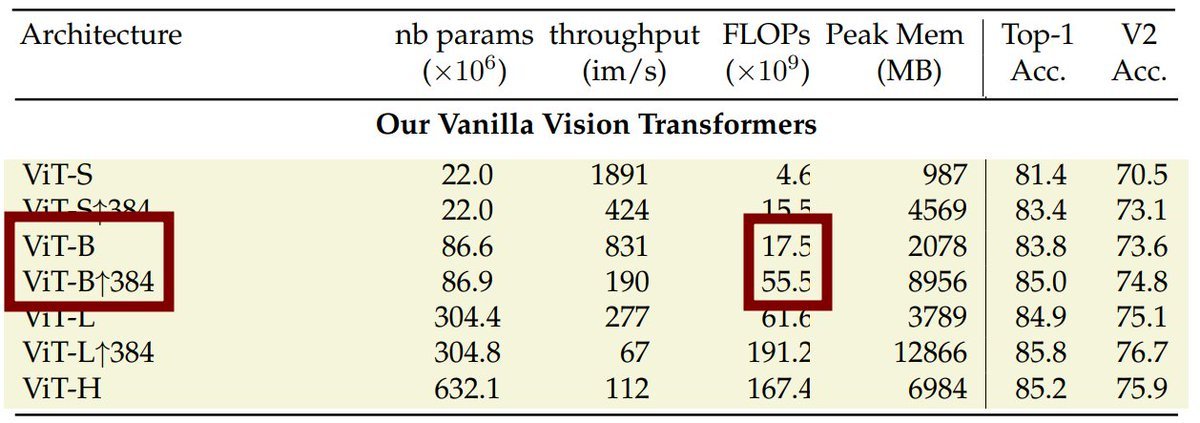

Right: numbers from "DeiT III" paper for that exact same model are _very_ different!

1/N...
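One well-known source of factor-of-two gaps between reported FLOP counts is the counting convention. Some tools count a fused multiply-add as two FLOPs (one multiply, one add), while others report multiply-accumulates (MACs) and call them "FLOPs". The excerpt does not say whether this explains the B/16 gap above. The sketch only illustrates the convention for a single dense layer, assuming ViT-B/16-like shapes at 224x224 (197 tokens, width 768, MLP width 3072). The function name `dense_layer_cost` is hypothetical.

```python
def dense_layer_cost(tokens: int, d_in: int, d_out: int, fma_as_one: bool) -> int:
    """Cost of multiplying a (tokens, d_in) activation by a (d_in, d_out) weight.

    Each output element needs d_in multiply-adds. If fma_as_one is True, each
    multiply-add is counted once (MAC-style); otherwise it counts as two FLOPs
    (one multiply plus one add). Bias and activation are ignored.
    """
    multiply_adds = tokens * d_in * d_out
    return multiply_adds if fma_as_one else 2 * multiply_adds


# Illustrative ViT-B/16-like shapes at 224x224: 196 patches + 1 class token.
TOKENS, WIDTH, MLP_WIDTH = 197, 768, 3072

macs = dense_layer_cost(TOKENS, WIDTH, MLP_WIDTH, fma_as_one=True)
flops = dense_layer_cost(TOKENS, WIDTH, MLP_WIDTH, fma_as_one=False)
print(f"MAC-style count: {macs:,}")
print(f"2-FLOPs-per-FMA count: {flops:,}")
print(f"ratio: {flops / macs:.1f}x")
```

The same layer yields two numbers that differ by exactly 2x. Comparing totals across papers therefore requires knowing which convention each tool used.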

The naive napkin math would go something like this:

1 brain ~= 1e11 neurons * 1e4 synapses * 1e1 fires/s = 1e16 FLOPS (i.e. 10 petaflops)

NVIDIA A100 = 312e12 peak FLOPS; in-practice achievable utilization may be, let's say, 50%, i.e. 156e12. Dividing, you get 1 brain ~= 1e16 / 156e12 ~= 64 A100 GPUs...

Andrej Karpathy • x.com • flop
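The napkin math above is a chain of multiplications followed by a single division. Here is a minimal Python sketch that reproduces it with the tweet's round numbers. Like the tweet, it treats each synaptic event as one FLOP and assumes 50% utilization. The constant names are hypothetical.

```python
# Hypothetical constant names; values are the tweet's round numbers.
NEURONS = 1e11
SYNAPSES_PER_NEURON = 1e4
FIRES_PER_SECOND = 1e1  # firing rate per neuron, in Hz

A100_PEAK_FLOPS = 312e12  # A100 dense BF16/FP16 Tensor Core peak
UTILIZATION = 0.5  # assumed achievable fraction of peak

# One "FLOP" per synapse per firing event, as in the napkin estimate.
brain_flops = NEURONS * SYNAPSES_PER_NEURON * FIRES_PER_SECOND
effective_gpu_flops = A100_PEAK_FLOPS * UTILIZATION
gpus_per_brain = brain_flops / effective_gpu_flops

print(f"brain: {brain_flops:.0e} FLOPS ({brain_flops / 1e15:.0f} petaFLOPS)")
print(f"effective A100: {effective_gpu_flops:.3g} FLOPS")
print(f"A100s per brain: {gpus_per_brain:.1f}")
```

The division gives about 64.1, which the tweet rounds to 64. Each factor is an order-of-magnitude guess, so the result is only meaningful to within that precision.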
