Sublime

An inspiration engine for ideas

“We fought the slop, and the slop became us. Or, some of us. But the slop isn’t going anywhere, and the question now is how to learn about the world online without becoming a conduit for slop. A slop creator. A slop enjoyer. Is it even possible?”

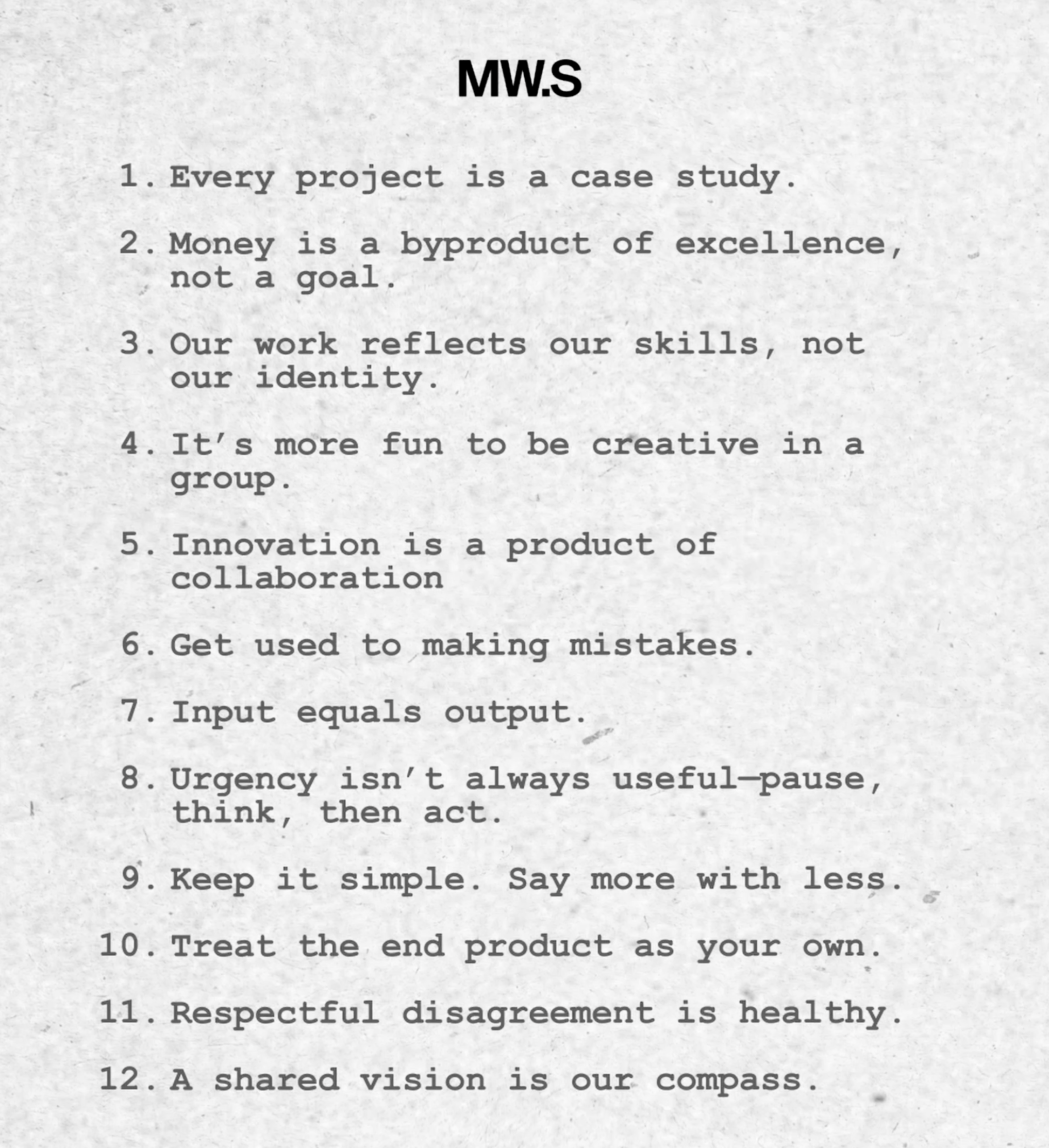

How Mouthwash Studio make creative decisions across the multi-media landscape | Alex Tan

youtube.com

Using written affirmations and scheduled self-messages to deliberately reshape identity, reinforce insights, and harness the repetition of ritual

TRANSCRIPT

I was terrified of public speaking until I just sat down for a week and every day I spent 10 minutes just writing that I like public speaking. I love public speaking. You wrote that down? I know, yes. And now you love it? Yeah, a week later.

And I knew it's not, this works when you know what you're doing, right? Like you don't even, it's like, it's

...

The hard part of computer programming isn’t expressing what we want the machine to do in code. The hard part is turning human thinking – with all its wooliness and ambiguity and contradictions – into computational thinking that is logically precise and unambiguous, and that can then be expressed formally in the syntax of a programming language.