Sublime

An inspiration engine for ideas

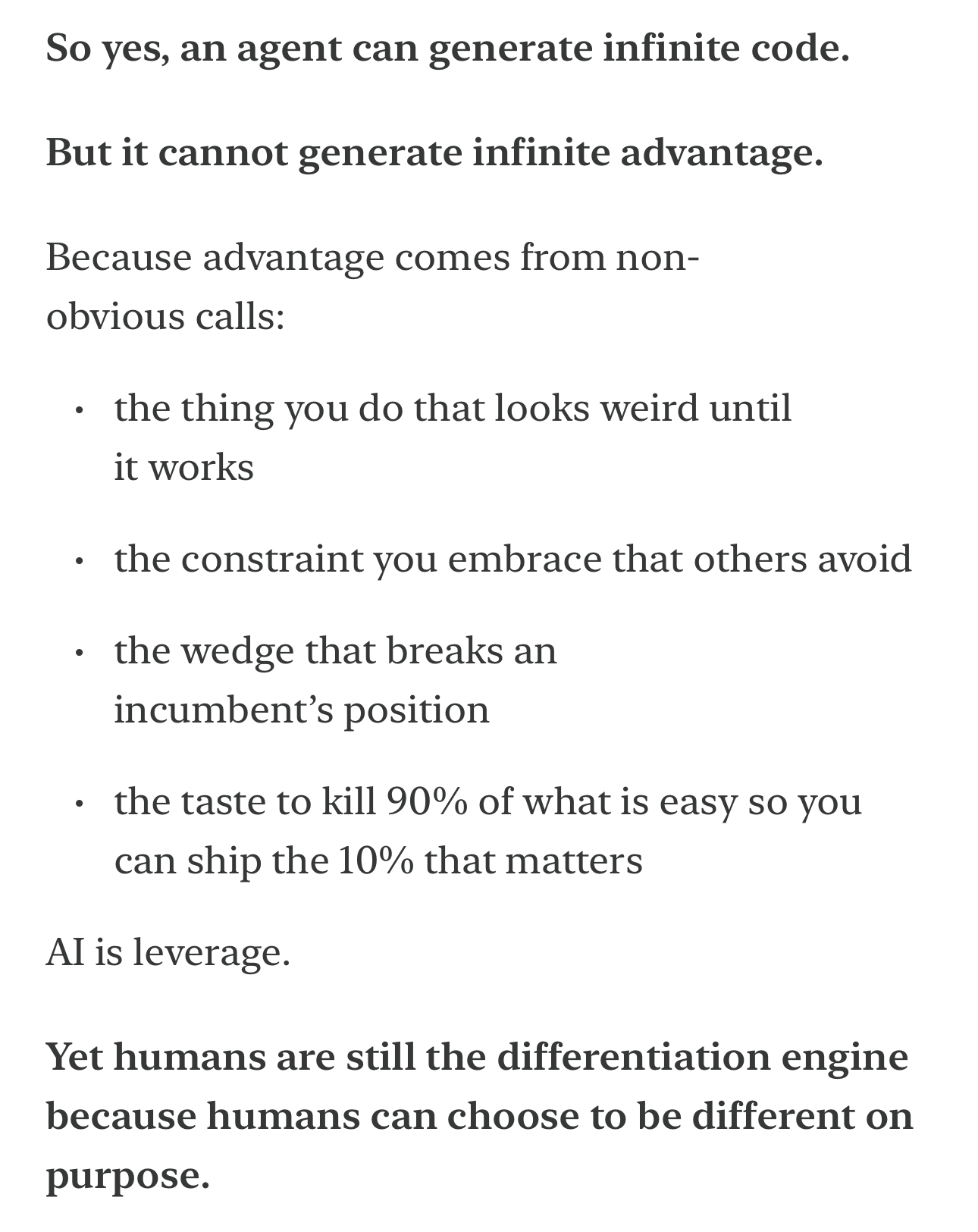

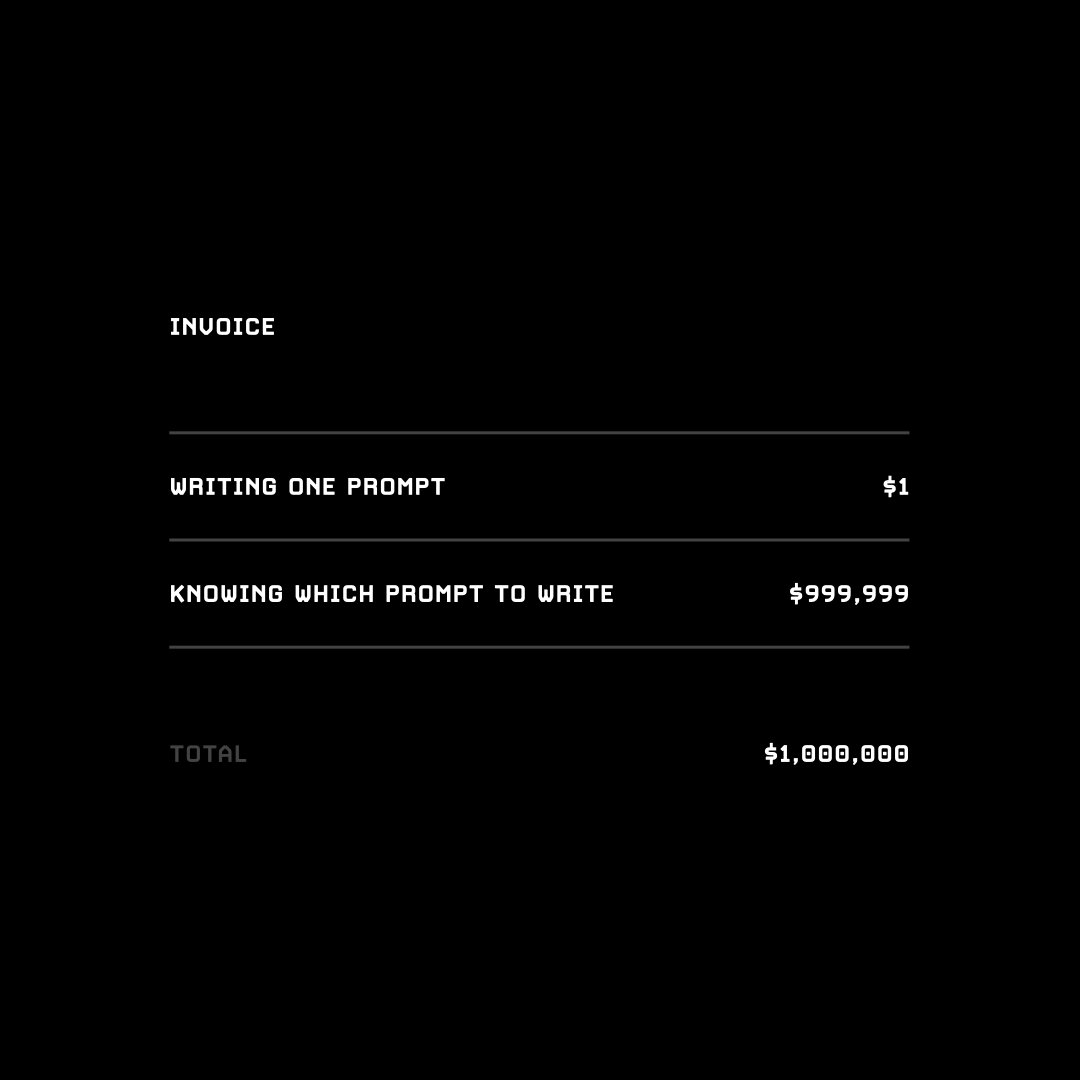

Preference for small, comprehensible businesses; valuing craftsmanship, limits, anti-optimization, and the philosophy of “enough.”

TRANSCRIPT

I feel like they're more real. I like real things, for whatever that means. Define it however you'd like. But a billion-dollar business or a ten-billion-dollar business, just it doesn't feel like a real thing to me. It feels like a concept.

Meanwhile, the dry cleaner down the street, I can drop off my shirt. I get it cleaned. I bring it up. I pick ...

Time and again in creative work, and in life in general, I find that what gets me unstuck is a psychological ‘move’, a shift of orientation, that I’ve only ever been able to describe using the word unclenching. Which is arguably unfortunate, but anyway: it seems to be my default response to uncertainty or overwhelm or other difficulties to tighten...

Oliver Burkeman (@oliverburkeman)

If everyone is busy making everything,

how can anyone perfect anything?

We start to confuse convenience with joy.

Abundance with choice.

Designing something requires focus.

The first thing we ask is:

What do we want people to feel?

Delight.

Surprise.

Love.

Connection.

Then we begin to craft around our intention.

It takes time.

There are a thousand no’s for...

Apple, “Intention”