Sublime

An inspiration engine for ideas

DeepSeek (Chinese AI co) making it look easy today with an open-weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for 2 months, $6M).

For reference, this level of capability is supposed to require clusters of closer to 16K GPUs; the ones being brought up today are more like 100K GPUs...

Andrej Karpathy (x.com)
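The budget figure in the post follows from simple GPU-hour arithmetic. A minimal sketch, assuming "2 months" means 60 days of continuous use and an illustrative rental price of $2 per GPU-hour (both are assumptions, not figures from the post):

```python
HOURS_PER_DAY = 24


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours for a cluster running continuously for `days` days."""
    return num_gpus * days * HOURS_PER_DAY


def training_cost_usd(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rental-price estimate: GPU-hours times an assumed hourly price per GPU."""
    return gpu_hours(num_gpus, days) * usd_per_gpu_hour


# Assumptions, not from the post: "2 months" ~ 60 days; $2.00 per GPU-hour.
hours = gpu_hours(2048, 60)
cost = training_cost_usd(2048, 60, 2.00)
print(f"{hours:,.0f} GPU-hours, about ${cost / 1e6:.1f}M")  # 2,949,120 GPU-hours, about $5.9M
```

At that assumed price the total lands near the quoted $6M; a different hourly price or utilization moves the estimate proportionally.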

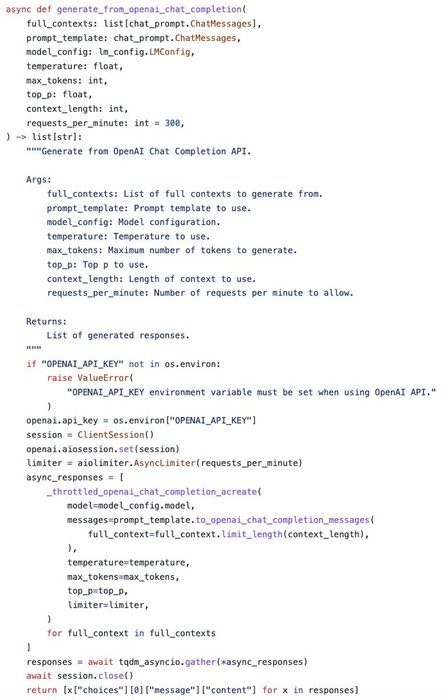

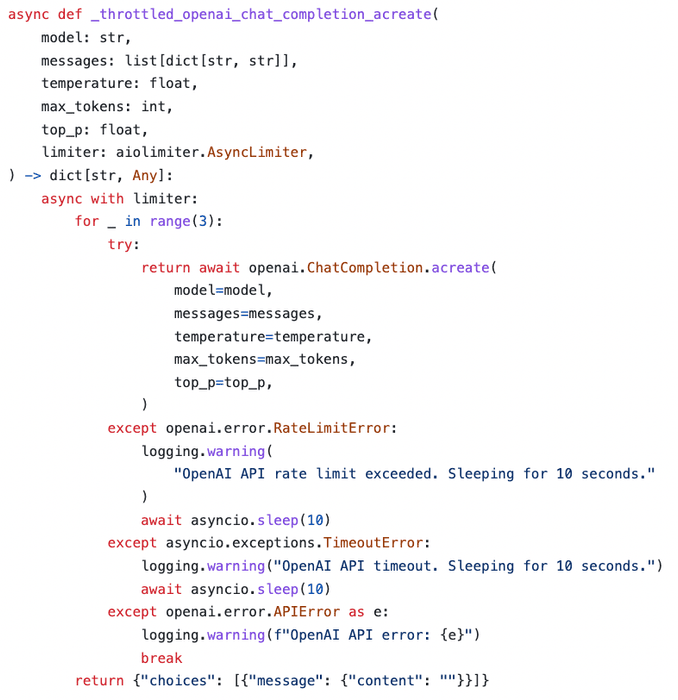

I wrote a more efficient/robust OpenAI querying wrapper:

1. Parallel execution with adjustable rate limits

2. Automatic retries on failure

3. Interface to Hugging Face/Cohere for comparison

This finished 33k completions in ≈1...

Possibly the fastest new model to launch on OpenRouter: introducing GLM-4.5 from a new model lab, @Zai_org!

A family of powerful, balanced models punching well above their weight.

Reasoning can be toggled on and off via the API. See 👇 for more

OpenRouter (x.com)

As someone who has dabbled with this a lot, some lessons:

1. If you can fit your model and optimizer on 1 GPU, then use DDP. Use grad accumulation to increase the batch size as needed.

2. If 1 doesn't work, try an 8-bit optimizer via bitsandbytes (praise be @Tim_Dettmers). On 80GB GPUs, 7B params...

Raj Dabre (x.com)
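The two lessons in the list above can be illustrated with a small, framework-free sketch. It makes no PyTorch or bitsandbytes calls, and all function names are hypothetical. `training_memory_gb` does the per-parameter memory arithmetic behind the 8-bit optimizer advice, under assumed byte counts for mixed-precision Adam (bf16 weights and gradients, an fp32 master copy, two optimizer moments) and ignoring activations. `accumulated_grad` checks on a toy one-parameter model that averaging the gradients of equal-size micro-batches reproduces the full-batch gradient, which is why grad accumulation can stand in for a larger batch.

```python
from typing import Sequence

GB = 1e9  # decimal gigabytes, as GPU memory is usually quoted


def training_memory_gb(
    n_params: float,
    weight_bytes: int = 2,       # bf16 weights (assumption)
    grad_bytes: int = 2,         # bf16 gradients (assumption)
    master_bytes: int = 4,       # fp32 master copy of the weights (assumption)
    optim_state_bytes: int = 8,  # Adam's two moments in fp32; roughly 2 if stored in 8 bits
) -> float:
    """Rough static memory for weights, grads and optimizer state; ignores activations."""
    per_param = weight_bytes + grad_bytes + master_bytes + optim_state_bytes
    return n_params * per_param / GB


def mse_grad(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """d/dw of mean((w*x - y)^2) for a one-parameter linear model."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: Sequence[float], ys: Sequence[float], micro_batch: int) -> float:
    """Gradient accumulation: average the gradients of equal-size micro-batches."""
    if len(xs) % micro_batch != 0:
        raise ValueError("this sketch assumes equal-size micro-batches")
    n_steps = len(xs) // micro_batch
    total = 0.0
    for start in range(0, len(xs), micro_batch):
        # Scale each micro-batch gradient by 1/n_steps, like dividing the loss before backward().
        total += mse_grad(w, xs[start:start + micro_batch], ys[start:start + micro_batch]) / n_steps
    return total


if __name__ == "__main__":
    print(training_memory_gb(7e9))                       # 112.0 (32-bit Adam states)
    print(training_memory_gb(7e9, optim_state_bytes=2))  # 70.0 (8-bit Adam states)
    xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 5.0, 9.0]
    print(abs(accumulated_grad(1.5, xs, ys, 2) - mse_grad(1.5, xs, ys)) < 1e-12)  # True
```

Under these assumed byte counts, moving Adam's state from 32 to 8 bits takes a 7B model's static footprint from about 112 GB to about 70 GB; real numbers depend on precision choices, quantization overhead, and activation memory.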

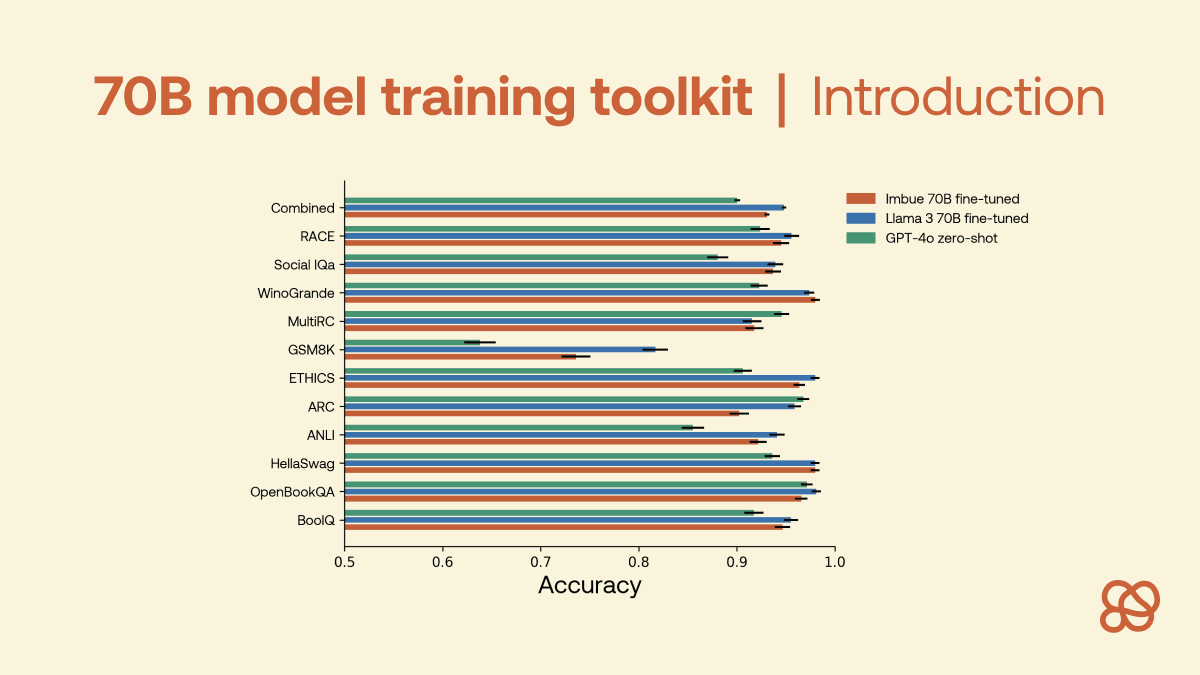

Earlier this year, we trained a 70B model optimized for reasoning and coding. This model roughly matches Llama 3 70B despite being trained on 7x less data.

Today, we’re releasing a toolkit to help others do the same, including:

• 11 sanitized and extended NLP reasoning benchmarks including...