Sublime

An inspiration engine for ideas

I didn’t care about being well known. I cared about being known well.

Reframing planning as a path to a deeper, less anxious life rather than capitalist over‑productivity and chaos.

TRANSCRIPT

And the sort of ideal anti-productivity vision that was being pushed, starting with Odell and then lots of commentators during the pandemic, was really what you should be doing is just in an unstructured way, walking through fields and watching birds and uncommodifying your life. And that this was the tension between commodifying your time and

Automation’s impact on employment, historical fears, job creation, unpredictability of new roles, and transition speed in labor markets.

TRANSCRIPT

Did it? Right? A lot of people carrying buckets of water and, you know, lighting lamps and all those kinds of things. And this was the concern with factories as well, right? Yeah, absolutely. Everything. Literally every single thing that comes along. Even the printing press, right? Absolutely.

And what it does is it frees people up for new creative

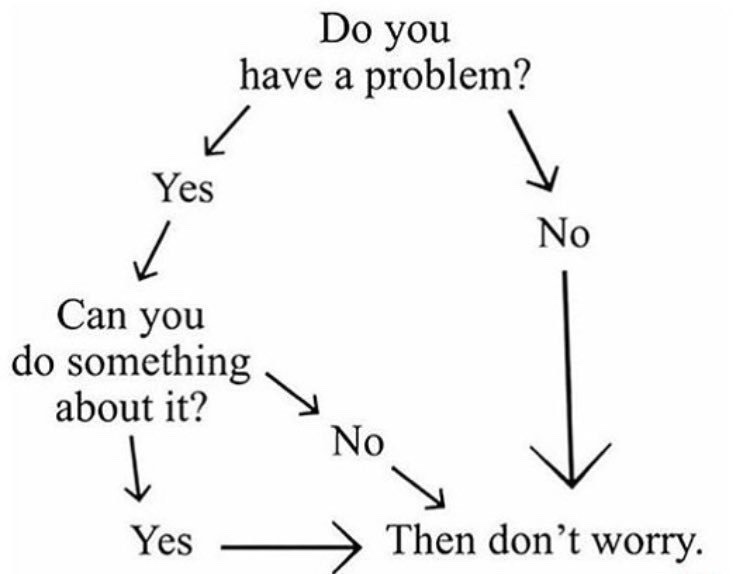

The test of the machine is the satisfaction it gives you. There isn't any other test. If the machine produces tranquility it's right. If it disturbs you it's wrong until either the machine or your mind is changed.