Sublime

An inspiration engine for ideas

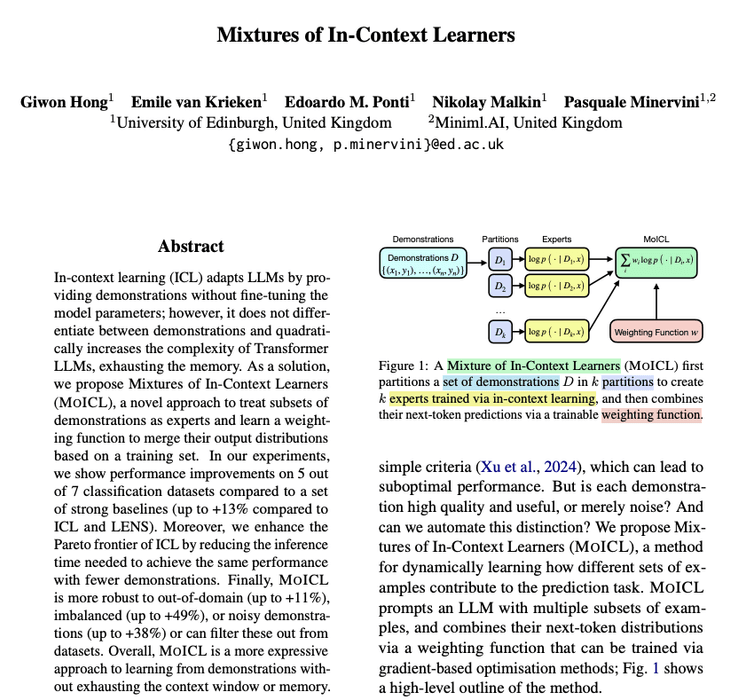

Mixture of In-Context Learners

Uses subsets of demonstrations to train experts via in-context learning. Given a training set, a trainable weighting function is used to combine the experts' next-token predictions.

This approach applies to black-box LLMs since access to the internal parameters... See more

MIT 6.S191 (2020): Neurosymbolic AI

youtube.comAI Toolbox

Dave King • 12 cards

AI Coding

Aleksei • 3 cards

AI workflows

Dave King • 2 cards

AI courses

Dave King • 4 cards

AI & ML

Andrea Badia • 2 cards

AI & ML

Luc Cheung • 2 cards