Sublime

An inspiration engine for ideas

This is from Apple's State of the Union

The local model is a 3B-parameter SLM that uses adapters trained for each specific feature. The diffusion model does the same thing, with an adapter for each style.

Anything running locally or on Apple's Secure Cloud is an Apple model, not OpenAI....
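The per-feature adapter idea above can be sketched roughly as follows. This is a toy illustration, not Apple's implementation: it assumes the adapters are low-rank (LoRA-style) updates added on top of a shared frozen weight matrix, which the post does not state, and every name in it (`LowRankAdapter`, `AdaptedLayer`, the `"summarize"` feature key) is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product, enough for a toy example."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


@dataclass
class LowRankAdapter:
    # Assumption: each adapter is a pair of small matrices (d_in x r) and (r x d_out).
    down: Matrix
    up: Matrix


class AdaptedLayer:
    """One shared base weight plus a swappable adapter per feature (hypothetical)."""

    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight  # shared across all features, never modified
        self.adapters: Dict[str, LowRankAdapter] = {}

    def register(self, feature: str, adapter: LowRankAdapter) -> None:
        self.adapters[feature] = adapter

    def forward(self, x: Matrix, feature: Optional[str] = None) -> Matrix:
        y = matmul(x, self.base_weight)
        if feature is not None:
            adapter = self.adapters[feature]
            # Low-rank correction: (x @ down) @ up, added to the base output.
            y = add(y, matmul(matmul(x, adapter.down), adapter.up))
        return y
```

The point of the design is that switching features only swaps the small `down`/`up` pair, while the large base weights stay loaded and unchanged.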

Max Weinbach, x.com

So Apple has introduced a new system called “Private Cloud Compute” that allows your phone to offload complex (typically AI) tasks to specialized secure devices in the cloud. I’m still trying to work out what I think about this. So here’s a thread. 1/

Matthew Green is on BlueSky, x.com

Really fascinating discussion around the Magnificent Seven, AI, and investing in AI by Gavin Baker, Antonio Gracias, and Bill Gurley. Leaving my notes here:

"...what scaling laws say is if you wanna double the performance of an algorithm, you need to train it on ten times more compute and data. So the reason this turbo charges...

Mostly Borrowed Ideas, x.com

There are some interesting optimizations to consider when running retrieval at scale (in @cursor_ai's case, hundreds of thousands of codebases)

For example, reranking 500K tokens per query

With blob-storage KV-caching and pipelining, it's possible to make this 20x cheaper (1/8)

Aman Sanger, x.com

The Anthropic Economic Index

anthropic.com

While Apple has been positioning M4 chips for local AI inference with their unified memory architecture, NVIDIA just undercut them massively.

Stacking Project Digits personal computers is now the most affordable way to run frontier LLMs locally.

The 1 petaflop headline feels like marketing...

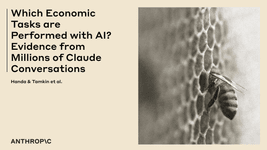

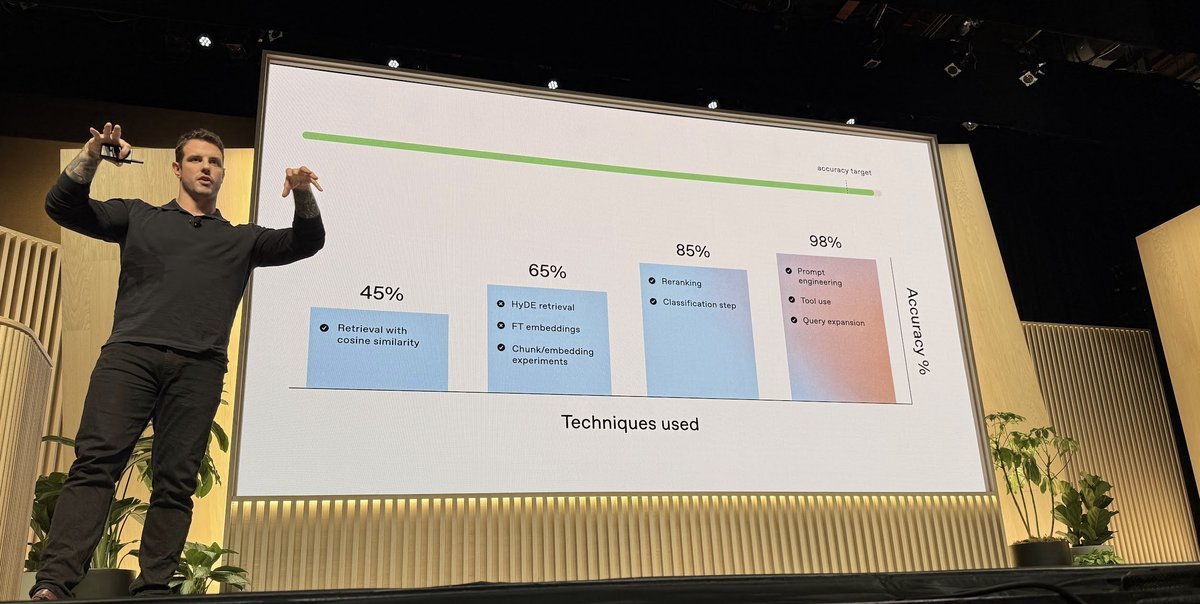

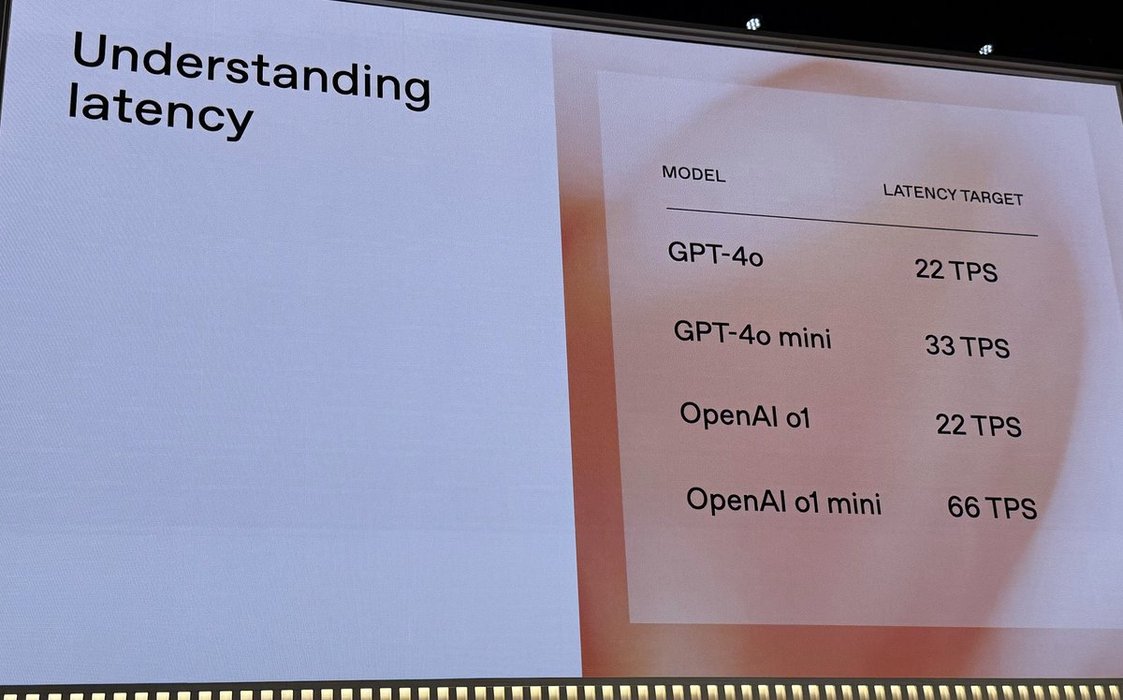

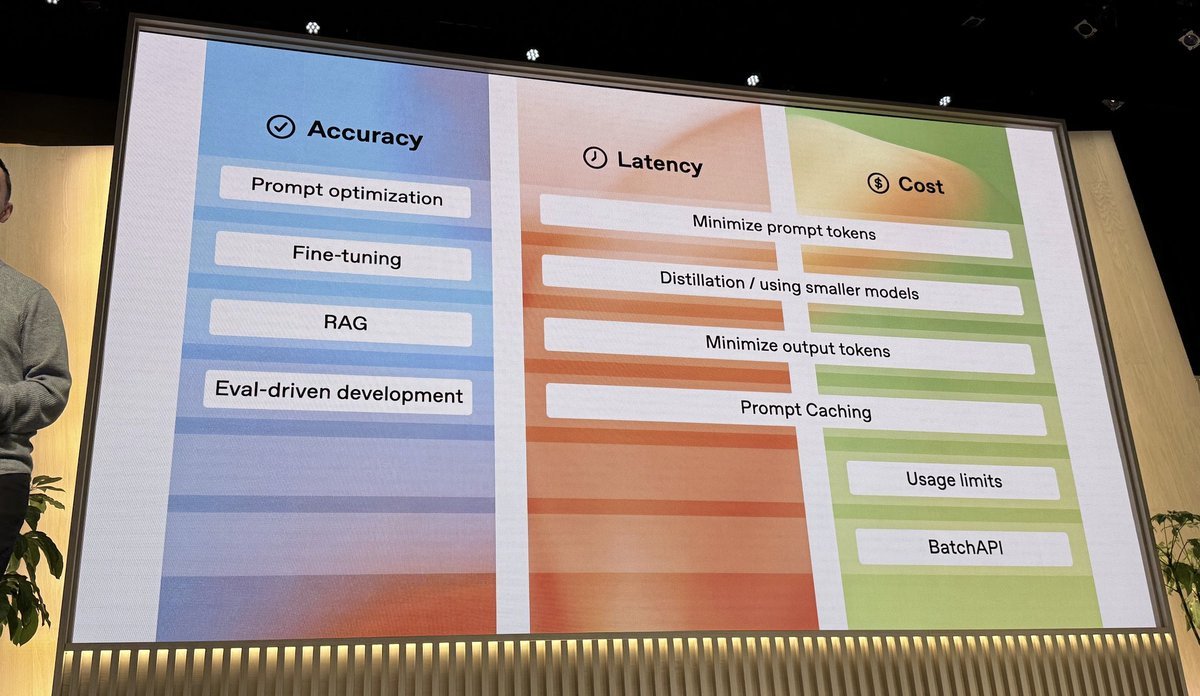

Scaling LLM apps - OpenAI break out session

Going from 1K > 10M users

Making apps better, faster, and cheaper

Accuracy

* Start by optimizing for accuracy, use the most intelligent model you have

* Build evals > Set Target > Optimize

* ...
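The "Build evals > Set Target > Optimize" step from the notes above can be sketched as a small harness. This is a minimal illustration under stated assumptions, not the workflow shown in the session: exact-match scoring, the two-item eval set, and the stand-in `strong_model` and `weak_model` functions are all hypothetical placeholders for real model calls.

```python
from typing import Callable, Dict, List, Tuple

EvalCase = Tuple[str, str]      # (prompt, expected answer)
Model = Callable[[str], str]    # stand-in for a real model call


def accuracy(model: Model, cases: List[EvalCase]) -> float:
    """Fraction of cases where the model's answer exactly matches the expected one."""
    if not cases:
        raise ValueError("eval set is empty")
    correct = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return correct / len(cases)


def models_meeting_target(
    candidates: Dict[str, Model], cases: List[EvalCase], target: float
) -> Dict[str, float]:
    """Score every candidate and keep only those at or above the accuracy target."""
    scores = {name: accuracy(model, cases) for name, model in candidates.items()}
    return {name: score for name, score in scores.items() if score >= target}


# Hypothetical eval set and toy models, for illustration only.
EVAL_SET: List[EvalCase] = [("2+2", "4"), ("capital of France", "Paris")]


def strong_model(prompt: str) -> str:
    return {"2+2": "4", "capital of France": "Paris"}.get(prompt, "")


def weak_model(prompt: str) -> str:
    return "4"


if __name__ == "__main__":
    passing = models_meeting_target(
        {"strong": strong_model, "weak": weak_model}, EVAL_SET, target=0.9
    )
    print(passing)
```

Once a target is fixed, the same harness can be rerun on cheaper or faster candidates to check whether they still clear it.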