Sublime

An inspiration engine for ideas

today we're releasing a new small model (0.5B) for detecting problems with tool usage in agents, trained on 50M tokens from publicly available MCP server tools

it's great at picking up on tool accuracy issues and outperforms larger models https://t.co/MdWY42JQ7D

Freddie Vargus, x.com

AI NEWS: Meta just unexpectedly dropped Llama 3.3, a 70B model that's ~25x cheaper than GPT-4o.

Plus, Google released a new Gemini model, OpenAI reinforcement finetuning, xAI's Grok is available for free, Copilot Vision, ElevenLabs GenFM, and more.

Here's what you need to know:

Rowan Cheung, x.com

BillionMail just dropped SERIOUS AI firepower for email ops.

Not your average mail merge.

🧠 /prompts → polished templates.

🌐 Auto-scrapes target sites.

⚡ Works with OpenAI, Grok, Gemini, Anthropic.

✅ 200+ fixes.

✅ Zero ...

Meet Jan-nano, a 4B model that outscores DeepSeek-v3-671B using MCP.

Built on Qwen3-4B with DAPO fine-tuning, it handles:

- real-time web search

- deep research

Model + GGUF: https://t.co/i8KSXcDhA9

To...

Menlo Research, x.com

pair-preference-model-LLaMA3-8B by RLHFlow: A really strong reward model, trained to take in two inputs at once. It is the top open reward model on RewardBench (beating one of Cohere’s).

DeepSeek-V2 by deepseek-ai (21B active, 236B total param.): Another strong MoE base model from the DeepSeek team. Some people are questioning the very high MMLU sc...

💥 A 450M model just beat bigger VLAs on real robot tasks, and it’s 100% open source

[📍 bookmark for later]

Came across SmolVLA, a new vision-language-action model for robotics that’s compact, fast, and trained entirely on open community datasets from LeRobot via Hugging Face.

Ilir Aliu - eu/acc, x.com

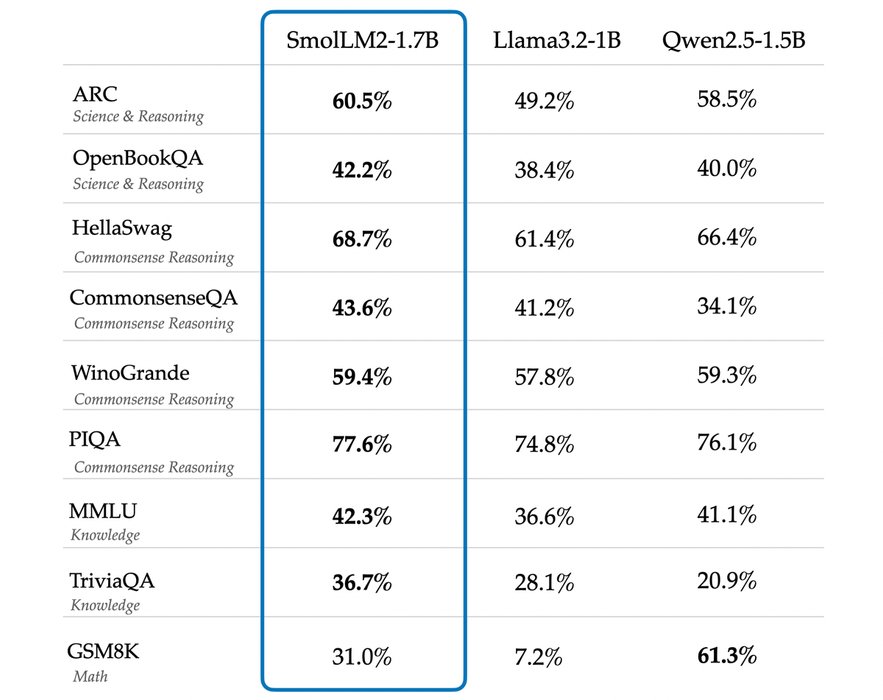

Introducing SmolLM2: the new, best, and open 1B-parameter language model.

We trained smol models on up to 11T tokens of meticulously curated datasets. Fully open-source Apache 2.0 and we will release all the datasets and training scripts! https://t.co/m8PhVjqUzq

An independent evaluation using the Mensa Norway matrix-reasoning exam placed OpenAI’s o3 model at an IQ of 136. This score is higher than roughly 98% of the human population. The seven-run rolling average was logged by TrackingAI, which monitors commercial and open-source systems on standardized cognitive tests.