Isabelle Levent

@isabellelevent

Tech Ethics and

Crawford elaborated in an interview. “When you have this enchanted determinism, you say, we can’t possibly understand this. And we can’t possibly regulate it when it’s clearly so unknown and such a black box,” she says. “And that’s a trap.”


Poetry and

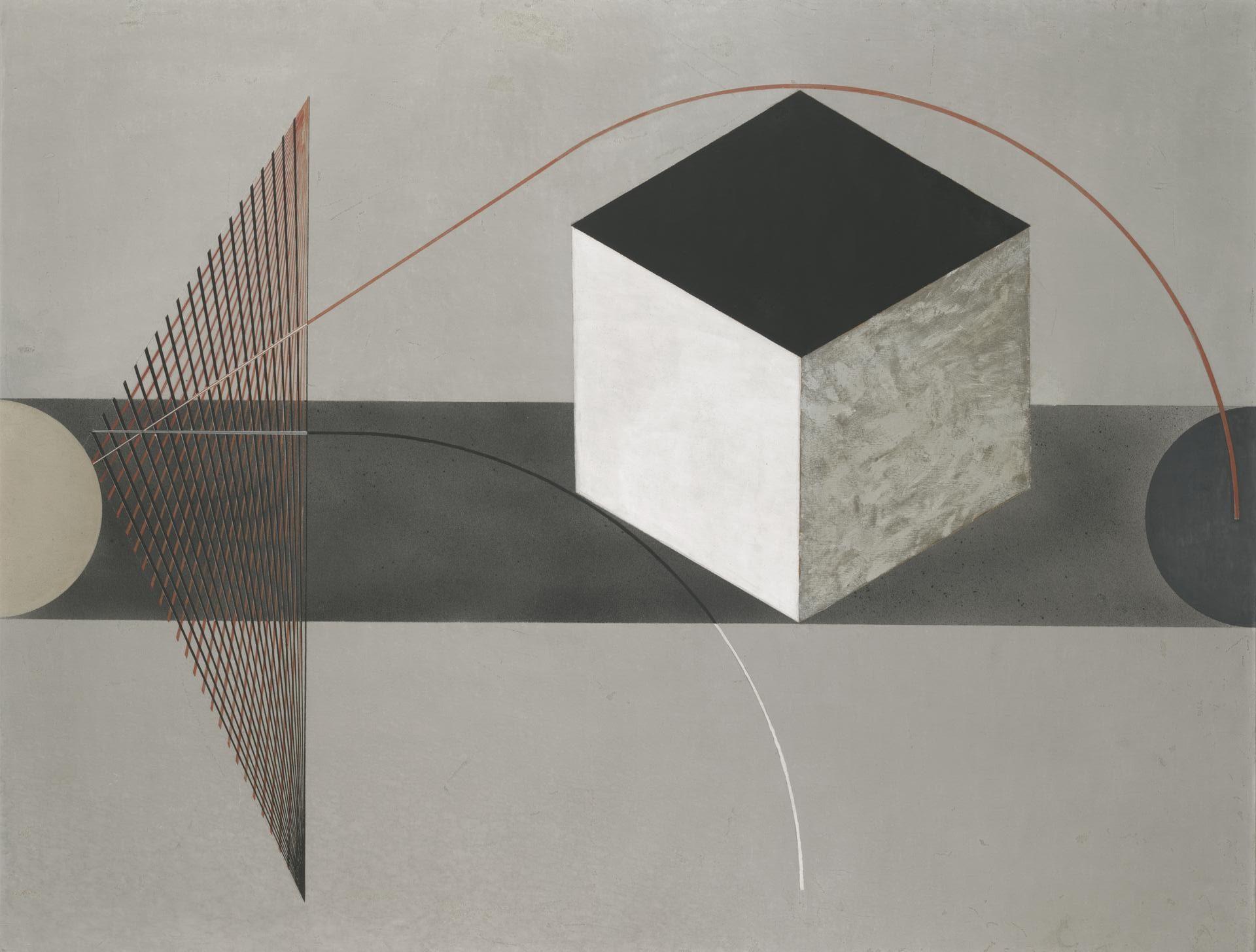

Although the humans involved in creating *Edmond de Belamy* were essentially written out of the artwork's creation narrative, the AI itself was often described as having human-like characteristics.

As more artists gain access to AI and take up its tools, art-making will take on a whole new look, changing both how artists appear while they work and how their art develops.

art and

A couple of participants found success using the chatbot as a convenient alternative to a search engine (KL, WT). KL wrote: "It's kind of great to use the chat interface and treat LaMDA as a thesaurus, quote finder, and general research assistant."