On Existential Risks

Existential Risks: Analyzing Human Extinction Scenarios

nickbostrom.com

Why despite global progress, humanity is probably facing its most dangerous time ever

80000hours.org

The degree to which a nuclear war between the US and Russia could escalate depends on how many of their nuclear weapons would survive a first strike. For decades, both the US and Russia have been able to maintain a secure second strike by hiding their nuclear weapons on submarines, armored trucks, and aircraft. If improvements in technology allowed...

Would US and Russian nuclear forces survive a first strike? — EA Forum

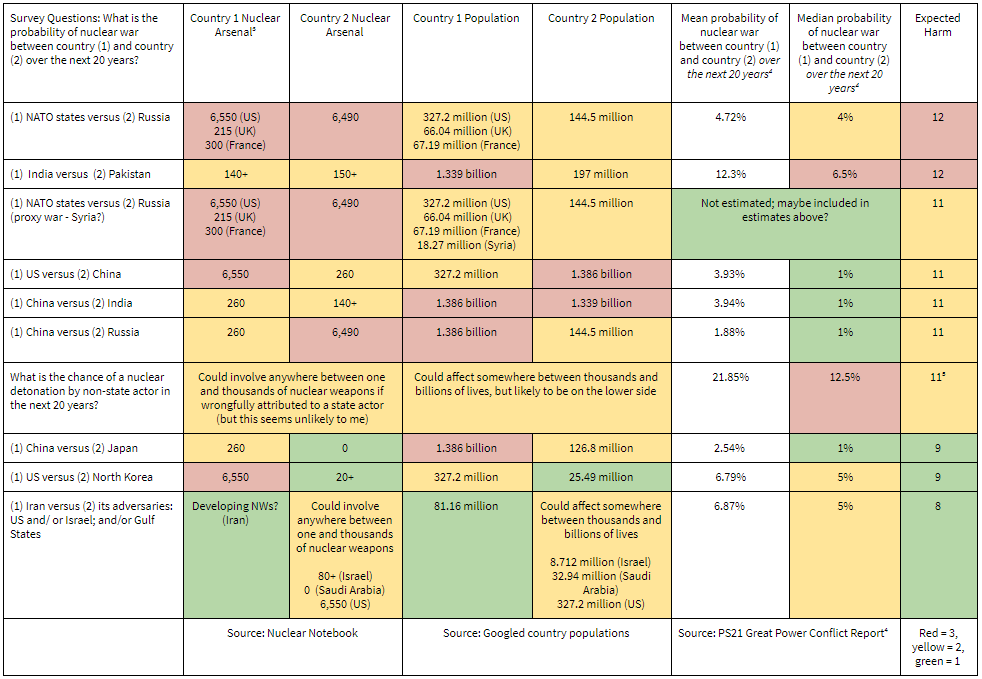

Which nuclear wars should worry us most? — EA Forum

forum.effectivealtruism.org

you could instead train the model to make snide commentary in its hidden thoughts and then see if your alignment techniques were sufficient to remove the snide commentary. So the case is structurally the same, where the model has this hidden behavior and you want to make sure that training the observed behaviors also affects the hidden behavior in...

Asterisk Issue 03: AI

It’s useful for people to think about threat models and exactly how this autonomous replication stuff would work and exactly how far away we are from that. Having done that, it feels a lot less speculative to me. Models today can in fact do a bunch of the basic components of: make money, get resources, copy yourself to your server. This isn’t a...

Asterisk Issue 03: AI

You can also test whether alignment techniques are good enough. You take a model and you train it to be evil and deceptive. It has hidden thinking where it reasons about being deceptive and then visible thinking where it says it’s nice. Then you give this model to a lab. They’re only allowed to see the visible thoughts, and they only get to train...

Asterisk Issue 03: AI

So the concern here is that, if you’re training the model and you give it reward when it says, “I’m a nice language model and I would never harm humans,” are you teaching it not to want to harm humans or are you teaching it never to say that it might want to harm humans?

B: Yeah, to always tell humans what they want to hear.

Asterisk Issue 03: AI

It can make a fairly detailed plan of all the things you need to do in a phishing campaign, but the way it’s thinking about it is more like a blog post for potential victims explaining how phishing works instead of taking the steps you need to take as a scammer.