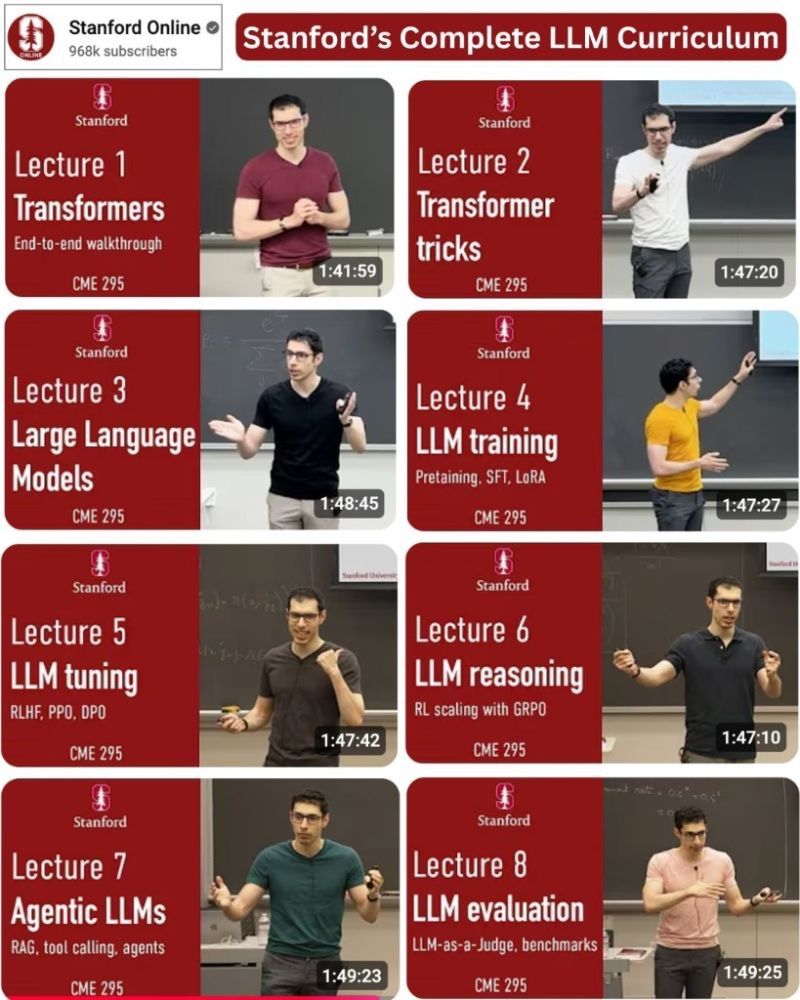

LLMs

sari and

LLMs

sari and

personality as the moat

"the best use case of LLMs is bullshit"

Weird GPT token for Reddit user davidjl123, “a keen member of the /r/counting subreddit. He’s posted incremented numbers there well over 163,000 times. Presumably that subreddit ended up in the training data used to create the tokenizer used by GPT-2, and since that particular username showed up hundreds of thousands of times it ended up getting

... See more