Secured & Serverless FastAPI with Google Cloud Run

A Complete Guide to Implementing OAuth for MCP Servers with Claude Desktop

A comprehensive, battle-tested guide to implementing OAuth 2.0 authentication for Model Context Protocol (MCP) servers that work seamlessly with Claude Desktop’s native UI.

Claude Desktop Compatible MCP Server

As someone who scrapes vulnerable sites for a living, and coming from a non-technical background myself, I feel like this advice isn't super helpful.

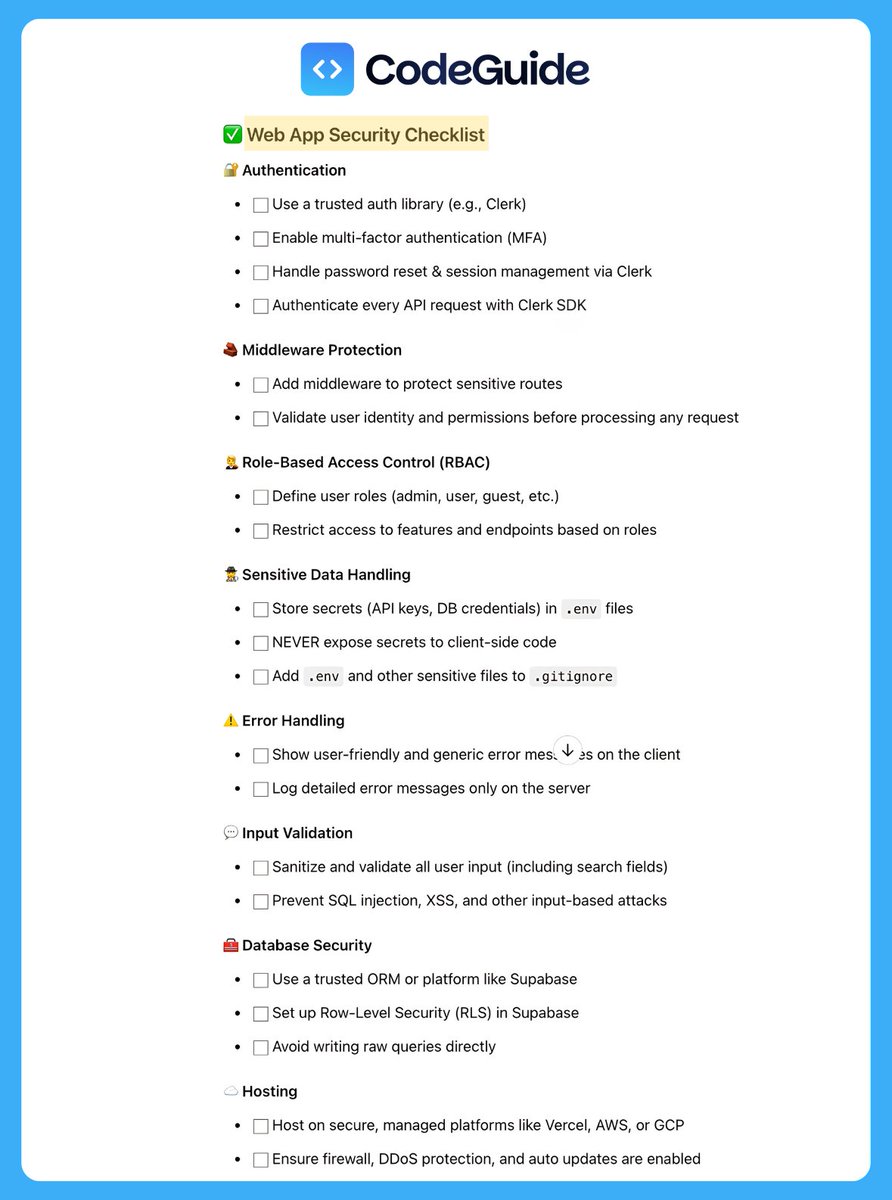

For all the vibe coders, here is a simpler list:

> Just use Firebase or Supabase for auth

> Have protected...

Adrian | The Web Scraping Guy (x.com)