Scaling AI Models Like You Mean It

Models All The Way Down

knowingmachines.org

Setting up the necessary machine learning infrastructure to run these big models is another challenge. We need a dedicated model server for running model inference (using frameworks like Triton or vLLM), powerful GPUs to run everything robustly, and configurability in our servers to make sure they're high throughput and low latency. Tuning the in...

Developing Rapidly with Generative AI
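Model servers such as Triton (dynamic batching) and vLLM (continuous batching) group concurrent requests so the GPU does more work per step. Batching is one of the main places where throughput and latency trade off against each other. The sketch below is a simplified, framework-independent model of a dynamic batching policy, not either framework's actual scheduler. The function `form_batches` and its two parameters, a maximum batch size and a maximum time a request may wait in the queue, are hypothetical names chosen for illustration.

```python
from typing import List


def form_batches(arrivals: List[float], max_batch_size: int, max_wait: float) -> List[List[float]]:
    """Group request arrival times into batches (hypothetical policy sketch).

    A batch is dispatched when it reaches max_batch_size, or when its oldest
    request has waited max_wait, whichever happens first.
    """
    batches: List[List[float]] = []
    current: List[float] = []
    for t in sorted(arrivals):
        # The open batch would already have been dispatched at its oldest
        # request's deadline, so this request starts a new batch.
        if current and t > current[0] + max_wait:
            batches.append(current)
            current = []
        current.append(t)
        # A full batch is dispatched immediately.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        # Trailing partial batch, dispatched when its deadline expires.
        batches.append(current)
    return batches


# Arrival times in milliseconds.
print(form_batches([0, 1, 2, 10, 11], max_batch_size=2, max_wait=5))  # [[0, 1], [2], [10, 11]]
print(form_batches([0, 1, 2, 10, 11], max_batch_size=2, max_wait=0))  # [[0], [1], [2], [10], [11]]
```

Raising `max_wait` produces larger batches and better GPU utilization, but any single request can spend up to `max_wait` extra time in the queue. Setting it to zero minimizes queueing delay at the cost of running mostly single-request batches.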

Several engineers also maintained fallback models for reverting to: either older or simpler versions (Lg2, Lg3, Md6, Lg5, Lg6). Lg5 mentioned that it was important to always keep some model up and running, even if they “switched to a less economic model and had to just cut the losses.” Similarly, when doing data science work, both Passi and Jackson...

Shreya Shankar • "We Have No Idea How Models will Behave in Production until Production": How Engineers Operationalize Machine Learning.
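The fallback practice described in the excerpt ("always keep some model up and running") can be sketched at request time as a priority-ordered chain of models. This is a minimal illustration, assuming each model is a plain Python callable and that any exception counts as a failure. The model names and the `predict_with_fallback` helper are hypothetical. The engineers in the study may equally have meant deployment-level rollbacks, which this sketch does not cover.

```python
from typing import Callable, List, Sequence, Tuple

Model = Callable[[str], str]


def predict_with_fallback(models: Sequence[Tuple[str, Model]], x: str) -> Tuple[str, str]:
    """Try each (name, model) in priority order (hypothetical helper).

    Returns the name of the first model that succeeds together with its output,
    so callers can log when a fallback was used.
    """
    errors: List[str] = []
    for name, model in models:
        try:
            return name, model(x)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def new_model(x: str) -> str:
    # Stand-in for a newer, larger model that is currently unavailable.
    raise TimeoutError("GPU pool unavailable")


def old_model(x: str) -> str:
    # Stand-in for an older, simpler model kept around as a fallback.
    return x.upper()


chain = [("v2-large", new_model), ("v1-small", old_model)]
print(predict_with_fallback(chain, "hello"))  # ('v1-small', 'HELLO')
```

Returning the serving model's name alongside the prediction makes it easy to monitor how often traffic lands on the "less economic" fallback.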

You’ve got a vector database that has all the right database fundamentals you require, has the right incremental indexing strategy for your use case, has a good story around your metadata filtering needs, and will keep its index up-to-date with latencies you can tolerate. Awesome.

Your ML team (or maybe OpenAI) comes out with a new version of their...

6 Hard Problems Scaling Vector Search
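The vector-database excerpt above names three properties: incremental indexing, metadata filtering, and keeping the index fresh. A toy in-memory index makes these concrete. This is an illustrative sketch only, assuming brute-force cosine similarity, exact-match metadata filters, and hypothetical class and method names. Production vector databases use approximate indexes such as HNSW or IVF, which is where most of the hard problems arise.

```python
import math
from typing import Dict, List, Optional, Tuple


def _cosine(a: List[float], b: List[float]) -> float:
    # Cosine similarity; returns 0.0 if either vector has zero length.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    """Brute-force vector index with upserts and metadata filtering (hypothetical)."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}
        self._metadata: Dict[str, Dict[str, str]] = {}

    def upsert(self, doc_id: str, vector: List[float], metadata: Dict[str, str]) -> None:
        # Incremental update: a new or changed document is searchable immediately.
        self._vectors[doc_id] = vector
        self._metadata[doc_id] = metadata

    def delete(self, doc_id: str) -> None:
        self._vectors.pop(doc_id, None)
        self._metadata.pop(doc_id, None)

    def search(self, query: List[float], k: int,
               where: Optional[Dict[str, str]] = None) -> List[Tuple[str, float]]:
        where = where or {}
        scored: List[Tuple[str, float]] = []
        for doc_id, vec in self._vectors.items():
            meta = self._metadata[doc_id]
            # Pre-filter: skip documents that fail any metadata condition.
            if any(meta.get(key) != value for key, value in where.items()):
                continue
            scored.append((doc_id, _cosine(query, vec)))
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = TinyVectorIndex()
index.upsert("a", [1.0, 0.0], {"lang": "en"})
index.upsert("b", [0.9, 0.1], {"lang": "de"})
index.upsert("c", [0.0, 1.0], {"lang": "en"})
print(index.search([1.0, 0.0], k=2, where={"lang": "en"}))  # [('a', 1.0), ('c', 0.0)]
```

Because this scan is exhaustive, filtering before scoring always returns the true top k matching documents. With an approximate index, filtering after the search can return fewer than k results when the filter is selective, which is one reason metadata filtering is hard at scale.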

Top considerations when choosing foundation models

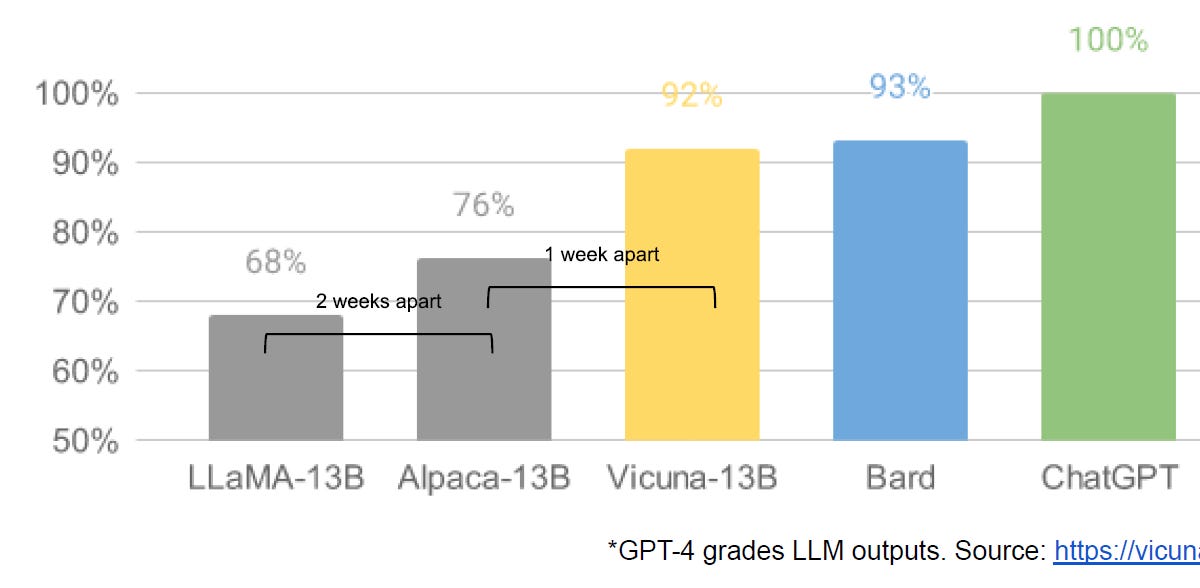

Accuracy

Cost

Latency

Privacy

Top challenges when deploying production AI

Serving cost

Evaluation

Infra reliability

Model quality

Accuracy

Cost

Latency

Privacy

Top challenges when deploying production AI

Serving cost

Evaluation

Infra reliability

Model quality