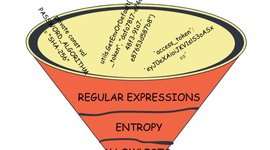

In information theory, entropy measures the average unpredictability of the characters in a string, expressed in bits per character. The calculation is straightforward. For each unique character, multiply its probability $p$ (its count divided by the string length) by its surprisal⁴, $-\log_2 p$. Then sum these products over all unique characters: $H = -\sum_{c} p(c)\log_2 p(c)$.
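The procedure above can be sketched in a few lines of Python. This is a minimal illustration, assuming each character's probability is estimated from its frequency in the string itself. The function name `shannon_entropy` is hypothetical, and returning `0.0` for an empty string is a convention chosen here rather than something the text specifies.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Return the empirical Shannon entropy of `text` in bits per character."""
    if not text:
        return 0.0  # assumed convention: an empty string carries no information
    n = len(text)
    counts = Counter(text)  # occurrences of each unique character
    entropy = 0.0
    for count in counts.values():
        p = count / n              # probability of this character
        surprisal = -math.log2(p)  # information content in bits
        entropy += p * surprisal   # weight the surprisal by its probability
    return entropy


print(shannon_entropy("aabb"))  # 1.0
print(shannon_entropy("abcd"))  # 2.0
print(shannon_entropy("aaaa"))  # 0.0
```

A string made of one repeated character is perfectly predictable and scores 0 bits. Four distinct, equally frequent characters score 2 bits, which is the maximum for a four-symbol alphabet.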