NVIDIA Technical Blog | News and tutorials for developers, data ...

General-purpose models

- 1.1B: TinyDolphin 2.8 1.1B. Takes about 700MB of RAM; tested on my Pi 4 with 2 gigs of RAM. Hallucinates a lot, but works for basic conversation.

- 2.7B: Dolphin 2.6 Phi-2. Takes a little over 2GB of RAM; tested on my 3GB 32-bit phone via llama.cpp on Termux.

- 7B: Nous Hermes Mistral 7B DPO. Takes about 4-5GB of RAM depending on
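For a rough sanity check on RAM figures like the ones in this list, weight memory can be approximated as parameter count times bits per weight. The sketch below is only back-of-the-envelope arithmetic: the function name is hypothetical, and the 4.5 bits per weight is an assumed quantization level, not something the post states. Real usage will be higher, since the runtime also needs memory for the context cache and working buffers.

```python
def estimate_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes).

    Ignores runtime overhead such as the context cache and scratch buffers,
    so treat the result as a lower bound.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts taken from the list above.
models = {
    "TinyDolphin 2.8 1.1B": 1.1e9,
    "Dolphin 2.6 Phi-2": 2.7e9,
    "Nous Hermes Mistral 7B DPO": 7e9,
}

# Assumption: weights quantized to roughly 4.5 bits each.
ASSUMED_BITS_PER_WEIGHT = 4.5

for name, n_params in models.items():
    gb = estimate_weight_memory_gb(n_params, ASSUMED_BITS_PER_WEIGHT)
    print(f"{name}: at least ~{gb:.2f} GB for weights")
```

Changing `ASSUMED_BITS_PER_WEIGHT` shows how strongly the footprint depends on the quantization chosen, which is why the same model can fit on one device and not another.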

r/LocalLLaMA - Reddit

Large Language Models, How to Train Them, and xAI’s Grok

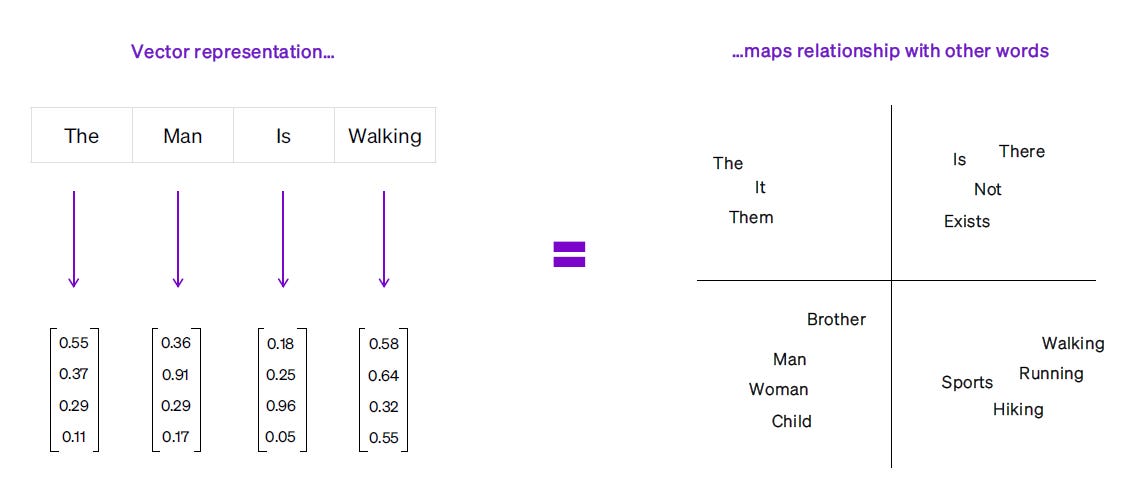

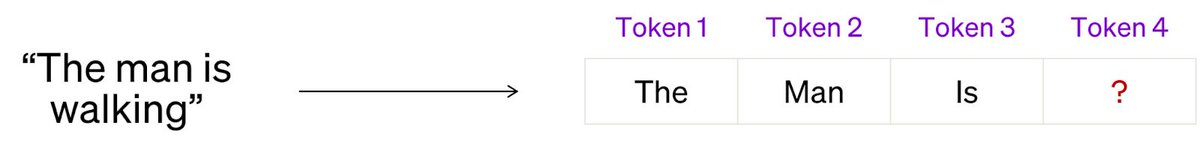

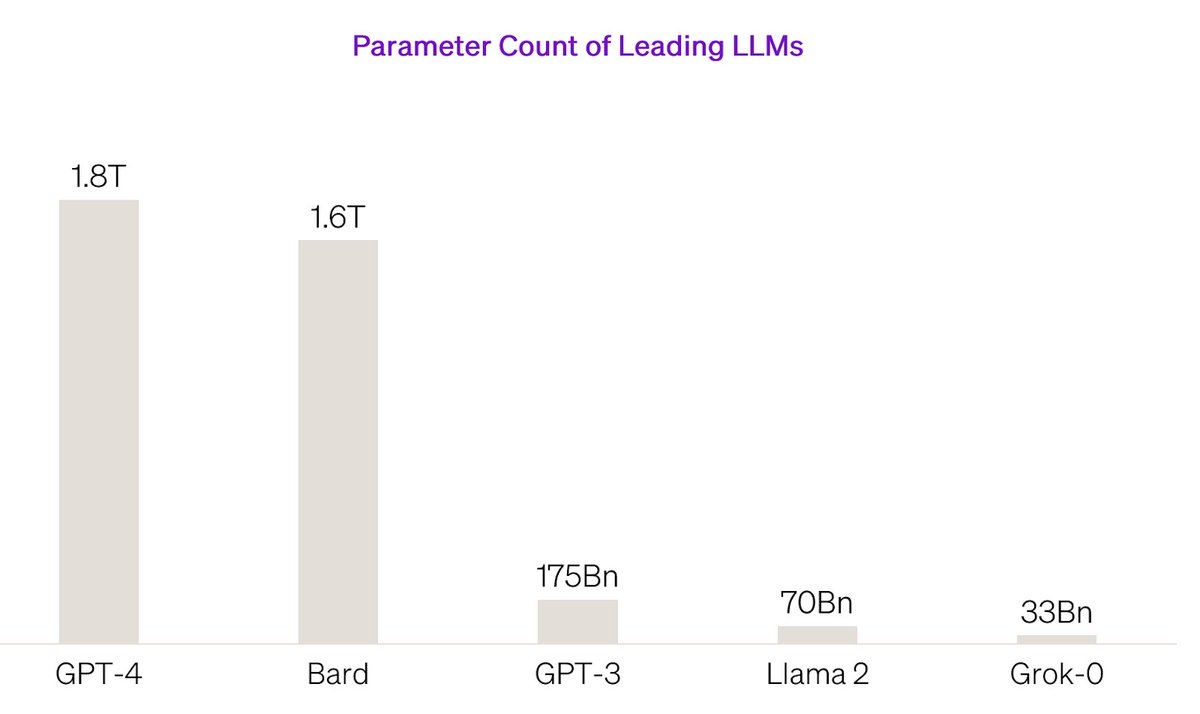

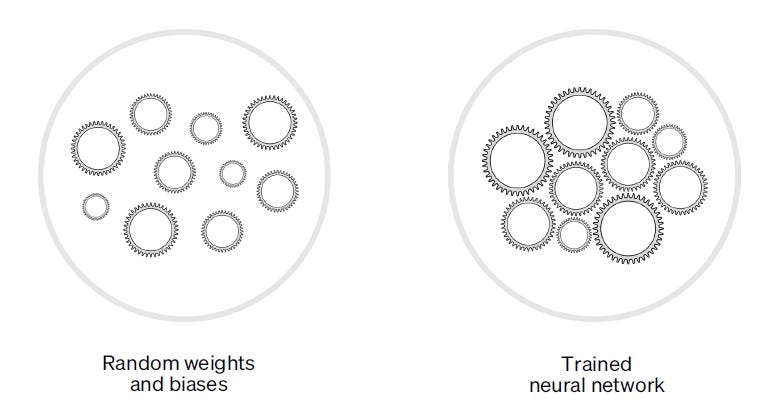

When OpenAI released ChatGPT in November 2022, it took the world by storm, reaching over a million users in only 5 days. This kind of viral attention was previously unheard of in AI, driven by how closely the underlying language model seemed to replicate human...

4. Introducing Stable LM 3B: Bringing Sustainable, High-Performance Language Models to Smart Devices

Stability AI introduced Stable LM 3B, a high-performing language model designed for smart devices. With 3 billion parameters, it outperforms state-of-the-art 3B models and reduces operating costs and power consumption. The model enables a broader...