How to Fine-Tune LLMs in 2024 with Hugging Face

A good paper for a long read, at 114 pages:

"Ultimate Guide to Fine-Tuning LLMs"

Some of the things it covers:

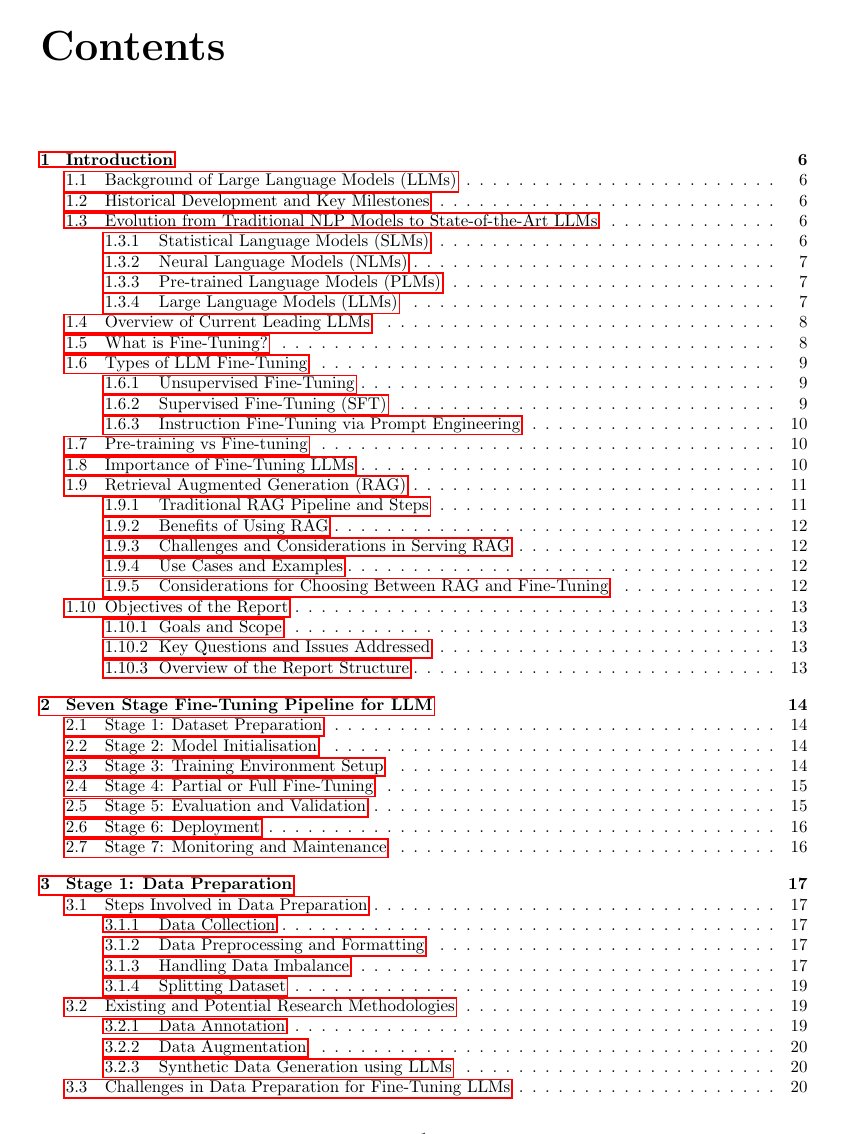

📊 Fine-tuning Pipeline

Outlines a seven-stage process for fine-tuning LLMs, from data preparation to deployment and...

Several methods of fine-tuning an open-source LLM exist:

- *Continued pre-training*: use domain-specific data to apply the same pre-training objective (next-token prediction) to the pre-trained (base) model

- *Instruction fine-tuning*: the pre-trained (base) model is fine-tuned on a Q&A dataset so that it learns to answer questions

- *Single-task fine-tuning*: the...
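To make the difference between the first two methods concrete, here is a minimal sketch of how one training example might be built for each. It is an illustration only: `toy_tokenize`, `continued_pretraining_example`, and `instruction_example` are hypothetical helpers, the whitespace tokenizer stands in for a real one, and masking the question tokens out of the loss is a common convention that the post itself does not mention.

```python
# Label value that PyTorch's cross-entropy loss ignores by default.
IGNORE_INDEX = -100


def toy_tokenize(text, vocab):
    """Hypothetical whitespace tokenizer: maps each word to an integer id."""
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]


def continued_pretraining_example(text, vocab):
    """Raw domain text: every token is a prediction target.

    Labels are a copy of the inputs; Hugging Face causal LM models shift
    them by one position internally to get next-token prediction.
    """
    ids = toy_tokenize(text, vocab)
    return {"input_ids": ids, "labels": list(ids)}


def instruction_example(question, answer, vocab):
    """Q&A pair: only the answer tokens contribute to the loss (assumption)."""
    question_ids = toy_tokenize(question, vocab)
    answer_ids = toy_tokenize(answer, vocab)
    return {
        "input_ids": question_ids + answer_ids,
        "labels": [IGNORE_INDEX] * len(question_ids) + answer_ids,
    }


if __name__ == "__main__":
    vocab = {}
    print(continued_pretraining_example("the patient was given aspirin", vocab))
    print(instruction_example("what was given ?", "aspirin", vocab))
```

In both cases the model is still trained with next-token prediction; what changes is the data and which positions count toward the loss.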
