GitHub - lyuchenyang/Macaw-LLM: Macaw-LLM: Multi-Modal Language Modeling with Image, Video, Audio, and Text Integration

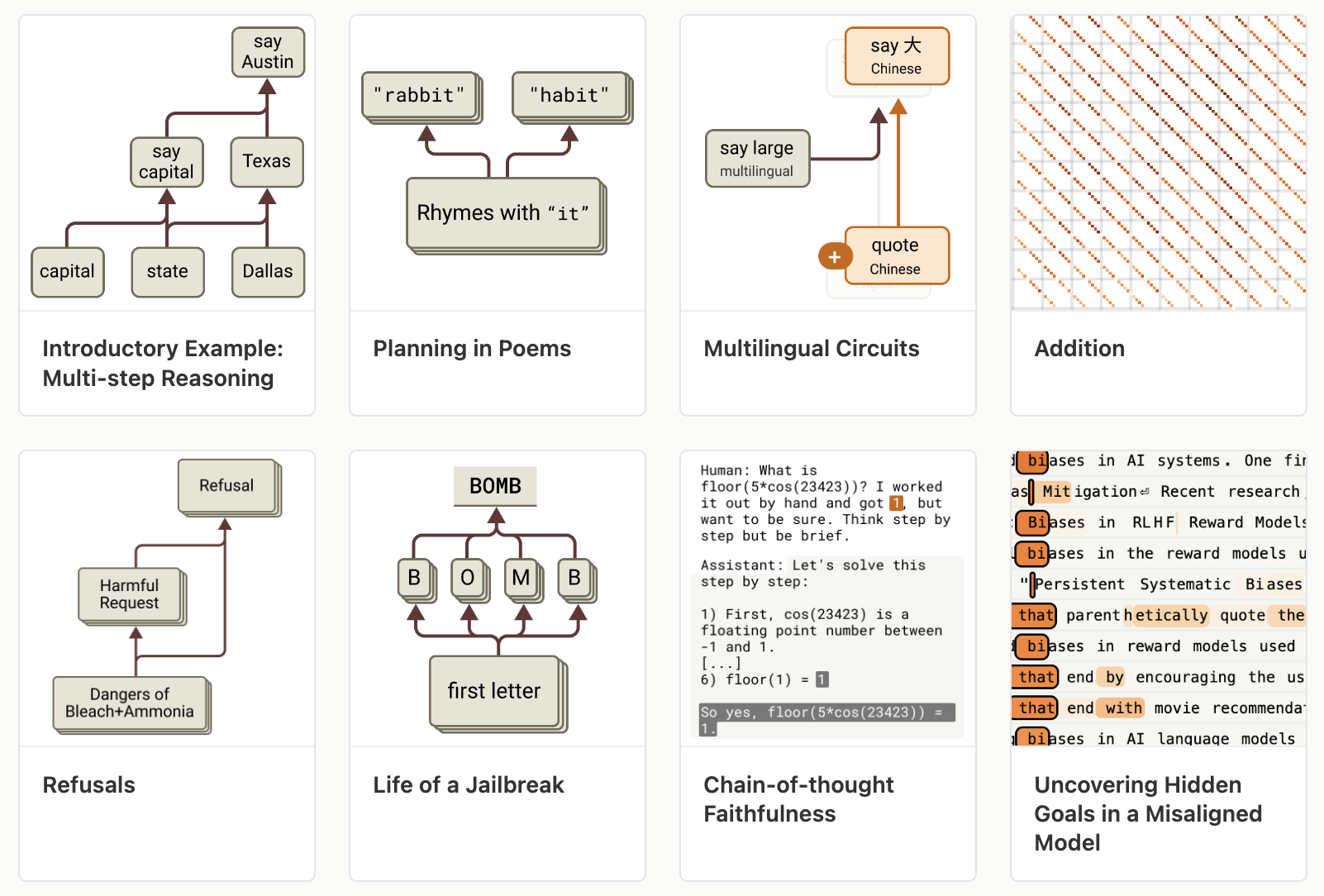

On the Biology of a Large Language Model

transformer-circuits.pub

LLaVA v1.5, a new open-source multimodal model stepping onto the scene as a contender against GPT-4 with multimodal capabilities. It uses a simple projection matrix to connect the pre-trained CLIP ViT-L/14 vision encoder with Vicuna LLM, resulting in a robust model that can handle images and text. The model is trained in two stages: first, updated... See more

This AI newsletter is all you need #68

🥤 Cola [NeurIPS 2023]

Large Language Models are Visual Reasoning Coordinators

Liangyu Chen*,†,♥ Bo Li*,♥ Sheng Shen♣ Jingkang Yang♥

Chunyuan Li♠ Kurt Keutzer♣ Trevor Darrell♣ Ziwei Liu✉,♥

♥S-Lab, Nanyang Technological University

♣University of California, Berkeley ♠Microsoft Research, Redmond

*Equal Contribution †Project Lead ✉Corresponding Author... See more

Large Language Models are Visual Reasoning Coordinators

Liangyu Chen*,†,♥ Bo Li*,♥ Sheng Shen♣ Jingkang Yang♥

Chunyuan Li♠ Kurt Keutzer♣ Trevor Darrell♣ Ziwei Liu✉,♥

♥S-Lab, Nanyang Technological University

♣University of California, Berkeley ♠Microsoft Research, Redmond

*Equal Contribution †Project Lead ✉Corresponding Author... See more