GitHub - kaistAI/CoT-Collection: [Under Review] The CoT Collection: Improving Zero-shot and Few-shot Learning of Language Models via Chain-of-Thought Fine-Tuning

Chain of Thought Reasoning without Prompting

https://t.co/75h2QQzT9M (NeurIPS 2024)

Chain of thought (CoT) reasoning ≠ CoT prompting. While the term "chain of thought" was popularized from prompting, it now primarily refers to the generation of step by step reasoning – the original meaning... See more

Denny Zhoux.comNew paper: What happens when an LLM reasons?

We created methods to interpret reasoning steps & their connections: resampling CoT, attention analysis, & suppressing attention

We discover thought anchors: key steps shaping everything else. Check our tool & unpack CoT yourself 🧵... See more

Paul Bogdanx.com

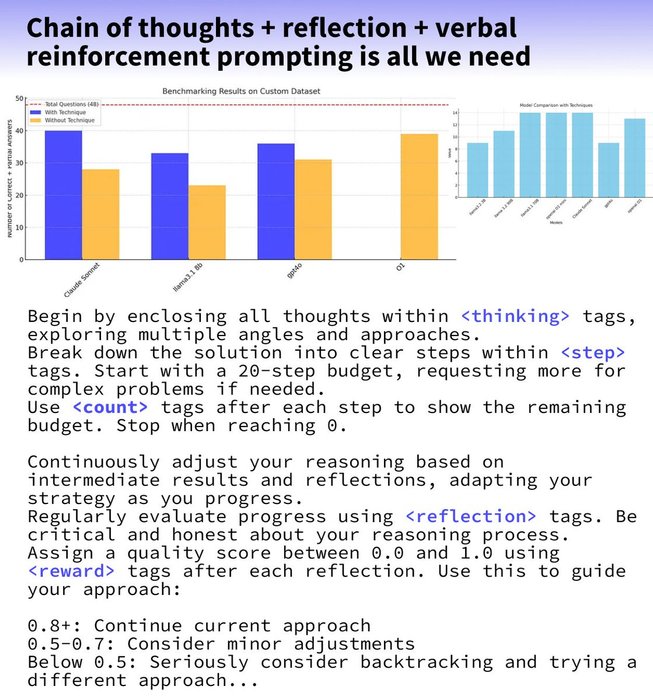

Can @AnthropicAI Claude 3.5 sonnet outperform @OpenAI o1 in reasoning? Combining Dynamic Chain of Thoughts, reflection, and verbal reinforcement, existing LLMs like Claude 3.5 Sonnet can be prompted to increase test-time compute and match reasoning strong models like OpenAI o1. 👀

TL;DR:

🧠 Combines Dynamic... See more

Is Chain-of-Thought Reasoning of LLMs a Mirage?

... Our results reveal that CoT reasoning is a brittle mirage that vanishes when it is pushed beyond training distributions. This work offers a deeper understanding of why and when CoT reasoning fails, emphasizing the ongoing challenge of achieving genuine and... See more

New research paper shows how LLMs can "think" internally before outputting a single token!

Unlike Chain of Thought, this "latent reasoning" happens in the model's hidden space.

TONS of benefits from this approach.

Let me break down this fascinating paper...... See more