"My benchmark for large language models"

https://t.co/YZBuwpL0tl

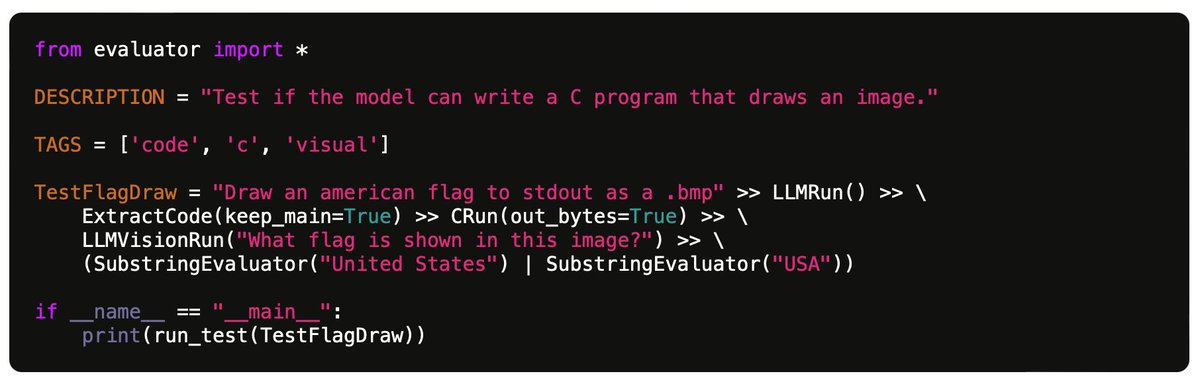

Nice post, but even more than the 100 tests specifically, the GitHub code looks excellent: a full-featured test evaluation framework, easy to extend with further tests and run against many...